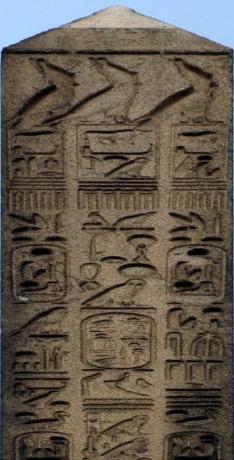

My wife and I were in New York’s Central Park last fall when we saw a nearly 4,000-year-old Egyptian obelisk that has been remarkably well preserved, with hieroglyphs that were clearly legible — to anyone capable of reading them, that is. I’ve included a couple of pictures below to give you a better sense of this ancient artifact — and how it relates to data storage issues.

As we stood wondering at this archaeological marvel, my wife, ever mindful of how I spend the bulk of my time, blurted out, “Rocks do not need backing up!”

Luckily for me, no one backs up data to stone anymore, with the possible exception of the Rosetta project, but my wife raised an important point: electronic data storage and preservation raise a host of technological concerns that the builders of the obelisk never had to consider. Just try reading your backup tape, archived DVD or old Word file after 10 years, much less after thousands of years. Electronic data faces format, migration and data integrity issues that hard copies don’t, although hard copies have their own preservation issues, as archaeologists and document preservation specialists could tell you.

In some ways, the Egyptians with their simpler approach were far better off than we are at recording and saving information. Just look at the well-preserved obelisk as you consider all the formats you probably have lying around that can no longer be accessed, from 5.25-inch floppy disks to 8-track tapes and old home movies. What would it take to preserve those for 3,500 years?

After rocks, the human race moved on to writing on animal skins and papyrus, which were faster at recording but didn’t last nearly as long. Paper and printing presses were even faster, but also deteriorated more quickly. Starting to see a pattern? And now we have digital records, which might last a decade before becoming obsolete. Recording and handing down history thus becomes an increasingly daunting task, as each generation of media must be migrated to the next at a faster and faster rate, or we risk losing vital records.

Paper was the medium of choice until about 10 to 15 years ago. Before that, digital storage was far too expensive. Today, we store just about everything digitally, from home pictures, music and movies to feature films, medical records, documents and personal communications like e-mail. But our brave new digital world poses a number of significant problems for future generations, such as formats, frameworks, interfaces and data integrity, that need to be solved through the standards process so that our digital records can be preserved and handed down more easily. Nothing less than the preservation of our history depends on it.

Photos by Lisa Belvito

Metadata Framework

The first thing we need is a standardized framework for file metadata, backup and archival information (see File System Management Is Headed for Trouble).

What we need is a framework that can transfer and maintain metadata between systems. Some home file systems have ways of adding metadata, but that metadata is not transferable between operating systems. All you get is the POSIX-based information when transferring between Apple, Microsoft and Linux systems, which does not provide much incentive to add the metadata in the first place. What if there is a disaster? Does this type of information even get transferred to a backup device? Transport protocols such as FTP, NFS and CIFS do not transfer the metadata between systems, except perhaps between like systems. On Microsoft systems, most secondary devices are formatted with the FAT file system instead of NTFS, and FAT does not support some of the metadata features that NTFS has.

On the enterprise side, vendors either have proprietary frameworks or put everything into a database, which is used to access the file system or manage the storage space, and an application is written to display and manipulate the file metadata. This approach is not very portable and is often expensive to maintain.
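To make the portability problem concrete, here is a minimal sketch of one common workaround: keeping descriptive metadata in a plain JSON "sidecar" file that travels with the data over any protocol. It is an illustration only, not a proposed standard. The `.meta.json` naming rule, the field names and the helper functions are all hypothetical.

```python
import json
from pathlib import Path


def sidecar_path(path: Path) -> Path:
    """Hypothetical naming rule: report.txt -> report.txt.meta.json."""
    return path.with_name(path.name + ".meta.json")


def write_sidecar(path: Path, descriptive: dict) -> Path:
    """Save user-supplied metadata plus a few stat() facts as plain JSON."""
    st = path.stat()
    record = {
        "file_name": path.name,
        "size_bytes": st.st_size,
        "modified_epoch": int(st.st_mtime),
        "descriptive": descriptive,  # free-form keys chosen by the user
    }
    out = sidecar_path(path)
    out.write_text(json.dumps(record, indent=2, sort_keys=True), encoding="utf-8")
    return out


def read_sidecar(path: Path) -> dict:
    """Load the metadata record stored next to the given file."""
    return json.loads(sidecar_path(path).read_text(encoding="utf-8"))
```

Because the sidecar is an ordinary text file, it survives a copy to a FAT-formatted drive or a transfer over FTP. The weakness is the one the article describes: nothing forces two tools to agree on the field names, and the sidecar can be separated from its file.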

Storage Drives and Interfaces

It wasn’t all that long ago that we were using 5.25-inch floppy drives to back up our systems. Then came 3.5-inch drives and CD-ROMs, and now DVDs. Maybe this year we’ll see Blu-ray recording drives, and then something else a few years from now. Are the drivers available on Windows and Mac systems to support these devices?

On the enterprise side during the same time period we had ER-90s, Redwood, 9940A, 9940B, DLT and lots of other technologies. The only technology that seems to have long-term support for the enterprise is 3480 and 3490 tape drives on mainframes. The same can be said about the channels that interconnect these technologies. Where is SCSI-FW, where is FC-AL (Fibre Channel arbitrated loop, for those of you not old enough to remember), and even where is FC-2? All of these communications interfaces are dead (end of life and end of service), and even if they were alive, what operating system today has drivers to support them? What if there were a driver bug that needed to be fixed? IBM does this for mainframes, but not for the general purpose, open system enterprise environment; it is too difficult and far too expensive.

Clearly, as technology changes, you must migrate your old data, whether it is your home machine or the systems that you use at work. This was not required for rocks, of course. All that was needed was to understand the language the rocks were written in, and we have been able to do that for just about all forms of written communications.

Data Integrity

Just like with a poor language translation, the integrity of modern data is not guaranteed except at high cost. File systems and storage management frameworks such as ZFS and Hadoop might verify a checksum, but such solutions are beyond the average home user. Low-power options such as flash do not solve the problem either, as they have other issues. The hard error rate of disk drives has not changed very much over the last 15 years even as the density has increased significantly. This hard error rate means that disk drives, whether they are enterprise or consumer, are going to fail, and when they do the result is data loss and lots of time spent rebuilding the environment. You can add hardware and reduce the likelihood that this will happen, but that does not eliminate the problem either at home or at work. You can throw lots of money at the problem and build a very high reliability archive, but that isn’t something that even many enterprises can afford.
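As a rough illustration of what "verifying a checksum" means outside of a file system like ZFS, the sketch below records a SHA-256 digest for every file under a directory and later reports which files have gone missing or changed. It uses only the Python standard library. The function names and the manifest layout are assumptions made for this example, and the approach only detects damage; it does not repair it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: dict) -> list:
    """Return (relative_path, problem) pairs for missing or altered files."""
    problems = []
    for rel, expected in manifest.items():
        target = root / rel
        if not target.is_file():
            problems.append((rel, "missing"))
        elif sha256_of(target) != expected:
            problems.append((rel, "changed"))
    return problems
```

A manifest built with `build_manifest` would need to be stored somewhere other than the drive it describes, and `verify_manifest` would need to be run on a schedule. Recovery still depends on having a second good copy, which is where the cost comes in.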

Clearly, rocks have some advantage here as long as they remain intact. With electronic records, if there is a device failure, reading the data requires specialized expertise, and even with that, much of the data will likely be lost.

Data Format

Has anyone tried opening an MS Word document circa 1990 with Word 2007? We all know that all data formats have a limited lifespan. That lifespan might be longer with something like PDF or shorter with other applications, but nothing is guaranteed for very long, and formats can change very quickly. We have no real framework to change and transcribe formats. With Windows, you know the file type by the extension, and that can be misleading. With Mac OS, there is extra metadata for each file that does not translate to Windows, and in the enterprise on UNIX systems there is nothing to help you. Rocks, on the other hand, have only the same language translation issues that we face today.
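To show why an extension alone can mislead, here is a small sketch that ignores the file name and guesses the format from the first few bytes of the file. The byte signatures listed are the well-known ones for these formats, but the table is deliberately tiny and the function names are made up for this example. Older formats, such as very early Word files, would need their own entries.

```python
from pathlib import Path

# (leading bytes, label) pairs; a short, non-exhaustive table.
SIGNATURES = [
    (b"%PDF-", "pdf"),
    (b"\x89PNG\r\n\x1a\n", "png"),
    (b"\xff\xd8\xff", "jpeg"),
    (b"GIF8", "gif"),
    (b"PK\x03\x04", "zip"),  # also the container for .docx, .xlsx, .odt
    (b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1", "ole2"),  # e.g. Word 97-2003 .doc
]


def sniff_bytes(head: bytes) -> str:
    """Return a format label based on the leading bytes, or 'unknown'."""
    for magic, label in SIGNATURES:
        if head.startswith(magic):
            return label
    return "unknown"


def sniff_file(path: Path) -> str:
    """Read only the start of the file; the extension is never consulted."""
    with path.open("rb") as fh:
        return sniff_bytes(fh.read(16))
```

A file named `letter.doc` that actually begins with `%PDF-` would be reported as `pdf`. Knowing the container is only the first step, though. It says nothing about whether any current application can still interpret the contents.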

My wife is not in the data storage business, but she clearly understands that digital data management is far more complex than information management used to be. Digital data management concepts, technologies and standards just do not exist today. I don’t know of anyone or anything that addresses all of these problems, and if it is not being done by a standards body, it will not help us manage the data in the long run. It is only a matter of time until a lot of data starts getting lost. A few thousand years from now, what will people know about our lives today? If we are to leave obelisks for future generations, we’d better get started now.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 28 years of experience in high-performance computing and storage.
