Here is my working hypothesis: POSIX (Portable Operating System Interface) cannot scale to meet the demands of clouds and big data. REST (Representational State Transfer) cannot yet manage and tier data the way POSIX can, but it will likely gain those features in the next few years and then take off as the new standard data interface of the cloud era.

There is a lot going on in the archive world. Archives are becoming far more important as companies and researchers look back at their data to gain a better understanding of our world and to help predict the future.

Let’s first address the difference between an archive and hierarchical storage management (HSM). My view is that an archive is about storing data that will be needed in the future, while HSM is about managing that archive with hierarchies of storage. There is a lot of money in predicting the future, whether for commodities traders, the healthcare industry, agricultural planning or some other industry. Some archives use tiers of disk, but the ones I am talking about use tape as one of the tiers, given its high reliability and lower cost.

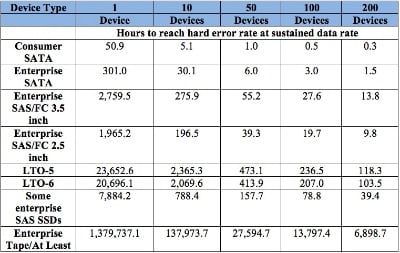

This a chart that illustrates these concepts:

But this is an article on archive interfaces, not on the underlying archive. Some industries, such as geoscience, where companies collect seismic information from around the world, have known about the importance of archives for decades. All of the vendors in this market that I am aware of use archives with POSIX interfaces.

The archive of a geoscience company contains its intellectual property and must be preserved and usable in the future, because new algorithms have allowed these companies both to find oil in old survey data and to find better ways to extract oil and gas. Every industry I have worked with that has an HPC compute environment has an archive, and some of those archives hold decades of data.

Other examples include weather forecasting, aircraft and automotive simulation, and climate modeling. Whatever the simulation environment or industry, every one of these environments that I am aware of accesses its data today through a POSIX file system interface.

This interface either runs directly on the system or is accessed via NFS, CIFS, an archive-specific API and/or FTP. Today most, if not all, HSM software uses this POSIX interface. The companies that make this software have long worked in industries that need tiered storage and high reliability.

There is a company, which shall remain nameless, that has been saying for over two decades that tape is dead. Tape is still not dead, and I believe it is actually making a comeback for POSIX archives, given the cost differences. Some will point to the Santa Clara Group’s reporting on the LTO market, which shows significant sales declines, as proof that tape is dead. But the Santa Clara Group does not and cannot report on enterprise tape, because the enterprise tape vendors do not report their sales.

Enterprise tape offers greater reliability, higher performance and more density than LTO tape, and in some cases it costs about the same overall: the higher density means you need fewer cartridge slots in the library, and the higher performance means you need fewer tape drives.

The point I will try to make is that the interface to the archive is going to change dramatically over the next 5 years, from POSIX to REST. The people developing REST interfaces are going to have to tier storage, given the cost and density improvements in tape. Here are some of the reasons:

1. Cloud environments such as Dropbox, SkyDrive, Google and AWS

2. POSIX is not changing, yet it needs to change to support long-term archives

3. REST and similar interfaces are seeing far more development

Let’s review each of these.

Clouds

Whether the cloud is private or public, the ability to drag and drop information that you could not afford to keep on local storage means that a great deal more data is being saved.

So now you can save a lot more stuff without really seeing the charges. That means the people who run these sites are going to have to tier storage to reduce their costs.

Clouds make it easy to consolidate data from all departments and groups, and people will keep even more stuff than they do now because space is unlimited. Right? Just look at how AWS, Dropbox, Google Drive and SkyDrive are being used while their prices keep dropping, faster than disk density is growing or disk prices are falling, neither of which has changed much lately.

People often dump lots of data that they never use. Here is a good example. I had lots of videos of my grandmother, and when she died a few years ago I reviewed them and put a few together for a family get-together. I added up the space taken by my pictures and videos of her, and it came to about 5 GiB.

That is not a lot, but suppose the data had been in the cloud and much of it had gone unused for 5 or 10 years. It could then have been moved automatically to devices that use little power and have a lower cost point, as tape does today.
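The automatic move described above amounts to an age-based migration policy. The sketch below is only an illustration under stated assumptions: the `StoredObject` class, the `apply_tiering_policy` function and the 5-year `COLD_AFTER` threshold are hypothetical names chosen for this example, not any vendor’s HSM or cloud API, and only the tier label changes (no bytes are copied).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical threshold: the post talks about data unused for 5 to 10 years,
# so 5 years (ignoring leap days) is used here for illustration only.
COLD_AFTER = timedelta(days=5 * 365)


@dataclass
class StoredObject:
    name: str
    size_bytes: int
    last_access: datetime
    tier: str = "disk"  # "disk" or "tape" in this sketch


def apply_tiering_policy(objects, now):
    """Move objects untouched for at least COLD_AFTER from disk to tape.

    Only the tier label changes here; a real system would also copy the
    bytes to tape and release the disk space. Returns the migrated names.
    """
    migrated = []
    for obj in objects:
        if obj.tier == "disk" and now - obj.last_access >= COLD_AFTER:
            obj.tier = "tape"
            migrated.append(obj.name)
    return migrated


catalog = [
    StoredObject("grandma_2009.mp4", 2_000_000_000, datetime(2012, 6, 1)),
    StoredObject("recent_photo.jpg", 3_000_000, datetime(2023, 12, 1)),
]
print(apply_tiering_policy(catalog, now=datetime(2024, 1, 1)))  # ['grandma_2009.mp4']
```

A real policy engine would likely also weigh file size and access frequency, but last-access age is the simplest rule that matches the scenario in the text.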

If I went to get these files and saw a message saying that the download would begin in about 3 minutes (more than enough time to wait for a busy tape drive, pick and load a tape, and read my files), it would not bug me at all, especially if I were paying less for storage or using a free service.

Even if this were business data, most people who had not accessed it for 3 to 5 years could wait 3 to 5 minutes to get it back. In my opinion, given today’s network latencies and bandwidth, you are going to wait anyway to put big files into the cloud or to get them out.

So if your data is not local, you are going to wait at least a few minutes for it to arrive. Here is what I mean. Take my 5 GiB of data. The download would start in 3 minutes or so. My home Internet connection runs at 35 Mbit/sec, which gives me, say, 4 MiB/sec of effective download throughput, or 1,280 seconds (a bit over 21 minutes) to get all my data back. In my opinion, that is a small price to pay if my data is already in the cloud and I am paying less for secondary storage.
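The arithmetic above can be checked with a few lines of code. This is a sketch that assumes the 4 MB/sec figure means 4 MiB/sec (binary units, matching the 5 GiB size), which is what makes the 1,280-second result come out exactly; `retrieval_seconds` is a hypothetical helper name.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def retrieval_seconds(size_bytes, throughput_bytes_per_s, first_byte_delay_s=0.0):
    """Time to get data back: delay before the first byte plus transfer time."""
    return first_byte_delay_s + size_bytes / throughput_bytes_per_s


# Figures from the example above: 5 GiB at an effective ~4 MiB/sec,
# with roughly a 3-minute wait while a tape is picked and loaded.
transfer_only = retrieval_seconds(5 * GIB, 4 * MIB)                          # 1280 s
with_recall = retrieval_seconds(5 * GIB, 4 * MIB, first_byte_delay_s=180)   # 1460 s

# Nominal line rate: 35 Mbit/sec is 4.375 MB/sec (decimal), before protocol overhead.
nominal_bytes_per_s = 35_000_000 / 8

print(f"nominal line rate: {nominal_bytes_per_s / MIB:.2f} MiB/sec")
print(f"transfer only: {transfer_only / 60:.1f} min")
print(f"with tape recall: {with_recall / 60:.1f} min")
```

The nominal rate works out to about 4.17 MiB/sec, so "say, 4 MiB/sec" leaves a little room for overhead. Adding the 3-minute recall brings the total to roughly 24 minutes, and the transfer, not the tape mount, dominates the wait.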

The bottom line is that most, if not all, cloud implementations use a REST or SOAP interface; what we see in our directories is built on those interfaces, not on POSIX. What is missing is a rich set of interfaces that could automatically provide hints for tiering storage, guarantee data integrity and show approximately how long it will take to get my data back.
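To make the missing pieces concrete, here is a hypothetical sketch of the kind of metadata response such an interface might return. No current standard defines these fields: `describe_object`, `FIRST_BYTE_DELAY_S` and every JSON key below are invented for illustration, and the tape delay simply reuses the 3-minute recall from the example above.

```python
import json

GIB = 1024 ** 3
MIB = 1024 ** 2

# Hypothetical delay before the first byte for each tier; the tape figure
# reuses the ~3-minute recall from the example and is only a placeholder.
FIRST_BYTE_DELAY_S = {"disk": 0, "tape": 180}


def describe_object(name, size_bytes, tier, sha256_hex, client_bytes_per_s):
    """Build a hypothetical metadata response: which tier holds the object,
    a checksum the client can verify after download, and a rough ETA."""
    eta = FIRST_BYTE_DELAY_S[tier] + size_bytes / client_bytes_per_s
    return {
        "name": name,
        "size_bytes": size_bytes,
        "tier": tier,
        "sha256": sha256_hex,
        "estimated_seconds_to_complete": round(eta),
    }


response = describe_object(
    "grandma_videos.tar", 5 * GIB, "tape", "<checksum stored at upload time>", 4 * MIB
)
print(json.dumps(response, indent=2))
```

The three fields map to the three gaps named in the text: `tier` is the tiering hint, `sha256` supports an end-to-end integrity check, and `estimated_seconds_to_complete` tells the user roughly how long the retrieval will take.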

POSIX Development

The POSIX file system interface has not changed in decades, even though the underlying technology has changed a great deal. For the most part, the vendors that control the standard do not want to change anything, because change costs significant money: developing the features, developing and running tests for the new functionality, and buying the hardware to test on. And who is going to pay for these changes?

This was, and is, myopic thinking on the part of the vendors controlling POSIX: a good short-term strategy, but not good for the long haul. Today we have no large SAN or local file systems that scale, and Red Hat’s supported limit for XFS (its largest supported file system) is still only 100 TB, which is tiny given the size of the file systems people really want.

We do have a few parallel file systems that scale to tens of petabytes in a single namespace, mostly delivered as parallel file system appliances, but for the most part that is it. Interfaces to tiered storage and its policies are not standardized, and as far as I can tell there are no plans to standardize them. I actually made a proposal to some groups to try to add this, but got nowhere.

At this point, scaling to tens of billions of files in a single namespace is difficult and often expensive. Recovering a POSIX file system is a long process, given the required consistency and the fact that the file system, by default, has to arbitrate between two threads trying to write to the same file.

The real issue is that there is no standard way for the file system to move data into and out of secondary storage. Twenty years ago there were several dozen POSIX HSM vendors and systems. Today there are fewer than 10 from what I can determine, and most of them are not growing anywhere near as rapidly as cloud storage.

REST Development

If you are under 30 and doing cloud or other development, you are most likely developing to a REST or REST-like interface rather than using a POSIX file system for your cloud. This gives you far more flexibility than POSIX does, because instead of the backend having to be a file system that deals with the VFS layer and inodes, most REST implementations, from what I can tell, use a database to manage all of the files.
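A minimal sketch of that database-backed approach, assuming a single SQL table serves as the object catalog. The schema, the `put_object` and `list_prefix` names, and the storage locations are hypothetical; real object stores differ widely, and this only shows the general idea of keys mapping to rows instead of directory entries and inodes.

```python
import sqlite3

# Hypothetical schema: each object key maps to one metadata row. There are
# no directories or inodes; "folders" are just shared key prefixes.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE objects (
        bucket     TEXT NOT NULL,
        key        TEXT NOT NULL,
        size_bytes INTEGER NOT NULL,
        location   TEXT NOT NULL,               -- where the bytes actually live
        tier       TEXT NOT NULL DEFAULT 'disk',
        PRIMARY KEY (bucket, key)
    )
    """
)


def put_object(bucket, key, size_bytes, location):
    # INSERT OR REPLACE gives simple last-writer-wins semantics for a PUT.
    with conn:
        conn.execute(
            "INSERT OR REPLACE INTO objects (bucket, key, size_bytes, location) "
            "VALUES (?, ?, ?, ?)",
            (bucket, key, size_bytes, location),
        )


def list_prefix(bucket, prefix):
    # A "directory listing" is a prefix query over keys, not a directory read.
    rows = conn.execute(
        "SELECT key FROM objects WHERE bucket = ? AND substr(key, 1, ?) = ? ORDER BY key",
        (bucket, len(prefix), prefix),
    ).fetchall()
    return [row[0] for row in rows]


put_object("family", "videos/grandma/2009.mp4", 2_000_000_000, "node7:/data/ab12")
put_object("family", "videos/grandma/2011.mp4", 1_500_000_000, "node3:/data/cd34")
put_object("family", "photos/2010.jpg", 3_000_000, "node1:/data/ef56")
print(list_prefix("family", "videos/grandma/"))
# ['videos/grandma/2009.mp4', 'videos/grandma/2011.mp4']
```

The `tier` column is where an archival policy could hook in: migrating an object would update its `location` and `tier` in one transaction, with no POSIX namespace to keep consistent.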

Ask yourself: when was the last time the industry had 10 file system announcements in a quarter? My bet is the late 1990s, while today we sometimes get 10 announcements about cloud interfaces and the like in a single week. REST and REST-like interfaces are gaining massive momentum, but almost every vendor I have looked at is missing one thing: tiered storage where an archival policy can be set.

Of course, there is Amazon Glacier. But when my next relative dies and I want all the data back, I do not want to pay a fortune or have to figure out what I can bring back on which day, compared with what POSIX HSM can do today.

Last Thoughts

From the 1980s until about the 1990s, the POSIX file system world lived without HSMs, and users had to figure out how to archive their data themselves. During this period, mainframe operating systems, which had HSMs, mostly managed those tasks, and it was difficult to move data back and forth.

When file system vendors added HSMs to their POSIX file systems, the world changed. Users wanted to integrate archives into large file systems and to move off the mainframes so that computation and data were closer to their applications. The trend in the 1990s was to put the archive near the computation, but that changed as disk drive capacities increased significantly and many sites no longer needed archives.

The trend changed again in the late 2000s, when disk drive capacity growth slowed while tape capacities, at least for enterprise tape, increased tremendously compared with disk. HSM and archives (aka tiered storage) now made significant financial sense for everyone.

This is about when REST interfaces became very popular, but their developers took the replicate-onto-spinning-disk approach, given disk drive density. It took a good 10 years after POSIX file systems appeared under UNIX before someone developed an HSM to tier data from a POSIX file system. My bet is that history will repeat itself for REST and clouds, a bit more quickly this time, and that we will see automatic tiered storage.

My hope this time is that standard policies and interfaces will be developed; their absence from the POSIX interface is, in my opinion, what prevented broad adoption.