Virtualization for servers, storage and networks is not new, with years, if not decades, of proprietary implementations. It can be used to emulate, abstract or aggregate physical resources like servers, storage and networks. What is new, and growing in popularity, are open systems-based technologies that address the sprawl of open servers, storage and networks to contain cost, work within power or cooling limitations and boost resource utilization, while improving infrastructure resource management.

With the growing awareness of server virtualization (VMware, Xen, Virtual Iron, Microsoft), not to mention traditional server platform vendor hypervisors and partition managers and storage virtualization, the terms virtual I/O (VIO) and I/O virtualization (IOV) are coming into vogue as a way to reduce I/O bottlenecks created by all that virtualization. Are IOV and VIO a server topic, a network topic or a storage topic? The answer is that, like server virtualization, IOV involves servers, storage, networks, operating systems and other infrastructure resource management technology domains and disciplines.

You Say VIO, I Say IOV

Not surprisingly, given how terms like grid and cluster are interchanged, mixed and tuned to meet different needs and product requirements, IOV and VIO have also been used to mean various things. They’re being used to describe functions ranging from reducing I/O latency and boosting performance to virtualizing server and storage I/O connectivity.

Virtual I/O acceleration can boost performance, improve response time and latency and essentially make an I/O operation appear to the user or application as though it were virtualized. Examples of I/O acceleration techniques, in addition to Intel processor-based technologies, include memory or server-based RAM disks and PCIe card-based FLASH/NAND memory solid state disk (SSD) devices like those from FusionIO, which are accessible only to the local server unless exported via NFS or on a Microsoft Windows Storage Server-based iSCSI target or NAS device. Other examples include shared external FLASH or DDR/RAM-based SSD like those from Texas Memory (TMS), SolidData or Curtis, along with caching appliances for block- or file-based data, such as those from Gear6 that accelerate NFS-based storage systems from EMC, Network Appliance and others.

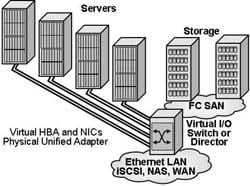

Another form of I/O virtualization (IOV) is that of virtualizing server-to-server and server-to-storage I/O connectivity. Components for implementing IOV to address server and storage I/O connectivity include virtual adapters, switches, bridges or routers, also known as I/O directors, along with physical networking transports, interfaces and cabling.

|

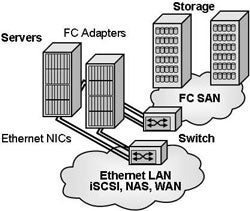

| Figure-1: Traditional separate interconnects for LANs and SANs |

Virtual N_Port and Virtual HBAs

Virtual host bus adapters (HBAs) or virtual network interface cards (NICs), as their names imply, are virtual representations (Figure 2 below) of a physical HBA (Figure 1 above) or NIC, similar to how a virtual machine emulates or represents a physical machine with a virtual server. With a virtual HBA or NIC, real or physical adapter resources are carved up and allocated in much the same way as virtual machines, but instead of hosting a guest operating system like Windows, UNIX or Linux, a Fibre Channel HBA or Ethernet NIC is presented.

On a traditional physical server, the operating system would see one or more instances of Fibre Channel and Ethernet adapters, even if only a single physical adapter such as an InfiniBand-based HCA were installed in a PCI or PCIe slot. In the case of a virtualized server such as VMware ESX, the hypervisor would be able to see and share a single physical adapter, or multiple for redundancy and performance, to guest operating systems that would see what appears to be a standard Fibre Channel and Ethernet adapter or NIC using standard plug and play drivers.

Not to be confused with a virtual HBA, N_Port ID Virtualization (NPIV) is essentially a fan-out (or fan-in) mechanism to enable shared access to an adapter's bandwidth. NPIV is supported by Brocade, Cisco, Emulex and QLogic adapters and switches to enable LUN and volume masking or mapping to a unique virtual server or VM initiator when using a shared physical adapter (N_Port). NPIV works by presenting multiple virtual N_Ports and unique IDs so that different virtual machines (initiators) can have access and path control to a storage target when sharing a common physical N_Port on a Fibre Channel adapter.
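To make the NPIV idea concrete, here is a minimal Python sketch of one physical N_Port presenting several virtual port identities, with a storage target masking LUNs per identity. It is a toy model, not a vendor API: the class names, method names and WWPN strings are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalNPort:
    """One physical Fibre Channel N_Port shared by several VMs (hypothetical model)."""
    wwpn: str
    virtual_ports: dict = field(default_factory=dict)  # VM name -> virtual WWPN

    def add_virtual_port(self, vm_name: str, virtual_wwpn: str) -> None:
        # Each VM gets its own unique virtual identity on the shared physical port.
        if virtual_wwpn in self.virtual_ports.values():
            raise ValueError(f"duplicate virtual WWPN: {virtual_wwpn}")
        self.virtual_ports[vm_name] = virtual_wwpn


@dataclass
class StorageTarget:
    """Storage target that masks LUNs per initiator identity (hypothetical model)."""
    lun_masks: dict = field(default_factory=dict)  # initiator WWPN -> set of LUN ids

    def map_lun(self, initiator_wwpn: str, lun: int) -> None:
        self.lun_masks.setdefault(initiator_wwpn, set()).add(lun)

    def visible_luns(self, initiator_wwpn: str) -> set:
        # An initiator sees only the LUNs mapped to its own identity.
        return set(self.lun_masks.get(initiator_wwpn, set()))


if __name__ == "__main__":
    port = PhysicalNPort(wwpn="10:00:00:00:c9:00:00:01")  # made-up WWPN
    port.add_virtual_port("vm-a", "20:00:00:00:c9:00:00:0a")
    port.add_virtual_port("vm-b", "20:00:00:00:c9:00:00:0b")

    target = StorageTarget()
    target.map_lun(port.virtual_ports["vm-a"], 0)
    target.map_lun(port.virtual_ports["vm-b"], 1)

    for vm, vwwpn in port.virtual_ports.items():
        print(vm, sorted(target.visible_luns(vwwpn)))
```

Because masking is keyed on the virtual identity rather than on the shared physical port, each VM keeps its own view of storage even though all traffic crosses the same adapter.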

The business and technology benefits of converged I/O networks and virtual I/O are similar to those for server and storage virtualization. For IOV, they include:

- Doing more with what resources (people and technology) you have or reducing costs

- A single (or pair for high availability) interconnect for networking and storage I/O

- Reduction of power, cooling, floor space and other green friendly benefits

- Simplified cabling and reduced complexity of server to network and storage interconnects

- Boosting clustered and virtualized server performance, maximizing PCI or mezzanine I/O slots

- Rapid re-deployment to meet changing workload and I/O profiles of virtual servers

- Scaling I/O capacity to meet high-performance and clustered server or storage applications

- Leveraging common cabling infrastructure and physical networking facilities
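The cabling benefit is easy to estimate with back-of-the-envelope arithmetic. The sketch below compares cable counts for separate LAN and SAN connections against a converged pair per server. The function name and the port counts in the example are assumptions for illustration, not figures from any particular deployment.

```python
def cable_count(servers: int, lan_ports: int, san_ports: int, converged_ports: int) -> dict:
    """Compare server-side cable counts for separate versus converged interconnects.

    All inputs are per-server port counts supplied by the caller (assumed values).
    """
    separate = servers * (lan_ports + san_ports)
    converged = servers * converged_ports
    return {"separate": separate, "converged": converged, "saved": separate - converged}


if __name__ == "__main__":
    # Assumed example: 100 servers, each with 2 LAN and 2 SAN ports today,
    # replaced by a redundant pair of converged ports per server.
    print(cable_count(servers=100, lan_ports=2, san_ports=2, converged_ports=2))
```

With those assumed numbers, the server-side cable count drops from 400 to 200. Real savings depend on how many adapters and redundant paths each server actually needs.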

|

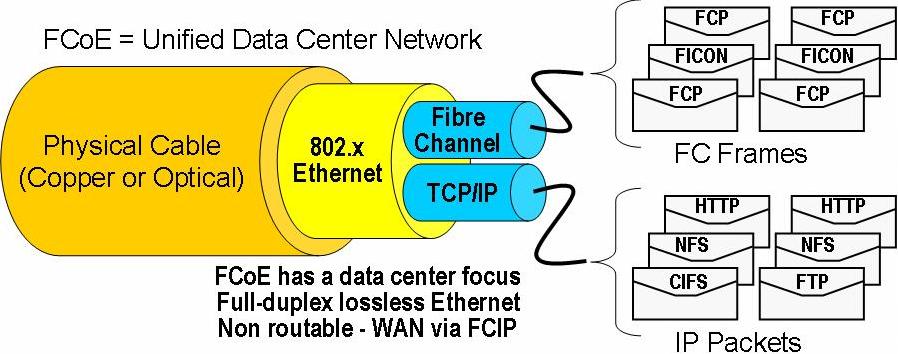

| Figure 2: Example of a unified or converged data center fabric or network |

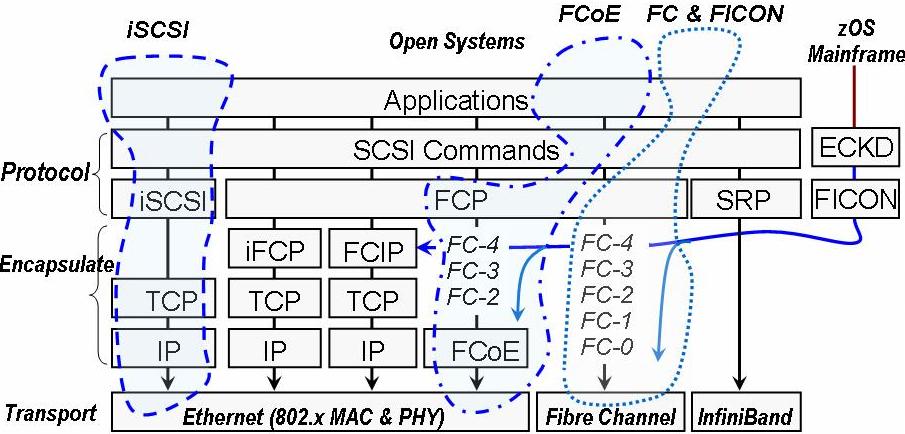

In Figure 2, you see an example of virtual HBAs and NICs attached to a switch or I/O director that in turn connects to Ethernet-based LANs and Fibre Channel SANs for network and storage access. Figure 3 shows a comparison of various I/O interconnects, transports and protocols to help put into perspective where various technologies fit. You can learn more about storage networks, interfaces and protocols in chapters 4 (Storage and I/O Networks), 5 (Fiber Optic Essentials) and 6 (Metropolitan and Wide Area Networks) in my book, “Resilient Storage Networks” (Elsevier).

Figure 3: Positioning of data center I/O protocols, interfaces and transports

Data Center Ethernet and FCoE

Data center Ethernet (DCE) is a new evolution and extension of existing Ethernet to address higher performance as well as lower latency I/O demands for data centers as a unified interconnect for both network and storage traffic. An example of DCE implementation is Fibre Channel over Ethernet (FCoE), which leverages lower latency, quality of service (QoS), priority groups and other enhancements over traditional Ethernet to be used as a robust storage interconnect.

Figure 4 shows how traditionally separate fiber optic cables are dedicated (in the absence of wave division multiplexing, or WDM) to Fibre Channel SAN and Ethernet or IP-based networks. With FCoE (Figure 5), Fibre Channel absent of the lowest physical layers is mapped onto Ethernet to co-exist with other traffic and protocols, including TCP/IP. Note that FCoE is targeted for the data center as opposed to long distance, which would continue to rely on FCIP (Fibre Channel mapped to IP) or WDM-based MAN for shorter distances.
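The mapping of Fibre Channel onto Ethernet can be sketched in a few lines of Python. This is a simplified illustration, not a conformant implementation: it assumes an Ethernet header with the FCoE EtherType (0x8906), a 14-byte FCoE header ending in a start-of-frame byte, the untouched FC frame, and a 4-byte trailer beginning with an end-of-frame byte. The function names are hypothetical, the default SOF and EOF byte values are placeholders rather than verified code points, and VLAN tags and the Ethernet FCS are omitted.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # EtherType used to identify FCoE traffic
ETH_HEADER_LEN = 14      # destination MAC + source MAC + EtherType
FCOE_HEADER_LEN = 14     # version/reserved bits (13 bytes here) + SOF byte
FCOE_TRAILER_LEN = 4     # EOF byte + 3 reserved bytes


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    parts = mac.split(":")
    if len(parts) != 6:
        raise ValueError(f"bad MAC address: {mac}")
    return bytes(int(p, 16) for p in parts)


def encapsulate_fc_frame(dst_mac: str, src_mac: str, fc_frame: bytes,
                         sof: int = 0x2E, eof: int = 0x41) -> bytes:
    """Wrap an opaque FC frame for transport over Ethernet (simplified sketch).

    sof and eof are placeholder byte values for the frame delimiters.
    """
    eth_header = mac_to_bytes(dst_mac) + mac_to_bytes(src_mac) + struct.pack("!H", FCOE_ETHERTYPE)
    fcoe_header = bytes(FCOE_HEADER_LEN - 1) + bytes([sof])   # zeroed version/reserved, then SOF
    fcoe_trailer = bytes([eof]) + bytes(FCOE_TRAILER_LEN - 1)  # EOF, then zeroed reserved bytes
    return eth_header + fcoe_header + fc_frame + fcoe_trailer


def decapsulate_fc_frame(frame: bytes) -> bytes:
    """Recover the FC frame after checking the EtherType."""
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != FCOE_ETHERTYPE:
        raise ValueError("not an FCoE frame")
    start = ETH_HEADER_LEN + FCOE_HEADER_LEN
    return frame[start:len(frame) - FCOE_TRAILER_LEN]


if __name__ == "__main__":
    fc_frame = bytes(36)  # stand-in for a small FC frame (header plus CRC, no payload)
    wire = encapsulate_fc_frame("0e:fc:00:00:00:01", "0e:fc:00:00:00:02", fc_frame)
    assert decapsulate_fc_frame(wire) == fc_frame
    print(len(wire), "bytes on the wire before the Ethernet FCS")
```

The point of the sketch is that the FC frame rides inside the Ethernet payload unchanged. Only the lowest physical layers are swapped out, which is why FCoE can share a link with TCP/IP and other Ethernet traffic.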

Figure 4: Separate physical data center I/O networks (interfaces and protocols) today

Figure 5: Ethernet-based unified, converged data center fabric or I/O network

Another component in the taxonomy of server, storage and networking I/O virtualization is the virtual patch panel, which masks the complexity of the adds, drops, moves and changes associated with traditional physical patch panels. For example, a new company leveraging a large installed base and taking mature technology into the future is OptiPath, originally launched as Intellipath. For large and dynamic environments with complex cabling requirements and the need to secure physical access to cabling interconnects, virtual patch panels are a great complement to IOV switching and virtual adapter technologies.

DCE versus IB: And The Winner is?

I do not see InfiniBand disappearing anytime soon, but all of the technology features and capabilities of IB will be at a disadvantage moving forward, given the mass market economies of scale for Ethernet, even with a higher-priced version of Ethernet. For those looking to deploy a unified or converged fabric today, InfiniBand-based solutions are an option, with the ability to bridge to existing Ethernet LANs or WANs along with Fibre Channel-based SANs. Rest assured, for those deploying InfiniBand-based unified, converged or data center fabrics today while waiting for data center Ethernet and its associated ecosystem (adapters, drivers, switches, storage systems) to evolve, your investment should be protected.

Ethernet is a popular option for general purpose networking and is moving forward with extensions to support FCoE and enhanced low-latency data center Ethernet. That eliminates the need to stack storage I/O activity onto IP, leaving IP as a good solution for spanning distance, for NAS or for low-cost iSCSI block-based access co-existing on the same Ethernet. Like it or not, getting Fibre Channel mapped onto a common Ethernet-based converged or unified network is a stepping stone, if not a compromise, between different storage and networking interfaces, commodity networks, experience and skill sets, along with performance or deterministic behavior. If nothing else, a converged Ethernet offers a path of least resistance, letting people migrate from their various comfort zones to the network on which IP has been built.

Near term, putting aside the pros and caveats raised by traditional storage professionals who have concerns about IP or networking and by converted storage professionals who favor IP, FCoE is a step forward. Marketing and fanfare aside, InfiniBand has some legs to stand on for now, but when factoring in business, economic, broad existing adoption and other factors, converged data center class Ethernet becomes a winner. IP is a contender on a longer-term basis beyond its current role of supporting iSCSI, NAS and Fibre Channel over distance (FCIP).

Vendors to Watch

Several vendors have announced initiatives, shown proofs of concept (technology demonstrations) or actually begun shipping IOV-enabling technology. For example, Brocade has announced its Data Center Fabric (DCF) initiative. Meanwhile, QLogic, NetApp and startup Nuova demonstrated a converged network architecture based on FCoE at the fall 2007 SNW in Dallas. Not to be outdone, Cisco has enhanced its InfiniBand line of switches and routers based on the technology acquired from Topspin, and QLogic has updated its SilverStorm-acquired InfiniBand lineup with announcements during the recent Supercomputing 2007 event.

Startup Woven has released a core edge low-latency, high-performance Ethernet switch to support data center class Ethernet deployments. Another startup, Xsigo, continues to gain momentum by deploying IOV solutions enabling virtual HBAs and virtual NICs for any-to-any access of Ethernet networks including IP-based storage as well as Fibre Channel-based SANs. Additional marketing names you can expect to hear more about include converged network adapter (CNA), converged network interface (CNI), service-oriented network architecture (SONA) and unified fabrics, among others.

Storage and I/O adapter, NIC, switch and network chip vendors to keep an eye on include, among others, Brocade, Chelsio, Cisco, Emulex, Intel, Mellanox, Neterion, NetXen, Nuova, OptiPath, QLogic, Voltaire, Woven and Xsigo, along with operating system, server and storage system vendors. Also keep an eye on industry trade groups and standards organizations, including ANSI T11, FCIA, FCoE, the InfiniBand Trade Association (IBTA) and PCI-SIG.

Wrapping Up For Now

To wrap up, virtual environments still rely on physical resources and infrastructure resource management to exist. Learn to identify the differences between the various approaches of virtual I/O operations and virtual I/O connectivity, along with their applicable benefit to your organization. As with other virtualization techniques and technologies, align the applicable solution to meet your particular needs and address specific pain points while being careful not to introduce additional complexity.

Greg Schulz is founder and senior analyst of the StorageIO group and author of “Resilient Storage Networks” (Elsevier).