The concept of parity-based RAID (levels 3, 5 and 6) is now quite old in technological terms, and the technology's limitations will become clear in the not-too-distant future; they are probably obvious to some users already. In my opinion, RAID-6 is a reliability Band-Aid for RAID-5, and going from one parity drive to two simply delays the inevitable.

The bottom line is this: disk density has increased far more than performance, and hard error rates haven't changed much, producing much longer RAID rebuild times and a much higher risk of data loss. In short, it's a scenario that will eventually require a solution, if not a whole new way of storing and protecting data.

We’ll start with a short history of RAID, or at least the last 15 years of it, and then discuss the problems in greater detail and offer some possible solutions.

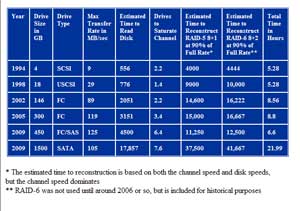

Some of the first RAID systems I worked on used RAID-5 and 4 GB drives. These drives ran at a peak of 9 MB/sec. These were, of course, not the first RAID systems, but 1994 is a good baseline year. The table below shows how the RAID rebuild picture has changed over the last 15 years.

Table: RAID reconstruction time, 1994-2009

A few caveats and notes on the data:

- Except for 1998, the number of drives needed to saturate a channel has increased over time. Some vendors did have 1 Gb Fibre Channel in 1998, but most did not.

- Rebuilding at 90 percent of the drive's full rate is often a best-case figure, and in many cases a rebuild must cover the whole drive, not just the data stored on it.

- The bandwidth figures assume that two channels of the fastest available type serve the 9 (RAID-5) or 10 (RAID-6) drives. So for 2009, I am assuming two 800 MB/sec channels are available to rebuild the 9 or 10 drives.

- The time to read an entire drive keeps increasing, because density gains outpace performance gains.

- Changes in the number of drives needed to saturate a channel, along with new technologies such as SSDs and pNFS, will affect channel performance and force RAID vendors to rethink the back-end design of their controllers.
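The rebuild arithmetic behind these caveats can be sketched as a simple bandwidth model. This is a minimal sketch under our own assumptions, not the method used to build the table: each drive gives the rebuild at most a fixed fraction of its streaming rate, and all drives in the group share the back-end channels evenly, so the smaller of those two limits sets the pace. The function names, and the 120 MB/sec drive in the usage example, are hypothetical.

```python
import math


def rebuild_time_seconds(capacity_bytes, drive_rate_bps, rebuild_fraction,
                         channel_rate_bps, channels, drives):
    """Seconds to rebuild one drive under a simple bandwidth model.

    Each drive can give the rebuild at most `rebuild_fraction` of its
    streaming rate, and the `drives` in the group share `channels`
    back-end channels evenly; the smaller limit sets the per-drive rate.
    """
    drive_limit = rebuild_fraction * drive_rate_bps
    channel_limit = channels * channel_rate_bps / drives
    return capacity_bytes / min(drive_limit, channel_limit)


def drives_to_saturate(drive_rate_bps, channel_rate_bps, channels):
    """Number of drives streaming at full rate needed to fill the channels."""
    return math.ceil(channels * channel_rate_bps / drive_rate_bps)


MB, GB, TB = 1e6, 1e9, 1e12

# 1994 baseline from the text: 4 GB drives at 9 MB/sec, rebuilt at 90 percent.
# The 1994 channel speed is not given, so the channel limit is disabled.
t_1994 = rebuild_time_seconds(4 * GB, 9 * MB, 0.9, math.inf, 2, 9)
print(f"1994: {t_1994 / 60:.1f} minutes")

# Hypothetical 1.5 TB drive at 120 MB/sec in a 10-drive RAID-6 group on the
# 2009 channels (2 x 800 MB/sec), at 90 and at 10 percent of disk bandwidth.
for frac in (0.9, 0.1):
    t = rebuild_time_seconds(1.5 * TB, 120 * MB, frac, 800 * MB, 2, 10)
    print(f"1.5 TB at {frac:.0%}: {t / 3600:.1f} hours")
```

In the hypothetical 2009 case the drive limit (108 MB/sec) is below the per-drive channel share (160 MB/sec), so the group is drive-bound; a group with more or faster drives would hit the channel limit first, which is the saturation effect the caveats describe.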

We all know that density is growing faster than bandwidth. A good rule of thumb is that each generation of drives improves bandwidth by 20 percent. The problem is that density is growing far faster, and has been for years. While density growth per generation might now be slowing from 100 percent to 50 percent or less, drive performance improvement is fairly fixed at about 20 percent per generation.
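The compounding effect can be made concrete. Assuming, as a simplification, that capacity grows by a fixed percentage per generation while streaming bandwidth grows by the 20 percent rule of thumb, the time to read a full drive is multiplied by (1 + density growth) / (1 + bandwidth growth) each generation. The helper below is hypothetical.

```python
def full_read_time_growth(density_growth, bandwidth_growth=0.20, generations=1):
    """Factor by which the time to read a whole drive grows.

    Read time is capacity / bandwidth, so each generation multiplies it by
    (1 + density_growth) / (1 + bandwidth_growth).
    """
    return ((1 + density_growth) / (1 + bandwidth_growth)) ** generations


for growth in (1.0, 0.5):
    per_gen = full_read_time_growth(growth)
    five_gen = full_read_time_growth(growth, generations=5)
    print(f"density +{growth:.0%} per generation: x{per_gen:.2f} per generation, "
          f"x{five_gen:.1f} after five")
```

Even at 50 percent density growth, full-drive read time grows by about a quarter each generation; the ratio only reaches 1 when density growth falls to the bandwidth growth rate.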

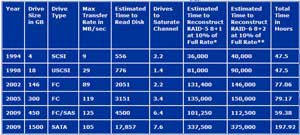

Using the sample data in the table above, RAID rebuild times have gone up more than 300 percent over the last 15 years. If we change the assumption from 90 percent to 10 percent of disk bandwidth, which might be the case if the device is heavily used with application I/O (thanks in part to the growing use of server virtualization), rebuild times get ugly quickly, as the table below shows.

Table: RAID rebuild for application I/O
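Ignoring channel limits, the cost of throttling a rebuild follows from the basic rebuild-time relation. With drive capacity C, streaming bandwidth B and the fraction f of that bandwidth available to the rebuild (our notation, not the article's), the rebuild time and the slowdown from 90 to 10 percent are:

$$
T(f) = \frac{C}{f\,B}, \qquad \frac{T(0.1)}{T(0.9)} = \frac{0.9}{0.1} = 9
$$

For the 1994 baseline (C = 4 GB, B = 9 MB/sec), that is roughly 494 seconds (about 8 minutes) at 90 percent versus roughly 4,444 seconds (about 74 minutes) at 10 percent.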

Hard Errors Grow with Density

The hard error rate for disk drives has not, for the most part, improved with density. For example, the hard error rate for 9 GB drives was 1 in 10^14 bits. That rate has improved by one order of magnitude, to 1 in 10^15 bits, for the current generation of enterprise SATA drives, and by two orders of magnitude, to 1 in 10^16 bits, for the current generation of FC/SAS drives. The problem is that drive densities have increased at a faster rate.


What this means for you is that even for enterprise FC/SAS drives, density is increasing faster than the hard error rate is improving. This is especially true for enterprise SATA, where density increased by a factor of about 375 over the last 15 years while the hard error rate improved only tenfold. The result is a less reliable RAID group, because the probability of hitting a hard error during a rebuild is higher.
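The link between density and hard errors can be sketched numerically. Treating the vendor specification of one error per N bits as an average rate (an assumption; real errors are not uniformly distributed), the expected number of hard errors in one full-drive read is the drive's capacity in bits divided by N, so exposure grows with density and shrinks only with error-rate improvement. The helper names are hypothetical, and pairing a 1.5 TB drive with the 10^15 enterprise SATA rate is illustrative.

```python
def expected_errors_per_full_read(capacity_bytes, bits_per_error):
    """Expected hard errors when reading a whole drive once, treating the
    vendor spec (1 error per `bits_per_error` bits) as an average rate."""
    return capacity_bytes * 8 / bits_per_error


def relative_error_exposure(density_factor, error_rate_improvement):
    """How much likelier a full-drive read is to hit a hard error after
    capacity grows by `density_factor` and the error rate improves by
    `error_rate_improvement`."""
    return density_factor / error_rate_improvement


# Enterprise SATA over 15 years, per the text: ~375x density, 10x error rate.
print(relative_error_exposure(375, 10))              # 37.5

# Illustrative: a 1.5 TB drive read end to end at 1 error per 10^15 bits.
print(expected_errors_per_full_read(1.5e12, 1e15))   # 0.012
```

Under these assumptions, a full read of a current enterprise SATA drive is roughly 37 times as likely to hit a hard error as a full read of a drive from 15 years ago.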


Think about it: only 8.88 PB of data has to move before you hit the hard error rate, and remember that these values are vendor specifications, numbers that vendors say you are unlikely to exceed. Consumer-grade SATA drives are scarier still and must be a consideration when talking to low-end RAID vendors who might use them. A single 8+2 rebuild with 1.5 TB drives reads and writes about 28.5 TB (read 1.5 TB × 9 drives, write 1.5 TB × 10 drives). That means that in a perfect world using vendor specifications, we can expect one out of every 312 rebuilds to fail with data loss today. Moving to next-generation 2 TB drives, that drops to one in every 234 rebuilds, and with 4 TB drives it drops to one in every 117.
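The rebuild arithmetic in this paragraph can be reproduced in a few lines. The sketch below follows the text's own accounting (data moved per 8+2 rebuild is the drive capacity read 9 times plus written 10 times, and 8.88 PB moved per hard error, in decimal units) and adds, as our own assumption, a Poisson approximation for the probability that a single rebuild hits at least one hard error. The function names are hypothetical.

```python
import math

TB = 1e12
PB = 1e15
BYTES_PER_HARD_ERROR = 8.88 * PB  # figure used in the text, taken as given


def bytes_per_rebuild(drive_bytes, read_drives=9, write_drives=10):
    """Data moved by one 8+2 rebuild, using the text's accounting:
    read 9 drives' worth and write 10 drives' worth."""
    return drive_bytes * (read_drives + write_drives)


def rebuilds_per_hard_error(drive_bytes):
    """Average number of rebuilds between hard errors at the spec rate."""
    return BYTES_PER_HARD_ERROR / bytes_per_rebuild(drive_bytes)


def p_rebuild_hits_error(drive_bytes):
    """Probability one rebuild hits at least one hard error, assuming errors
    arrive independently at the spec rate (Poisson approximation)."""
    return 1 - math.exp(-bytes_per_rebuild(drive_bytes) / BYTES_PER_HARD_ERROR)


for size_tb in (1.5, 2, 4):
    drive = size_tb * TB
    print(f"{size_tb} TB drives: 1 in {rebuilds_per_hard_error(drive):.0f} rebuilds, "
          f"p = {p_rebuild_hits_error(drive):.4f}")
```

For probabilities this small, the Poisson figure is almost exactly the reciprocal of the rebuild count, so the two ways of stating the risk agree.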

Some considerations:

- Hard error rates are not likely to improve much, given the cost of making drives more reliable.

- Given that the MTBF RAID vendors see is often half of what drive vendors report, hard error rates are worse than what drive vendors report once the drives are put into RAID disk trays, where the need for both high performance and dense packaging increases vibration and heat.

All of this means that the hard error rate is becoming a major factor in MTDL (Mean Time before Data Loss) during rebuilds, given the amount of data moved and the density of the drives.

OSD, Declustered RAID Might Help

Some vendors claim that file systems can address the storage reliability problem, but I don't buy that argument, given the latency inherent in large operating systems and file systems and the need for high performance (see File System Management Is Headed for Trouble and SSDs, pNFS Will Test RAID Controller Design). And running a software RAID-5 or RAID-6 equivalent does not address the underlying issues with the drives. Yes, you could mirror to get out of the disk reliability penalty box, but that does not address the cost issue.

The disk reliability density problem is getting worse, and fast. Some vendors are using techniques such as write logging (keeping track of writes on another disk during a rebuild so the rebuild can finish faster) to get around the growing problem. Will this solve the problem for the long term, or is it the equivalent of the RAID-5 to RAID-6 fix that just delayed the inevitable? Personally, I think it will turn out to be just another short-term fix. The real fix must be based on new technology such as OSD (object-based storage devices), where the disk knows what is stored on it and only has to read and write the objects being managed rather than the whole device, or on something like declustered RAID. In essence, the disk drive layer needs more knowledge of what is stored on it, or fixed RAID devices must be rethought. Or both.
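Why declustered RAID and object awareness help can be sketched with a back-of-envelope model. This is a simplified model of our own, not any vendor's implementation: stripes of k data units plus parity are spread uniformly across N drives, spare space is distributed across all drives, and each surviving drive therefore reads about k/(N-1) and writes about 1/(N-1) of the data being rebuilt, instead of one replacement drive absorbing every write. The `used_fraction` parameter stands in for an object-aware (OSD-style) layer that rebuilds only allocated data; the function names and the 2 TB drive at 100 MB/sec are hypothetical.

```python
def fixed_group_rebuild_hours(capacity_tb, drive_mb_s):
    """Fixed RAID group: the single replacement drive must be written end to
    end, so its bandwidth is the bottleneck (reads happen in parallel)."""
    return capacity_tb * 1e6 / drive_mb_s / 3600


def declustered_rebuild_hours(capacity_tb, drive_mb_s, data_units,
                              total_drives, used_fraction=1.0):
    """Declustered RAID, simplified: rebuild work is spread over all
    surviving drives. Each one reads ~data_units/(N-1) and writes ~1/(N-1)
    of the data being rebuilt. used_fraction < 1 models rebuilding only
    allocated data, as an object-aware layer could."""
    rebuilt_tb = capacity_tb * used_fraction
    per_drive_tb = rebuilt_tb * (data_units + 1) / (total_drives - 1)
    return per_drive_tb * 1e6 / drive_mb_s / 3600


fixed = fixed_group_rebuild_hours(2, 100)
declustered = declustered_rebuild_hours(2, 100, data_units=8, total_drives=100)
half_full = declustered_rebuild_hours(2, 100, data_units=8, total_drives=100,
                                      used_fraction=0.5)
print(f"fixed 8+2 group: {fixed:.2f} h")
print(f"declustered over 100 drives: {declustered:.2f} h")
print(f"declustered, half full: {half_full:.2f} h")
```

Under these assumptions the speedup over a fixed group is (N-1)/(k+1), here 99/9 = 11, and it grows as the pool gets wider; skipping unallocated space helps on top of that.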

Some technologies available today, and others on the way, could help alleviate the problem. The sooner they arrive, the better our chance of avoiding data loss.

Henry Newman, CTO of Instrumental Inc. and a regular Enterprise Storage Forum contributor, is an industry consultant with 28 years of experience in high-performance computing and storage.


For more on the future of RAID, see The Future of RAID Storage Technology
