Just The Facts, Ma’am

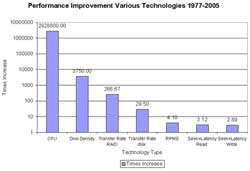

Let’s begin with a chart comparing a few areas of technology improvement over the last 30 years, ranging from CPU and disk density to transfer rates and latency:

Figure 1: Performance Improvement of Various Technologies, 1977 – 2005

Clearly, storage technology and performance have not kept pace with CPU performance, but storage is about more than just performance. Latency, the time for an action or request (such as an I/O or memory request) to complete, is also a big issue. John Mashey once said, “Money can buy you bandwidth, but latency is forever.”

The bottom line is that you can store more and process faster, but the time it takes to access the data has stayed about the same. That means it takes more and more hardware to keep up with the increase in CPU performance.

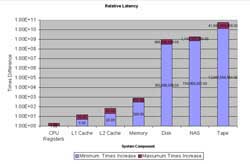

System performance is all about reducing latency. That is why, over the last 35 years, processor designers have developed many innovations to deal with latency throughout the system. In the 1970s, vectors for high-performance computers were developed to deal with the fact that CPUs were getting faster while memory performance was lagging. In the 1980s and 1990s, the concept of using caches to hide latency became commonplace. These caches (L1, L2 and L3) were used to pre-fetch data to be used by the CPU.

Today, we’re seeing techniques such as speculative pre-fetch and multi-threading in the processors to deal with the fact that memory performance has improved far less than CPU performance and made relative latency even higher. The key to having good CPU performance is to keep the memory pipeline full, but full of data that you need to process, not full of data that you don’t need, which is why speculative execution can sometimes be a problem.

Next we’ll look at a chart of the relative latency of various technologies:

Figure 2: Relative Latency

I could be off on one or more of these technologies for a product or two, but the point is that the difference in latency between CPU registers and disk is nine orders of magnitude. Now let’s look at the relative bandwidth differences for these same technologies:

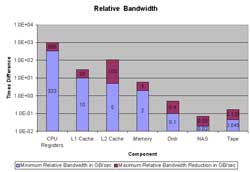

Figure 3: Relative Bandwidth

Here we’re talking about only four orders of magnitude difference in bandwidth between CPU registers and disk, and less than two between disk and memory. So the way I see it, storage performance is all about latency and the massive increase in relative latency over the last 30 years. To prove my point, let’s look at disk drives from 1976 and today and how many 4KB random I/Os each can do. At 4KB, the time per I/O is dominated by latency rather than by transfer time.

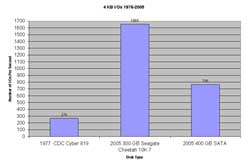

Figure 4: 4KB I/O from 1977 to 2005

You can see that the Cyber 819, the fastest drive in 1976, is about one-sixth the speed of the fastest drive today and about one-third that of SATA drives. Compare that to increases in CPU and even memory performance and you can see that we have a serious problem with technology mismatch. Even if the I/O sizes increase by a factor of four, we are still bound by the lack of improvement in the latency for storage technology.
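The reasoning behind the small-I/O comparison can be sketched with a simple service-time model: each random request pays an average seek, half a rotation, and then the transfer itself. The function name and all drive parameters below are hypothetical placeholders chosen for illustration; they are not the values behind Figure 4.

```python
def random_iops(avg_seek_ms, rpm, transfer_mb_s, request_kb=4):
    """Estimate random I/Os per second for one drive (simple service-time model)."""
    # Average rotational latency is half a revolution.
    rotational_ms = 0.5 * 60_000.0 / rpm
    # Time to move the payload once the head is in position.
    transfer_ms = (request_kb / 1024.0) / transfer_mb_s * 1000.0
    service_ms = avg_seek_ms + rotational_ms + transfer_ms
    return 1000.0 / service_ms


# Hypothetical drive parameters, for illustration only (not taken from Figure 4).
old_drive = random_iops(avg_seek_ms=16.0, rpm=3600, transfer_mb_s=3.0)
new_drive = random_iops(avg_seek_ms=3.5, rpm=15000, transfer_mb_s=100.0)
# Same modern drive, but with requests four times larger.
new_drive_16kb = random_iops(avg_seek_ms=3.5, rpm=15000, transfer_mb_s=100.0,
                             request_kb=16)

print(f"older drive, 4KB:   {old_drive:7.1f} IOPS")
print(f"modern drive, 4KB:  {new_drive:7.1f} IOPS")
print(f"modern drive, 16KB: {new_drive_16kb:7.1f} IOPS")
```

Under these assumed numbers, quadrupling the request size barely changes the I/O rate, because seek and rotational delay dominate the service time. That is the sense in which small I/Os are bound by latency rather than bandwidth.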

Now let’s look at the relative bandwidth per GB of storage for the same technologies. I recently was chastised by a number of people in the disk drive community for putting up a chart with this information. They made the point that storage densities have increased. This is all true. Areal density has improved dramatically over the last 30 years, but in my opinion this is not the issue. The issue is not areal density, but what the user sees in terms of a disk drive and a channel. No matter how dense the drives are, what matters is how fast you can access the data.

Figure 5: Relative Bandwidth per GB

So we are seeing that bandwidth per GB of storage is less than 1 percent of what it was 30 years ago. Combined with the latency problem, it is clear to me that we have some big storage technology issues to deal with, and at least in the short term, this is going to require some major re-thinking of storage and applications.
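Bandwidth per GB is simply a drive's sustained transfer rate divided by its capacity. The sketch below shows the arithmetic; the function names and both drive specifications are hypothetical placeholders, so the printed ratio is not expected to reproduce the figure quoted above.

```python
def bandwidth_per_gb(transfer_mb_s, capacity_gb):
    """MB/sec of sustained bandwidth available per GB stored."""
    return transfer_mb_s / capacity_gb


def full_scan_seconds(transfer_mb_s, capacity_gb):
    """Time to read the entire drive once at the sustained rate."""
    return capacity_gb * 1024.0 / transfer_mb_s


# Hypothetical drives, for illustration only (not the data behind Figure 5).
old_mb_s, old_gb = 3.0, 0.5
new_mb_s, new_gb = 100.0, 300.0

old_ratio = bandwidth_per_gb(old_mb_s, old_gb)
new_ratio = bandwidth_per_gb(new_mb_s, new_gb)

print(f"older drive:  {old_ratio:.3f} MB/sec per GB, "
      f"full scan in {full_scan_seconds(old_mb_s, old_gb):.0f} s")
print(f"modern drive: {new_ratio:.3f} MB/sec per GB, "
      f"full scan in {full_scan_seconds(new_mb_s, new_gb):.0f} s")
print(f"modern / older: {new_ratio / old_ratio:.1%}")
```

The full-scan time is the same quantity seen from the other direction: when capacity grows much faster than transfer rate, reading everything on the drive takes far longer than it used to.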

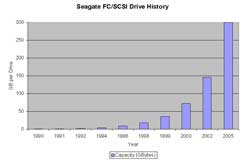

One of the base technologies that we all deal with is Fibre Channel drives. The following chart shows the history of Seagate SCSI and Fibre Channel drives:

Figure 6: Seagate Fibre Channel and SCSI Drive History

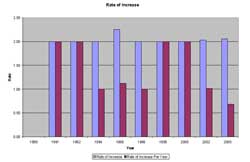

Looking purely at history, the rate of increase in storage density appears sound, but let’s look at the rate of density increase by year:

Figure 7: Rate of yearly increase in storage density

As you can see, the rate of increase per year has slowed dramatically since the early 1990s.
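One way to turn a capacity history into a yearly rate of increase is to compute the compound annual growth rate between consecutive data points. This is a minimal sketch of that calculation, not necessarily the method used for Figure 7; the function name and the sample history are hypothetical, not actual Seagate figures.

```python
def annual_growth_rates(points):
    """Compound annual growth rate between consecutive (year, capacity_gb) points.

    `points` must be sorted by year. Returns (start_year, end_year, rate)
    tuples, where a rate of 0.5 means 50 percent growth per year.
    """
    rates = []
    for (y0, c0), (y1, c1) in zip(points, points[1:]):
        years = y1 - y0
        rate = (c1 / c0) ** (1.0 / years) - 1.0
        rates.append((y0, y1, rate))
    return rates


# Hypothetical capacity history, for illustration only.
history = [(1990, 1.0), (1995, 9.0), (2000, 73.0), (2005, 300.0)]

rates = annual_growth_rates(history)
for start, end, rate in rates:
    print(f"{start}-{end}: {rate:.0%} per year")
```

A capacity curve can keep climbing steeply in absolute terms while the per-year rate falls, which is why the two charts tell different stories.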

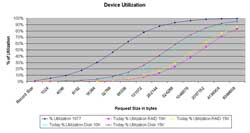

Applications are going to need to change to make up for some of these storage performance issues. In this chart, we look at the utilization of a single disk drive in 1976 and of a 400 MB/sec RAID and a single disk drive today, assuming that every I/O request incurs an average seek plus average latency. I charted the percentage utilization of each device against various request sizes.

Figure 8: Device utilization

Clearly, you need to make large requests to get good utilization, and the alternative to these large requests is having more disk drives. Some RAID vendors will argue that cache eliminates much of this problem, but I do not agree (please see Let’s Bid Adieu to Block Devices and SCSI).
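The utilization calculation described above can be sketched as the fraction of each request's service time spent actually transferring data, with the rest lost to seek and latency. The 400 MB/sec RAID rate comes from the text. The function name, the seek and latency values, and the single-drive bandwidth are assumptions made for illustration.

```python
def utilization(request_kb, avg_seek_ms, avg_latency_ms, bandwidth_mb_s):
    """Fraction of service time spent transferring data for one request."""
    transfer_ms = (request_kb / 1024.0) / bandwidth_mb_s * 1000.0
    return transfer_ms / (avg_seek_ms + avg_latency_ms + transfer_ms)


# (name, avg seek ms, avg latency ms, bandwidth MB/sec)
# The seek, latency, and single-drive bandwidth values are hypothetical.
devices = [
    ("single drive", 3.5, 2.0, 100.0),
    ("400 MB/sec RAID", 3.5, 2.0, 400.0),
]

for name, seek_ms, latency_ms, mb_s in devices:
    print(name)
    for request_kb in (4, 64, 1024, 16384):
        pct = 100.0 * utilization(request_kb, seek_ms, latency_ms, mb_s)
        print(f"  {request_kb:>6} KB request: {pct:5.1f}% utilized")
```

Because the positioning cost per request is fixed, a faster device needs proportionally larger requests to reach the same utilization, so the RAID fares worse than the single drive at every request size in this model.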

Trouble Ahead

I think storage is headed for trouble for a number of reasons. Consider the following:

- Storage technology latency has not kept pace with CPU or memory performance.

- Storage technology bandwidth has not kept pace with memory bandwidth.

- Storage bandwidth improvements are better than latency improvements, but still not very good.

- Storage density is the closest to matching CPU performance increases, but is still orders of magnitude behind and still lacks latency and bandwidth improvements.

None of these storage issues is going to change anytime soon. So this leads me to the conclusion that our jobs as storage professionals are going to get much harder. Without change to the underlying technology, storage budgets are going to have to increase to keep pace with the demand for I/O. At least that’s the way I see it.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 25 years of experience in high-performance computing and storage.
