What’s an IO worth to you? Is it worth more than a gigabyte? Less? That’s a hard question for many IT and business professionals to even begin to consider, yet we often see it bandied about. The question certainly has merit; it just isn’t easily understood.

The fact is that few IT professionals realize how often IO plays into application performance, or how much IO they really need behind every application. Such quests are typically left to storage experts, and when it comes to performance, the industry has seldom equipped the storage expert with much better than rules of thumb, current performance figures from systems that may be riddled with bottlenecks, and magical guesstimates.

Yet the relationship between applications and storage performance is the critical foundation underlying the usefulness of applications ranging from in-the-microsecond decision support systems to time-consuming computational design systems. Behind such applications, minor differences in IO latency or throughput can cascade into wasted time day in and day out, missed revenue, missed goals, blown schedules, competitive inadequacy, lost customers, or any number of other seriously bad outcomes.

For the first time in decades, we’re seeing good hope for better storage solutions, and the impact on businesses stands to be enormous. That’s because there is a huge cost hidden in how today’s storage technologies are used to deliver performance, and better technologies will dramatically alter the costs of many parts of the storage infrastructure. That makes understanding these costs, and how they might be altered, more important than ever. We’ll take a look at how big the cost of performance is, and with that understanding in mind, we’ll look at two examples of new solutions. What they suggest is a new way to get cost-effective performance inside the data center walls.

The storage practitioner lives and breathes in a storage industry where the storage of data is at odds with the use of data, and it comes at a serious cost. The storage industry has long suffered from a legacy of mismatched data delivery capabilities. Applications depend upon rotational disk storage, and while rotational disk has driven the cost of capacity to phenomenally low price points, it comes at a tremendous price: the enormous total cost of performance. The clearest picture is found in how the cost of both capacity and performance has changed over time.

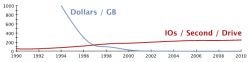

Figure 1: Improvements to Capacity and IO Performance Since 1990

The cost of capacity has improved as rotational drive media has taken exponential leaps forward in areal density – the amount of data stored per square inch. But the performance of individual drives has barely improved. For disk media, this is little wonder. Faced with explosively growing data, rotational disk capacity improvement has been relatively easy, while driving more performance into rotational media is bound by the practical limits of physics; things can only turn and twitch so fast. But this trend has played out on a far greater playing field than the disk spindle. Multi-million or billion dollar industries have sprouted up from capacity-oriented deduplication, compression, HSM, and more. The capabilities of today’s media are aligned with capacity, and at odds with what they cost for performance. Table 1 illustrates this point.

Table 1: Per IO Cost of Media

The IO cost of different media. Taneja Group estimates for the typical costs of IO for different media types given their list prices in common enterprise systems today.

| Media | Cost per IO |
| --- | --- |
| Rotational Disk | $3 / IO |
| Flash | $.35 / IO |
| DRAM | Less than $.01 / IO |

While storage arrays have seemed to march ahead in performance capabilities, advancements have been incremental compared to capacity improvements. The problem is that every performance advancement in this $12B industry must at the end of the day come down to working with IO from rotational disk, even when faster media may sometimes be incorporated. Moreover, caching controller architectures have too often put the storage engineer in the position of designing performance two or three times, with a fixation on the constraints and costs of both the storage controller and the slowest media tier in focus. Caching is good only as long as a working data set fits within cache. Today’s data sets are outgrowing caches on a regular basis, and this means the performance engineer may well need a plan for delivering IO from disk when the capabilities of cache are inevitably exhausted. A storage system built to handle 50,000 IOPS beneath a demanding set of applications is often designed for both storage controller and disk capabilities, and results in out-of-control costs such as those in Table 2.

Table 2: Estimated Costs of Designing for 50,000 IO/s

Gross estimates of $/IOP in a performance configured midrange array. Using rules of thumb we’ve grossly estimated what the typical components of a midrange array cost when configured to deliver maximum performance from each media, which is often the practice today.

| Item | IO Capability | Costs |
| --- | --- | --- |
| Storage Controllers and System Costs | 50,000 IOs | $100,000 |
| Rotational Disk Costs (250) | 50,000 IOs | $125,000 |
| A few flash drives | 50,000 IOs | $60,000 |
| Total | 50,000 IOs+ | $285,000 |
| Cost per IO | | $5.70/IO |
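The arithmetic behind Table 2 can be checked with a short sketch. The component figures are copied from the table; the dictionary and function names are illustrative only, and the calculation assumes that the cost per IO is simply the total acquisition cost divided by the 50,000 IO design target.

```python
# Component figures copied from Table 2 (rule-of-thumb estimates, in dollars).
COMPONENT_COSTS = {
    "Storage controllers and system costs": 100_000,
    "Rotational disks (250)": 125_000,
    "A few flash drives": 60_000,
}
DESIGN_IOPS = 50_000  # the IO rate the whole system is built to deliver


def total_cost(component_costs):
    """Sum the acquisition cost of every component."""
    return sum(component_costs.values())


def cost_per_io(component_costs, iops):
    """Total acquisition cost divided by the IO rate it was bought to deliver."""
    return total_cost(component_costs) / iops


if __name__ == "__main__":
    print(f"Total acquisition cost: ${total_cost(COMPONENT_COSTS):,}")
    print(f"Cost per IO: ${cost_per_io(COMPONENT_COSTS, DESIGN_IOPS):.2f}")
```

Note that each component is sized for the full 50,000 IOs on its own, so the costs add up while the deliverable IO does not. That is the "designing performance two or three times" problem expressed in numbers.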

This storage system might make use of IO efficient DRAM in the controllers, but for the IO driven application, it leverages performance in the wrong way. The costs illustrated in Table 2 are not even the worst case, as exceeding the capabilities of such a midrange array makes the picture even worse. Adding more arrays will bring with it expensive switches and more complex infrastructures, while the more powerful controllers and caches of enterprise class arrays will start at a much higher $/IOP figure. The mismatch comes with a huge capability and cost of acquisition penalty that can get worse over time, but it doesn’t stop there. It also comes with tremendous and unnecessary operational costs.

The Costs of Owning Performance

It is too often overlooked that buying performance in this inefficient way has costs beyond simply acquiring too many parts in the form of disks and controllers. There is enormous cost bound up in the space, power, cooling, service and management of such systems over time. Moreover, these costs are exacerbated by the over-provisioning typical of rotational disk systems under high performance demands, a practice where excess disk capacity is provisioned to get more IO-generating spindles, and even to enhance spindle IO through techniques like short-stroking disks. Where excess disks are provisioned, operational costs increase nearly linearly in every dimension. Excess disks increase power and cooling costs, while consuming additional data center space. Additional disks increase the likelihood of failure, driving up protection costs and increasing service costs, time, and effort. They also directly increase the time and effort involved in managing a system more prone to component failures. In our example of a performance-driven 50,000 IO/s rotational-disk-based system, the supporting infrastructure, resource consumption, and human time and effort can add many thousands of dollars in cost per month. Consider that during the three- to five-year lifecycle of performance-oriented storage systems, ownership costs can easily exceed the acquisition costs in terms of cost per IO.
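To see how monthly operating costs compare with acquisition costs over a lifecycle, consider the rough sketch below. The acquisition cost and IO target come from Table 2. The monthly operating cost of $6,000 is a hypothetical placeholder, since the text says only "many thousands of dollars" per month, and the function names are illustrative.

```python
ACQUISITION_COST = 285_000      # Table 2 total, in dollars
DESIGN_IOPS = 50_000            # Table 2 design target
MONTHLY_OPERATING_COST = 6_000  # HYPOTHETICAL placeholder, not a figure from the article


def ownership_cost(monthly_cost, years):
    """Operating cost accumulated over a lifecycle of the given length."""
    return monthly_cost * 12 * years


def break_even_monthly_cost(acquisition_cost, years):
    """Monthly operating cost at which ownership cost equals acquisition cost."""
    return acquisition_cost / (12 * years)


def burdened_cost_per_io(acquisition_cost, monthly_cost, years, iops):
    """Acquisition plus ownership cost, divided by the design IO rate."""
    return (acquisition_cost + ownership_cost(monthly_cost, years)) / iops


if __name__ == "__main__":
    for years in (3, 5):
        owned = ownership_cost(MONTHLY_OPERATING_COST, years)
        burdened = burdened_cost_per_io(
            ACQUISITION_COST, MONTHLY_OPERATING_COST, years, DESIGN_IOPS
        )
        threshold = break_even_monthly_cost(ACQUISITION_COST, years)
        print(
            f"{years} years: ownership ${owned:,}, "
            f"burdened ${burdened:.2f}/IO, "
            f"break-even at ${threshold:,.2f}/month"
        )
```

With this placeholder input, ownership cost stays below acquisition cost over three years and exceeds it over five. The break-even function shows the monthly figure at which the two are equal for any lifecycle length.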

Turning New Media Into Effective Storage Performance

The cost consequences of this mismatch of rotational disk with today’s performance demands become clear in this brief survey. But the technological shortfalls of our reliance on rotational media turn the story into an absurd travesty when the performance of other media is considered. While we have looked at the IO cost of different media types today (in Table 1), state-of-the-art technologies are coming onto the market now that are turning these different media types into permanent storage. It is possible to harness these technologies in systems that deliver as much as 150,000 IOs per 1.75″ rack unit. But traditionally, high performance solid state systems have come at a technological disadvantage compared to traditional storage. They have too often come with fixed capacity and performance that may lead to appliance sprawl. They have often required specialized integration time and effort, as they may not hold an entire data set. Moreover, with changing business demands, they can easily require a dedicated expert just to constantly retune them. They usually lack a full complement of storage features, look distinctly different from enterprise storage, and may complicate management and protection. When considering such disadvantages, the total cost of ownership (TCO) for these systems may exceed even that of rotational disk.

Fortunately, better promises are on the horizon. We think 2011 may prove to be a revolutionary year for storage performance. Let’s take a look at two solutions that represent what we think are significant breakthroughs in storage performance, not because of the level of performance they deliver, but because of the architecture with which they deliver performance. Make no mistake. We are excited by new media such as SSD, but we are looking for distinctly new architectures that extend performance beyond the limitations of an array controller, and provide a single foundation on which performance can be scaled. We are looking for all of this in a solution that does not alter the practice of storage inside the data center walls.

The first example is Kaminario. Kaminario recently introduced a bladed, scale-out DRAM-based storage system, and it claims to deliver approximately 150,000 IOs per node, in configurations that range from 150,000 IOs up to a tested configuration of 1.5M IOs. DRAM is used as real storage, and not just cache. In use, the Kaminario K2 system uses auto-tuning algorithms to dynamically balance data and IO access requests across all nodes in the system, making it entirely unnecessary to manually manage system loads and ensuring that maximum performance is available to any system that needs it. This auto-tuning is constantly in action, and it does away with huge costs of constantly tuning legacy performance solutions. Moreover, Kaminario wraps the K2 with a unique integration of disk that is used to turn the DRAM-based storage system into a permanent storage system with at least as much resilience and data protection as today’s best enterprise arrays. Kaminario’s Automated Data Distribution harnesses the capabilities of every node. It simultaneously uses redundancy across all nodes as well as what the company calls Self Healing Data Availability to actually increase resiliency and availability with scale. Capacity is fully separated from performance and IO ports (FC) so that each can be scaled independently. The system always makes use of parallelized IO across all nodes. That parallelized IO is maintained as nodes are added.

What is important about this storage performance innovation? There have been few performance-oriented solutions that promise the equivalent of unlimited performance, scale-out capacity, and the appearance and function of regular storage. The alternatives have been hairy in comparison, typically consisting of appliances that don’t scale, may come with very limited features, and may require highly specialized skillsets. Kaminario represents two breakthroughs. First, it gives the enterprise the densest, highest performance possible for maximum cost effectiveness in terms of cost per IO. Second, it eliminates the issue of performance appliance sprawl and gives enterprises a single scale-out system that looks and acts like traditional storage. This adds up to serious TCO impact: it dramatically alters the total cost per IO in terms of both acquisition costs and costs of ownership. At scale, the costs per IO of a Kaminario K2 look to be well under $1/IO.

Table 3: Kaminario - Comparative Costs per IO

Taneja Group estimates for cost per IO from Kaminario K2 storage, compared to the cost per IO from traditional storage.

| Cost Dimension | Traditional System | Kaminario K2 |
| --- | --- | --- |
| IO | $5+ / IO | $.33 / IO |
| Cost of Ownership at scale (500k IO) | $5+ / IO | Negligible, single system for all performance requirements, traditional storage appearance, better than enterprise availability. |

An entirely different example is Alacritech’s recently announced ANX Series NAS accelerators. Using 4TB of internal SSD and Alacritech’s unique IP acceleration technology, the ANX sits in front of NFS file servers as a read-only caching accelerator. As a read-only cache, it never interferes with the write stream, but rather it offloads the read traffic that makes up 70 percent to 90 percent of the load on most file servers. This, in turn, frees up file server processors to handle the remaining mission-critical write traffic, and manage the rotational disks on which data is permanently stored. The 4TB of SSD within the ANX can be transparently inserted into an infrastructure and shared across multiple file servers until ANX performance is saturated, at which point the business can choose to add more ANX accelerators to further scale its performance.

Similar to the Kaminario K2, the Alacritech ANX allows businesses to effectively sever the relationship between capacity and performance, scale each dimension independently without alterations in IT practices, and fundamentally change their total IO footprint without sprawl and management overhead. Since ANX is transparent, the storage infrastructure looks and acts like it always has, but with a fundamental shift in the total IO available. With a single filer, if the ANX triples or quadruples available IO, then it has significantly reduced the cost per IO of the environment, perhaps to a third of the original cost per IO (accounting for the cost of the ANX). If this happens across multiple filers, an organization can reduce cost per IO to a fraction (it is easy to imagine 1/10th or less) of the original cost. When the cost of the appliance is considered against this added IO capability, the cost of the additional IO is easily under $1/IO as well.

Table 4: Alacritech ANX - Comparative Costs per IO

Taneja Group estimates for how Alacritech ANX impacts the cost per IO for a 500,000 IO system compared to the costs of building similar performance with traditional storage.

| Cost Dimension | Traditional System | Alacritech ANX |
| --- | --- | --- |
| IO | $5+ / IO | Without adding a new primary system, reduces existing cost of IO to 1/3rd to 1/10th of original or less (typically $.50 – $1.75/IO) |
| Cost of Ownership at scale (500k IO) | $5+ / IO | Negligible – no addition to cost of management, minimal impact on data center footprint, no alteration in storage practices. |
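The ANX discussion distinguishes two figures: the blended cost per IO of the whole environment once the accelerator is added, and the cost of just the additional IO. A minimal sketch of both follows. Every dollar input here is an assumption for illustration: the filer figures reuse the Table 2 midrange example, the $75,000 accelerator price is hypothetical and not an Alacritech list price, and the function names are invented for this example.

```python
FILER_COST = 285_000       # ASSUMPTION: reuses the Table 2 total as the existing system cost
FILER_IOPS = 50_000        # ASSUMPTION: reuses the Table 2 design target
ACCELERATOR_COST = 75_000  # HYPOTHETICAL price, chosen only to make the arithmetic concrete


def blended_cost_per_io(filer_cost, filer_iops, accel_cost, io_multiplier):
    """Cost per IO of the whole environment after the accelerator is added."""
    return (filer_cost + accel_cost) / (filer_iops * io_multiplier)


def marginal_cost_per_io(filer_iops, accel_cost, io_multiplier):
    """Cost per IO of only the IO that the accelerator adds."""
    if io_multiplier <= 1:
        raise ValueError("io_multiplier must be greater than 1 to add any IO")
    added_iops = filer_iops * (io_multiplier - 1)
    return accel_cost / added_iops


if __name__ == "__main__":
    baseline = FILER_COST / FILER_IOPS
    print(f"Baseline: ${baseline:.2f}/IO")
    for multiplier in (3, 4):  # "triples or quadruples available IO"
        blended = blended_cost_per_io(
            FILER_COST, FILER_IOPS, ACCELERATOR_COST, multiplier
        )
        marginal = marginal_cost_per_io(FILER_IOPS, ACCELERATOR_COST, multiplier)
        print(
            f"x{multiplier}: blended ${blended:.2f}/IO, "
            f"additional IO ${marginal:.2f}/IO"
        )
```

The blended figure always sits slightly above the baseline divided by the multiplier, because the accelerator's price is added to the numerator. How close it comes to "a third of the original" depends entirely on the assumed accelerator price.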

It’s All About the Architecture

The disruption we’re seeing in performance capabilities is all about architecture, and it extends the cost benefits beyond the cost of acquisition. Speed is one thing, but coupling speed with a system architecture that preserves the existing practice of storage is an altogether different creature. This will deliver a distinctly different cost of ownership than deploying limited performance appliances, changing application architectures, or the cost-ineffective strategy of deploying expensive SSD behind limited performance array controllers. What does it take to deliver a breakthrough system architecture? It doesn’t seem like much: look and act like storage; be scalable enough to avoid creating many separate performance silos or islands; enhance cost effectiveness by working alongside the capacity-oriented storage infrastructure that is already in place. But building such capabilities around storage media that has historically never been designed as robust permanent storage has been a challenge. Systems that do so will make performance possible with very little overhead from cost or complexity. In fact, they may even reduce operational costs by relieving the organization of the ongoing management that is required to carefully monitor rotational disk systems when they are pushed to their limits. The next-generation products we are now seeing will create distinctly different ownership costs per IO, and they will pair those ownership costs with the superior costs of better media. The total costs of such solutions may significantly alter your bottom line (see Table 5). With those promises and new hope on the horizon in the form of real products, it is time to start thinking about your strategy for meeting your storage performance demands.

Table 5: Fully Burdened Costs per IO

Taneja Group estimates for the fully burdened costs per IO in typical midrange performance-built systems when the cost of operating the system through its lifecycle is factored in. Next generation systems will not only unlock more efficient acquisition costs, but also shake off the management and resource consumption burdens of big disk systems.

| System Type | Fully Burdened Cost per IO |
| --- | --- |
| Rotational Disk Systems | Greater Than $10/IO |
| Next generation architectures | Less than $1/IO |

Jeff Boles is sr. analyst/director, Validation Services, with storage analyst firm Taneja Group.