Recently, some of the major HBA vendors have released drivers for some operating systems that provide failover within the device driver. Combine this with the fact that some RAID

To help sort out those choices, let’s start by looking at the hardware and how it works. The RAID is likely the most important component to understand because it has the most options and issues for failover.

How Hosts View and Access the LUNs

RAID controllers generally have two different characteristics for access to the LUNs:

- Active/Active

- Active/Passive

Higher-end and enterprise controllers are always Active/Active. Mid-range and lower-end controllers can be either. How the controller manages internal failover, together with your server-side software and hardware, will have a great deal to do with your choices for accomplishing HBA and switch failover. Before developing a failover or multipathing architecture, you need to fully understand the issues with the RAID controller.

With Active/Active controllers, all LUNs are seen and can be written to by any controller within the RAID. Generally, with these types of RAID controllers, failover is not a problem, since the host can write or read to any path. Basically, all LUN access is equal, and load balancing I/O requests and access to the LUNs in case of switch or HBA failover is simple. All you have to do is write to the LUN from a different HBA path.
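To make the Active/Active case concrete, here is a minimal sketch of round-robin path selection with failover. The class name, method names, and path labels (`hba0`, `hba1`) are hypothetical and are not taken from any vendor's driver; the sketch only assumes, as described above, that every healthy path can reach every LUN.

```python
class ActiveActiveSelector:
    """Round-robin over healthy paths; any path can serve any LUN."""

    def __init__(self, paths):
        self.paths = list(paths)   # hypothetical path labels, e.g. one per HBA
        self.failed = set()
        self._next = 0

    def mark_failed(self, path):
        # Called when an HBA or switch failure is detected on this path.
        self.failed.add(path)

    def pick(self):
        # Load balance across whatever paths are still healthy.
        healthy = [p for p in self.paths if p not in self.failed]
        if not healthy:
            raise RuntimeError("no healthy path to the LUN")
        path = healthy[self._next % len(healthy)]
        self._next += 1
        return path


selector = ActiveActiveSelector(["hba0", "hba1"])
before_failure = [selector.pick() for _ in range(4)]
selector.mark_failed("hba0")
after_failure = [selector.pick() for _ in range(2)]
```

Because LUN access is equal on every path, failover here is nothing more than dropping the failed path from the rotation.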

Active/Passive Increases Complexity

If your RAID controller is active/passive, the complexity for systems that require HBA failover can increase greatly. With active/passive controllers, the RAID system is generally arranged as a controller pair where both controllers see all the LUNs, but each LUN has a primary path and a secondary path for access. If the LUN is accessed via the secondary path, the ownership of the LUN changes from the primary path to the secondary path.

This is not a problem if the controller has failed. It is a problem if only the path to the controller has failed (either the HBA or the switch) while other hosts are still accessing that LUN via its primary path. Each time one of the other hosts accesses the LUN on the primary path, ownership of the LUN moves from the secondary path back to the primary path. Then when the LUN is again accessed on the secondary path, the LUN fails over again to the secondary path. This ping-pong effect will eventually cause the performance of the LUN to drop dramatically.
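The ping-pong effect can be illustrated with a small model of LUN ownership. This is a sketch under simplifying assumptions: the controller names `"A"` and `"B"` and the helper `count_ownership_changes` are hypothetical, and a real controller may add delays or thresholds before it moves a LUN.

```python
class ActivePassiveLun:
    """A LUN whose ownership follows the controller that last accessed it."""

    def __init__(self, owner="A"):
        self.owner = owner
        self.ownership_changes = 0

    def access(self, controller):
        # Access through the non-owning controller forces an ownership change.
        if controller != self.owner:
            self.owner = controller
            self.ownership_changes += 1


def count_ownership_changes(access_sequence, initial_owner="A"):
    """Replay a sequence of controller accesses and count ownership moves."""
    lun = ActivePassiveLun(initial_owner)
    for controller in access_sequence:
        lun.access(controller)
    return lun.ownership_changes


# Host 1 lost its path to controller A and uses B; host 2 still uses A.
interleaved = count_ownership_changes(["B", "A"] * 5)
# Both hosts reach the LUN through its primary controller A.
all_primary = count_ownership_changes(["A"] * 10)
```

In the interleaved case every one of the ten accesses moves the LUN, while the all-primary case never moves it, which is why keeping every host on the owning controller matters.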

Host-Side Failover Options

On the host side, there are three options for HBA and switch failover, and in some cases, depending on the vendor, load balancing of I/O requests across the HBAs. Here they are in order of hierarchy in the operating system:

- Volume manager and/or file system failover

- Loadable failover and/or load-balancing driver

- HBA driver failover

Each of these has advantages and disadvantages. What they are depends on your situation and on the hardware and software in your configuration.

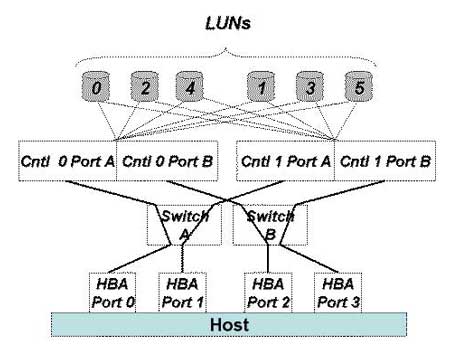

In the drawing below, we have an example of a mid-range RAID controller connected in an HA configuration with dual switches and HBAs, and with a dual-port RAID controller for both Active/Active and Active/Passive.

Figure 1: An example of an active/active RAID controller.

With an Active/Active RAID controller configuration, the failover software knows the path to each of the LUNs and ensures that it will be able to get to the LUN through the appropriate path. With this Active/Active configuration, you could access any of the LUNs via any of the HBAs with no impact on the host or another host, and both controllers can equally access any LUN.

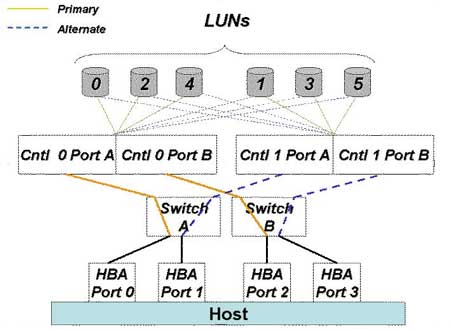

Figure 2: An example of an HA active/passive RAID controller.

If this were an Active/Passive RAID controller, it would be critical to keep accessing LUNs 0, 2 and 4 through primary controller A even if a switch or HBA failed. You would only want to access LUNs 0, 2 and 4 from controller B if controller A itself failed. If a port on controller A failed, you would want to access the LUNs via the other switch and the other port on controller A, not via controller B. If you did access them via controller B, and another host accessed the LUNs via controller A, the ownership of the LUNs would ping-pong and the performance would plummet.
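The preference order described above (any surviving path to the primary controller first, the secondary controller only as a last resort) can be sketched as follows. The `Path` type, the `choose_path` function, and the HBA and switch labels are all hypothetical, and the four-path layout is an assumed topology rather than a transcription of Figure 2.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Path:
    hba: str
    switch: str
    controller: str
    healthy: bool = True


def choose_path(paths: Iterable[Path], primary: str) -> Optional[Path]:
    """Prefer any healthy path to the primary controller before failing over."""
    healthy = [p for p in paths if p.healthy]
    on_primary = [p for p in healthy if p.controller == primary]
    if on_primary:
        return on_primary[0]
    # Only when no path reaches the primary controller do we accept the
    # ownership change that comes with using the secondary controller.
    return healthy[0] if healthy else None


paths = [
    Path("hba0", "switch0", "A", healthy=False),  # hba0 or switch0 has failed
    Path("hba1", "switch1", "A"),
    Path("hba0", "switch0", "B", healthy=False),
    Path("hba1", "switch1", "B"),
]
chosen = choose_path(paths, primary="A")
```

Here `chosen` stays on controller A through the surviving HBA and switch, so other hosts that still use controller A do not trigger an ownership change.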

Volume Manager and File System Options

Volume managers such as Veritas VxVM, and file systems such as ADIC StorNext and those from a number of Linux cluster file system vendors, understand and are able to maintain multiple potential paths to a LUN. These types of products are able to determine what the appropriate path to the LUN should be, but for Active/Passive controllers it is often up to the administrator to determine the correct path(s) for accessing the LUNs without unnecessarily failing them over to the other controller.

Failover at this layer was the initial type of HBA and storage failover available for Unix systems. Failover at the file system layer allows the file system itself to understand the storage topology and load balance across it. On the other hand, you could be doing a great deal of work in the file system that might belong at lower layers, which have more information about the LUNs and the paths. Volume manager and file system multipathing also support HBA load balancing.

Loadable Drivers

Products such as EMC PowerPath and Sun Traffic Manager are examples of loadable drivers that manage HBA and switch failover. You need to make sure that the hardware you plan to use with these types of drivers is supported.

For example, according to the EMC Web site, EMC PowerPath currently supports only EMC Symmetrix, EMC CLARiiON, Hitachi Data Systems (HDS) Lightning, HP XP (Hitachi OEM) and IBM Enterprise Storage Server (Shark). According to Sun’s Web site, Sun Traffic Manager currently supports Sun Storage and Hitachi Data Systems (HDS) Lightning.

Other vendors are developing products that will provide similar functionality. As with the volume manager and file system method for failover, loadable drivers also support HBA load balancing as well as failover.

HBA Driver Failover

HBA drivers on some systems provide the capability for the driver to maintain and understand the various paths to the LUNs. In some cases, this failover works only for Active/Active RAIDs, and in other cases, depending on the vendor and the system type, it works for both types of RAIDs (Active/Active and Active/Passive). Since HBA drivers often recognize link failures and link logins faster than other methods, using this failover mechanism generally allows for the fastest resumption of I/O, since the lowest level has the most direct knowledge of the link state.

Conclusions

Each failover mechanism can be tuned to improve the performance of the system, but the most important issue is to determine which products work with which systems and OS releases and with which RAID hardware. If you have a heterogeneous environment, as is becoming more common, you need to develop a matrix. It is very likely that you will need to have different failover software for different machine types.
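One way to keep such a matrix is as a simple lookup table. The sketch below is illustrative only: the OS and RAID names (`os-a`, `raid-x`, and so on) and the support entries are placeholders, not real vendor support data, and the function names are hypothetical. Real entries have to come from each vendor's current support documentation.

```python
# Placeholder matrix: (OS release, RAID model) -> supported failover methods.
# None of these entries describe real products.
SUPPORT_MATRIX = {
    ("os-a", "raid-x"): {"volume_manager", "loadable_driver"},
    ("os-a", "raid-y"): {"hba_driver"},
    ("os-b", "raid-x"): {"loadable_driver"},
}


def supported_methods(os_release, raid_model):
    """Failover methods listed for one OS release and RAID model."""
    return SUPPORT_MATRIX.get((os_release, raid_model), set())


def common_methods(os_release, raid_models):
    """Methods that cover every RAID attached to a single host."""
    sets = [supported_methods(os_release, raid) for raid in raid_models]
    return set.intersection(*sets) if sets else set()


single = common_methods("os-b", ["raid-x"])
mixed = common_methods("os-a", ["raid-x", "raid-y"])
```

An empty result, as for `mixed`, flags a host where no single failover method covers all of its RAIDs, so that host needs either different hardware or a carefully planned mix of mechanisms.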

What you do not want to do is what I saw at one site. They decided that they wanted to have a failover and a backup, so they implemented two different (HBA and Volume Manager) failover methods. The two failover methods confused each other and the system became overwhelmed. Whatever you do, implement only one method per machine, if at all possible. On rare occasions, you might have to implement different failover mechanisms for different RAIDs and HBAs depending on what is supported, but do so carefully.

It goes without saying that testing HBA failover is critical to ensure that the configuration is correct and that HBA and switch failover works as planned. Management often doesn’t understand the complexity of this type of configuration and doesn’t realize that testing must be done for each kernel patch, volume manager/file system update, loadable driver update, HBA driver or firmware update, switch firmware update and RAID controller update.

All too often, I have seen that a simple patch or firmware change is installed, and a week later an HBA or switch port fails and failover no longer works. This happens far less often than it did a year ago, and hopefully will be less common still a year from now, but it happens. Testing is your only hope to ensure that everything works. It is expensive in terms of hardware, software and time, but worth it.