Back in 1984, the high-speed network backbone was 10 BaseT Ethernet and disk drive sizes were measured in MB, not GB. NFS served a need and was rapidly adopted throughout the industry as a standard.

NFS has had some limited updates since then. Some were for performance, but most of those were minor; many others were for security and to keep up with other UNIX standards. We are all familiar with NFSv1, v2, v3 and now v4, and we have all complained about NFS performance: networks and storage have gotten much faster, but NFS has not kept pace.

Since NFS was introduced, the network side has gone from 10 BaseT to 10 GbE (a performance improvement of three orders of magnitude), and the storage side has gone from 3 MB/sec disk drives to 400 MB/sec Fibre Channel RAIDs, a performance improvement of 133 times. Over the same period, NFS has changed from 4 KB packets to 64 KB, an increase of only 16 times. Large packet sizes might be an issue for dirty networks, but when moving massive amounts of data over high-speed networks, something better is needed. Well, the good news is that the future of NFS is almost here.
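To put those growth figures side by side, here is a minimal Python sketch. It uses only the numbers quoted above; the variable and function names are mine, chosen for illustration.

```python
import math

# (then, now) pairs taken from the figures quoted in the text.
network_mbit = (10, 10_000)     # 10 Mbit Ethernet -> 10 GbE
disk_mb_per_sec = (3, 400)      # single disk drive -> Fibre Channel RAID
nfs_transfer_kb = (4, 64)       # NFS transfer size


def growth(before, after):
    """Return how many times larger `after` is than `before`."""
    return after / before


network_growth = growth(*network_mbit)
disk_growth = growth(*disk_mb_per_sec)
nfs_growth = growth(*nfs_transfer_kb)

# Orders of magnitude gained on the network side.
network_orders = round(math.log10(network_growth))

print(f"network: {network_growth:.0f}x ({network_orders} orders of magnitude)")
print(f"storage: {disk_growth:.0f}x")
print(f"NFS transfer size: {nfs_growth:.0f}x")
```

The gap between the first two numbers and the third is the whole argument: the pipes and the disks grew by factors of hundreds to a thousand, while the NFS transfer size grew by a factor of 16.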

Never Fear, NFSv4.1 Is Here

The best way to understand NFSv4.1 and what is being called pNFS is to do some reading. pNFS (Parallel NFS) is the part of the NFSv4.1 protocol that allows for high-speed movement of data between machines. It began as the work of a separate group. pNFS.com provides some of the background information on why pNFS was needed, including the problem statement, which details the problems with the current NFS protocol very well.

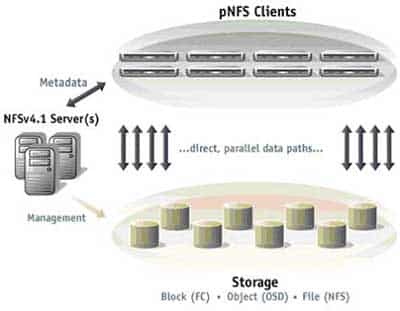

Here is a picture of what a pNFS system would look like, courtesy of pNFS.com:

What is most interesting about this picture is that the IETF (Internet Engineering Task Force) is going to support the T10 OSD protocol as part of NFSv4.1. This means that with NFSv4.1, you can move data from the NFS server via blocks, T10 OSD objects or files. This is important to keep in mind as NFSv4.1 starts to take off, since most vendors are implementing only one of these methods to start, so it’s good to ask questions.

What is also important is that the data and metadata are separated as part of the transport mechanism. So what basically happens for block-based storage is that the inode and any indirect extents are gathered up and the block addresses are read directly over the network using DMA. The performance of this method will be night and day compared to the way NFSv4 and earlier versions read files over the network. This won’t amount to much if you are reading over a 100 BaseT line or maybe even 1 Gbit Ethernet, although you might see some improvement. But what if you have 10 Gbit Ethernet or, say, DDR (double data rate) IB (InfiniBand), or in the future, something even faster? The current NFS protocol could make these high-speed networks run as if they were 1 Gbit Ethernet.
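The separation of metadata and data described above can be sketched in a few lines of Python. This is a toy in-memory model, not the NFSv4.1 wire protocol: the `MetadataServer`, `DataServer` and `Extent` classes, the `parallel_read` function and the tiny stripe size are all hypothetical names and values I made up for illustration. The only point is the shape of the exchange: one request for the layout, then direct reads from several data servers at once.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

STRIPE_SIZE = 4  # bytes per stripe unit; deliberately tiny for the demo


@dataclass(frozen=True)
class Extent:
    server: int   # index of the data server holding this piece
    offset: int   # byte offset on that server
    length: int   # number of bytes in this piece


class DataServer:
    """Holds raw bytes only; knows nothing about file names."""

    def __init__(self):
        self.store = bytearray()

    def write(self, data):
        offset = len(self.store)
        self.store += data
        return offset

    def read(self, offset, length):
        return bytes(self.store[offset:offset + length])


class MetadataServer:
    """Holds the layout (which extents make up a file), not the data."""

    def __init__(self, data_servers):
        self.data_servers = data_servers
        self.layouts = {}

    def create(self, name, data):
        # Setup helper for the demo: stripe the data round-robin.
        extents = []
        for i in range(0, len(data), STRIPE_SIZE):
            chunk = data[i:i + STRIPE_SIZE]
            server = (i // STRIPE_SIZE) % len(self.data_servers)
            offset = self.data_servers[server].write(chunk)
            extents.append(Extent(server, offset, len(chunk)))
        self.layouts[name] = extents

    def get_layout(self, name):
        return list(self.layouts[name])


def parallel_read(mds, name):
    """Fetch the layout once, then read every extent directly and in parallel."""
    layout = mds.get_layout(name)
    servers = mds.data_servers
    with ThreadPoolExecutor(max_workers=len(servers)) as pool:
        # pool.map returns results in layout order, so a plain join works.
        chunks = pool.map(
            lambda e: servers[e.server].read(e.offset, e.length), layout
        )
        return b"".join(chunks)


servers = [DataServer() for _ in range(3)]
mds = MetadataServer(servers)
mds.create("demo", b"hello parallel nfs")
print(parallel_read(mds, "demo"))
```

Notice that the metadata server is touched once per file, while the bulk bytes never pass through it. That is the property that lets aggregate bandwidth grow with the number of data servers instead of being capped by a single server.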

Back around 1991 I got called to a site on a technical support call. They had two Cray machines connected via HiPPI (High Performance Parallel Interface, 800 Mbit) and they also had FDDI (Fiber Distributed Data Interface, 100 Mbit). You might have guessed that NFS over HiPPI did not run 8 times faster than over FDDI; in fact, it ran about 10 percent faster. They asked why they had spent all of this money on HiPPI for a 10 percent performance improvement. I could not give a good reason. Of course ftp and rcp went much faster on HiPPI. Since I had no good answers for the customer, the only good thing to come out of this trip was that I got to go to Germany. Even back then, NFS on high-speed networks was not fast. Some minor tweaks were made to NFS to make it barely acceptable on 1 Gbit Ethernet, but it still did not run at channel speed. Fast-forward 15 years and we are about to enter the world of commodity high-speed networking with 10 Gbit Ethernet, and the current version of NFS is not up to the task. This is the introduction to the problem statement:

“The storage I/O bandwidth requirements of clients are rapidly outstripping the ability of network file servers to supply them. Increasingly, this problem is being encountered in installations running the NFS protocol. The problem can be solved by increasing the server bandwidth. This draft suggests that an effort be mounted to enable NFS file service to scale with its clusters of clients. The proposed approach is to increase the aggregate bandwidth possible to a single file system by parallelizing the file service, resulting in multiple network connections to multiple server endpoints participating in the transfer of requested data. This should be achievable within the framework of NFS, possibly in a minor version of the NFSv4 protocol.”

Clearly, from this statement the IETF understands the current limitations of NFS and is working to solve them. Almost every major vendor is committed to NFSv4.1 (pNFS), including, but not limited to, EMC, IBM, NetApp, Sun, and of course the Linux community. There are even internet rumors that Microsoft is looking at NFSv4.1 in a future Windows offering. I have my doubts.

What NFSv4.1 Will Not Do

Contrary to what some people think, NFS is a protocol, not a file system, even though the name implies otherwise. NFS provides a common external interface into a file system. What this means is that you are bound by the performance of the underlying file system. For example, if Microsoft ports NFSv4.1 to some future Windows system and you mount your camera over a 10 Gbit Ethernet connection, that does not mean that, with a FAT32 file system, you will be able to stream the data off the hard drive in your old computer at 1 GB/sec. Multiple limitations will prevent this.

Let’s start with the fact that the media cannot transfer data at 1 GB/sec, and more importantly, you will be limited by file system performance. Even if you did get the media to transfer data at 1 GB/sec, there is no way that a FAT32 file system, given the data layout, could support that data rate.
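The reasoning here is simply that a data path runs at the speed of its slowest stage. A minimal Python sketch makes that concrete. The stage names and MB/sec figures below are hypothetical numbers I picked for illustration, not measurements of any real camera, drive or file system.

```python
def bottleneck(stages):
    """Return (name, rate) of the slowest stage, which caps the whole path."""
    return min(stages.items(), key=lambda item: item[1])


# Hypothetical rates in MB/sec, for illustration only.
data_path = {
    "10 Gbit Ethernet": 1250,  # 10,000 Mbit/sec divided by 8
    "disk media": 60,          # assumed rate for an older drive
    "file system": 40,         # assumed rate the file system can sustain
}

name, rate = bottleneck(data_path)
print(f"Path is limited to {rate} MB/sec by the {name}")
```

Swapping in a faster network changes nothing in this example, because the network was never the limiting stage. That is the position a slow file system will find itself in once the protocol stops being the excuse.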

I believe that NFSv4.1 is going to make a number of file systems look very bad. Right now these NFS file systems can use the excuse that they are limited by the current NFS performance limitations, and that might be true, but the future will be different. One thing I always try is to log in to the NFS file server box itself and run a local performance test to see how fast I can read and write. I then look at the underlying hardware and estimate whether the performance limitation is the file system or the hardware. In this day and age, more often than not, it is not the hardware but the file system. Since the current NFS protocol is limiting and 10 Gbit Ethernet is not, file systems connected to servers running NFS can still blame the protocol, but those days will be over soon.
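For readers who want to try the local test described above, here is a rough Python sketch of a sequential write and read timing run. It is an assumption-laden stand-in for a real benchmark tool: the function name `measure_throughput`, the default file size and the block size are my own choices, and the read figure will be inflated by the operating system's page cache unless the file is much larger than memory.

```python
import os
import tempfile
import time


def measure_throughput(directory, size_mb=64, block_kb=1024):
    """Write then read a scratch file in `directory`; return (write, read) MB/sec."""
    block = os.urandom(block_kb * 1024)
    blocks = size_mb * 1024 // block_kb
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        # Sequential write, forced to stable storage before the clock stops.
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())
        write_seconds = time.perf_counter() - start

        # Sequential read of the same file (likely served partly from cache).
        start = time.perf_counter()
        total = 0
        with open(path, "rb") as f:
            while chunk := f.read(len(block)):
                total += len(chunk)
        read_seconds = time.perf_counter() - start
    finally:
        os.remove(path)  # always clean up the scratch file

    megabytes = total / (1024 * 1024)
    return megabytes / write_seconds, megabytes / read_seconds


if __name__ == "__main__":
    write_rate, read_rate = measure_throughput(tempfile.gettempdir())
    print(f"write: {write_rate:.0f} MB/sec, read: {read_rate:.0f} MB/sec")
```

Point `directory` at the exported file system on the server itself, then compare the result against what the underlying RAID or disks should deliver. If the hardware can do far more than the file system shows, the file system is the limit, not NFS.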

Being the pessimist that I am, my bet is that some vendors will not support NFSv4.1 and 10 Gbit Ethernet until they get their file systems fixed to allow them to stream data at near channel rates. Why would a vendor want to make themselves look bad? The vendors that have high-performance file systems (we all know who they are) might be the first vendors that support NFSv4.1. Those file systems that do not support high-performance data transfers might not support NFSv4.1, since you are always limited by the slowest component in the data path. Watching the progression of NFSv4.1 is going to be very interesting over the next few years, especially if you understand some of the reasons vendors are doing what they are doing.

Editor’s note: Henry Newman will discuss NFSv4.1 and pNFS further in a free Webcast on April 16, Storage Is Changing Fast — Be Ready or Be Left Behind.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 27 years experience in high-performance computing and storage.
