Software-defined storage (SDS) decouples storage intelligence from the underlying storage devices. The environment orchestrates multiple storage devices into a software storage management layer that operates above the physical storage layer. Because SDS moves intelligence up the stack, customers can buy commodity hardware and rely on SDS for policy-driven workload processing, load balancing, dedupe, replication, snapshots, and backup. Instead of purchasing expensive proprietary NAS or SAN systems, they can run SDS on commodity hardware and standard operating systems.

This is true as far as it goes. However, some SDS vendors – especially the software-only sellers – claim that the hardware doesn’t matter. But when it comes to software-defined storage design, hardware choices are critical.

It’s true that software-defined storage users can use commodity hardware and avoid expensive SAN or NAS systems with built-in storage intelligence. But they still need to integrate SDS with their hardware and design the physical infrastructure so that it supports and optimizes the software-defined storage layer.

Optimize Software-Defined Architecture

Storage IO paths are complex and pass through multiple stages, and pathing issues easily compromise quality of service (QoS) and performance. When admins add software-defined storage on top of this already-complicated architecture, complexity increases and performance and QoS may suffer even more.

SDS users can avoid pathing issues by carefully integrating their software-defined storage with the virtual data center and its physical infrastructure. For example, coordinate the SDS design with the SDN manager to optimize virtual paths for packet routing and quality of service.

However, many SDS admins spend most of their resources integrating SDS with the software-defined data center and don’t pay enough attention to integrating it with the physical layer. This inattention can seriously undermine your SDS project.

Benefits of Integrating Software-Defined Storage with Hardware

The benefits of SDS design that properly integrates your hardware can be substantial.

· Optimize application workloads with policy-driven performance and RTO/RPO settings.

· SDS pools capacity from multiple storage systems and presents it to applications running in the software-defined layer. Provisioning is simplified, and the policy-driven software manages SLAs and QoS for different applications.

· Admins centrally manage logical storage pools instead of logging in and out of device-level data services. Centralized management lets IT create security and other policies across a single logical infrastructure instead of through separate device-level interfaces.

· SDS simplifies scaling with dynamic provisioning. Admins can easily add servers without manual data distribution and load balancing.

· SDS is capable of strong security. Not all SDS offerings are developed equally, but these environments should at a minimum enable encryption, multi-tenant logical layers, and strong logging and monitoring with understandable reports at the management interface.

These are strong benefits. But none of this will work well for you if your software-defined storage design does not integrate smoothly with the underlying physical devices. Let’s talk about how you can ensure that it does.

Software-Defined Storage Considerations: Key Tips

These are the critical points to observe when you design and implement your software-defined storage:

1. Know your storage performance and capacity requirements

2. Understand vendor and hardware compatibilities

3. Design for current and future resilience

4. Finalize the design

5. Manage the software and physical layers

SDS Design Tip 1: Know Your Storage Requirements

The first step is to identify workloads and their respective applications, servers, and clients. Typical characteristics to measure include baseline and peak IOPS, throughput, queue depth (the number of requests waiting to be processed), latency, the portion of data that is actively changing (the working set), variation patterns, and the throughput you need to complete backups within their windows. You might also look at additional characteristics, such as whether data is encrypted and the ratio of sequential to random IO.
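One way to keep these measurements comparable across applications is to record them in a common structure. The sketch below is a minimal illustration, assuming a hypothetical `WorkloadProfile` record and decimal units (1 GB = 1,000 MB); the field names and the `orders-db` sample values are made up, not taken from any SDS product. It also derives two useful numbers: expected average latency from queue depth and IOPS using Little's law, and the minimum sustained throughput needed to finish a backup within its window.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Hypothetical per-application workload record (illustrative field names)."""
    name: str
    baseline_iops: float
    peak_iops: float
    throughput_mb_s: float
    queue_depth: float        # average outstanding IOs
    working_set_gb: float     # actively changing data
    random_fraction: float    # 0.0 = all sequential, 1.0 = all random
    encrypted: bool = False

    def burst_ratio(self) -> float:
        """How far peak IOPS rise above the baseline."""
        return self.peak_iops / self.baseline_iops

    def expected_latency_ms(self, iops: float) -> float:
        """Little's law: average latency = outstanding IOs / completion rate."""
        return self.queue_depth / iops * 1000


def backup_throughput_mb_s(data_gb: float, window_hours: float) -> float:
    """Minimum sustained MB/s needed to move data_gb within the backup window."""
    return data_gb * 1000 / (window_hours * 3600)


db = WorkloadProfile("orders-db", baseline_iops=2000, peak_iops=8000,
                     throughput_mb_s=150, queue_depth=32,
                     working_set_gb=400, random_fraction=0.8)
print(db.burst_ratio())                  # 4.0
print(db.expected_latency_ms(8000))      # about 4 ms at peak
print(backup_throughput_mb_s(1800, 1))   # 500.0
```

A high burst ratio is a hint that the tier needs performance headroom well above the baseline, not just enough to cover average load.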

Next, determine each workload's performance, capacity, and data protection requirements, including RTO and RPO. Assign priority applications to fast SSD and disk.
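Writing the placement rule down as a small policy function helps apply it consistently across applications. This is a minimal sketch; the tier names, the protection labels, and every threshold (1 ms, 10 ms, 15 minutes) are illustrative assumptions, not vendor guidance, so tune them to your own SLAs.

```python
def placement_policy(priority: str, latency_target_ms: float,
                     rpo_minutes: float) -> tuple:
    """Map an application's needs to a (tier, protection) pair.

    Tier names, protection labels, and thresholds are hypothetical.
    """
    # Performance drives the tier choice.
    if priority == "high" and latency_target_ms <= 1:
        tier = "tier0-ssd"
    elif priority in ("high", "medium") or latency_target_ms <= 10:
        tier = "tier1-ssd-and-fast-hdd"
    else:
        tier = "tier2-capacity-hdd"

    # RPO drives how data is protected.
    if rpo_minutes == 0:
        protection = "synchronous-replication"
    elif rpo_minutes <= 15:
        protection = "frequent-snapshots"
    else:
        protection = "scheduled-backup"
    return tier, protection


apps = {
    "trading": ("high", 0.5, 0),
    "erp": ("medium", 8, 60),
    "file-share": ("low", 50, 1440),
}
for name, needs in apps.items():
    print(name, placement_policy(*needs))
```

Keeping tier and protection as separate decisions reflects the distinction above: performance needs pick the media, while RTO and RPO pick the data protection method.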

Do the same for secondary workloads such as backup. Identify where the backup stream is stored, where it is replicated, and how much storage capacity to set aside for backup workloads. Plan the capacity you need for backup and archive: although long-term retention is likely to be on tape or in the cloud, you might want to continuously back up some high-priority applications to the storage hardware underlying the SDS. Typical capacity factors include the current size of the data, its monthly, semiannual, or yearly growth rate, and its scheduled retention period.
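The capacity factors above combine into a simple projection. This sketch assumes compound growth per period (monthly, semiannual, or yearly, as long as the rate and the period count use the same unit) and estimates retained backup space as full copies plus daily incrementals with no deduplication or compression. The function names and sample figures are hypothetical.

```python
def projected_capacity_tb(current_tb: float, growth_per_period: float,
                          periods: int) -> float:
    """Compound growth: current_tb * (1 + growth_per_period) ** periods."""
    return current_tb * (1 + growth_per_period) ** periods


def retained_backup_tb(full_tb: float, daily_change: float,
                       retained_fulls: int, retained_incrementals: int) -> float:
    """Space for retained full backups plus daily incrementals (no dedupe/compression)."""
    return full_tb * retained_fulls + full_tb * daily_change * retained_incrementals


# 50 TB today, growing 3% per month, planned 24 months out
primary = projected_capacity_tb(50, 0.03, 24)
# Keep 4 fulls and 30 daily incrementals at 2% daily change, sized on projected data
backups = retained_backup_tb(primary, 0.02, 4, 30)
print(f"primary: {primary:.1f} TB, on-SDS backups: {backups:.1f} TB")
```

Deduplication and compression would shrink the backup figure considerably, so treat this as an upper bound until you know your actual reduction ratios.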

SDS Design Tip 2: Understand Vendor and Hardware Compatibilities

Once you have identified workloads, performance characteristics, and capacity needs, you can choose your SDS vendor and optimize the physical storage and networking. As you deploy new software and hardware, be careful to install the recommended firmware and drivers.

Keep in mind that as recently as 2015, most new storage hardware did not automatically work with SDS software. Consequences included SDS not recognizing new devices or media, disk sector and whole disk failures, and difficulty troubleshooting integration problems using separate software and hardware management interfaces.

Since then, SDS vendors that also build hardware have been designing it for their SDS stacks, and software-only vendors have been improving their reference architectures. This raises questions about the promise of commodity hardware and freedom from vendor lock-in, but compatibility has improved.

Still, assume nothing when you research the storage hardware for your SDS system. Look deeply into compatibilities and best practices.

SDS Design Tip 3: Design for Current and Future Resilience

Once you have chosen your SDS and physical storage vendors, you will need to design your SDS environment. Design for resilience so your environment will cost-effectively scale to match your developing storage requirements.

When you design for resilience, understand your current and future thresholds before you start. Know your uptime requirements and resilience objectives for your storage configurations including cluster nodes, redundant network connections for uninterrupted traffic, and performance.

Pay attention to the scalability and growth characteristics of your SDS, storage media, processors, and RAM. And be sure to know how simple (or not) it will be to update firmware and drivers. The last thing you want is to put your whole admin team on alert and take your SDS system down for hours every time you need to upgrade firmware.

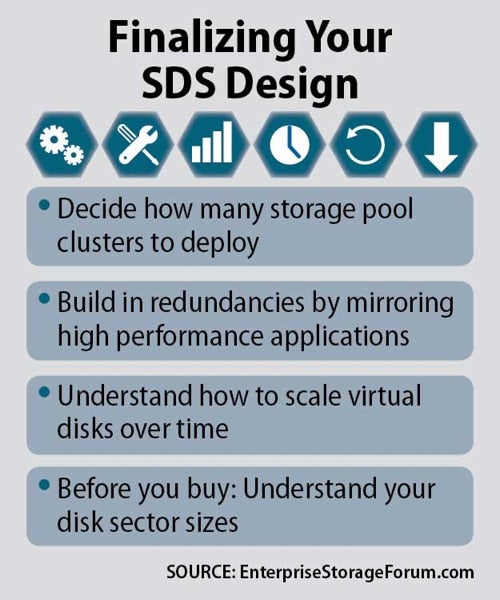

SDS Design Tip 4: Finalize the Design

Remember that your SDS runs on top of physical storage. Carefully design performance and capacity so the physical layer will properly support your software-defined storage environment.

Decide how many storage pool clusters to deploy, and understand provisioning and the process of adding new pools. There is no single right way to do this. Large storage pools simplify centralized management and fault troubleshooting, but failing storage media can ripple through the entire SDS. If that risk concerns you, you may prefer smaller storage pools, as long as the extra admin time is manageable.

Build in redundancy by mirroring high-performance applications across different storage devices in the pool, or across multiple pools, and use parity for low-write workloads such as archives. Both mirroring and parity protect data sets against device failure. Whichever you choose, consider reserving pool capacity so that data can be redistributed if an SSD or HDD fails.
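The capacity cost of each redundancy choice is easy to estimate before you buy drives. This is a minimal sketch, assuming equal-size drives, a reserve of whole drives held back for rebuilds and redistribution, and parity efficiency computed from a fixed stripe width. The scheme labels are hypothetical, and real SDS products lay out data differently, so treat the results as rough planning numbers.

```python
def usable_capacity_tb(drive_count: int, drive_tb: float, scheme: str,
                       reserve_drives: int = 1, stripe_width: int = 4) -> float:
    """Usable capacity after holding back a rebuild reserve and paying redundancy overhead."""
    if not 0 <= reserve_drives < drive_count:
        raise ValueError("reserve must leave at least one drive in service")
    if stripe_width < 3:
        raise ValueError("stripe width must leave room for data and parity")
    pool_tb = (drive_count - reserve_drives) * drive_tb
    efficiency = {
        "two-way-mirror": 1 / 2,     # every block stored twice
        "three-way-mirror": 1 / 3,   # every block stored three times
        "single-parity": (stripe_width - 1) / stripe_width,  # one parity column per stripe
        "dual-parity": (stripe_width - 2) / stripe_width,    # two parity columns per stripe
    }
    if scheme not in efficiency:
        raise ValueError(f"unknown scheme: {scheme}")
    return pool_tb * efficiency[scheme]


for scheme in ("two-way-mirror", "three-way-mirror", "single-parity", "dual-parity"):
    print(scheme, round(usable_capacity_tb(8, 4.0, scheme), 1), "TB")
```

Parity yields more usable space per drive, which is why it suits archives, while mirroring trades capacity for faster writes and simpler rebuilds.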

Decide what storage media sizes you will need as well as RAM and CPU requirements. Enclosures enter the picture here: you want sufficient size to house your current drives, and easy scalability so you can simply add more enclosures and redistribute disk drives as needed.

Performance and capacity are critical. Remember that SSD performance has two dimensions: the number of SSDs that can serve data simultaneously, and the capacity of each SSD, which determines how much data it can hold and process at once. Also consider the types and sizes of your SSDs and HDDs. Storage tiering comes into play here, with many admins choosing SSDs for Tier 0 and SSDs plus fast disk for Tier 1.
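Both dimensions can be checked together: a tier needs enough SSDs to reach its IOPS target and enough to hold its data, and the larger of the two counts wins. This sketch assumes a single per-drive IOPS figure taken from a datasheet or your own benchmark (real IOPS vary with block size and read/write mix) and a redundancy efficiency factor, defaulting to 0.5 for a two-way mirror. It ignores the extra writes that mirroring or parity generate, and the drive figures are hypothetical.

```python
import math


def ssds_needed(target_iops: float, target_tb: float,
                iops_per_ssd: float, tb_per_ssd: float,
                redundancy_efficiency: float = 0.5) -> int:
    """SSD count that satisfies both the performance and the capacity target."""
    for_performance = math.ceil(target_iops / iops_per_ssd)
    for_capacity = math.ceil(target_tb / (tb_per_ssd * redundancy_efficiency))
    return max(for_performance, for_capacity)


# Hypothetical Tier 0: 400k IOPS and 20 TB usable on 100k-IOPS, 4 TB drives
print(ssds_needed(400_000, 20, 100_000, 4))   # capacity-bound: 10
# Same drives, 1.2M IOPS and 8 TB usable
print(ssds_needed(1_200_000, 8, 100_000, 4))  # performance-bound: 12
```

When the capacity count dominates, larger SSDs help; when the performance count dominates, more (possibly smaller) SSDs working in parallel help more.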

Processors and RAM are not necessarily as critical as media performance and capacity, but they may be very important depending on where data traffic is processed. If it’s processed at the physical networking layer, for example by RDMA network cards, then your storage processors don’t have to do all the heavy lifting. If the storage processors do process the traffic, you’ll need high-performance processors for high-priority traffic. RAM makes a difference if you are running operations such as deduplication on clustered nodes.

Now that you have planned the underlying storage foundation, plan the virtual one just as carefully. Know how many virtual disks you plan to start with and how you will scale them over time. Remember that the more virtual disks you create in cluster nodes, the more time it will take to manage and load-balance them. The more intelligence your SDS offers for virtual disk management, the easier your job becomes. Also remember that the more virtual disks you use, the more capacity you must reserve for write-back caches.

The total size of your virtual disks will of course depend on the actual size of your storage tiers. Factor in reserved capacity for dynamic data distribution and write-back caches. Virtual disks can be terabytes in size, but peak workloads should not push them past your performance or capacity thresholds. Smaller disk sizes may be more reliable when running large workloads.
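Here is that sizing logic in numbers: start from the usable size of the tier, subtract the capacity reserved for dynamic data redistribution and for each virtual disk's write-back cache, keep headroom below a fill threshold, and divide what remains among the virtual disks. This is a minimal sketch; the 80% fill limit, the per-disk cache size, and the sample tier are assumptions for illustration, so use your SDS vendor's guidance for real values.

```python
def max_virtual_disk_tb(tier_usable_tb: float, vdisk_count: int,
                        redistribution_reserve_tb: float,
                        cache_gb_per_vdisk: float, max_fill: float = 0.8) -> float:
    """Largest equal-size virtual disk that fits once reserves and headroom are set aside."""
    # Write-back cache grows with the number of virtual disks (decimal units).
    cache_tb = vdisk_count * cache_gb_per_vdisk / 1000
    available_tb = (tier_usable_tb - redistribution_reserve_tb - cache_tb) * max_fill
    if available_tb <= 0:
        raise ValueError("reserves exceed tier capacity")
    return available_tb / vdisk_count


# Hypothetical 100 TB tier, 8 TB reserve, 10 virtual disks with 100 GB cache each
print(round(max_virtual_disk_tb(100, 10, 8, 100), 2))   # 7.28
```

Raising `vdisk_count` shrinks each disk twice over: the remaining space is split more ways, and more of it goes to write-back cache.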

Before you buy, find out which disk sector sizes you need. For example, if your virtual OSs and applications support native 4K sector disks (4Kn), you will get better write performance and some reliability and capacity benefits. You can use 4K disks with 512-byte emulation (512e) for backward compatibility, but you will not get the same level of benefit as with native 4K.
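Much of the write-performance gap between native 4K and 512e drives comes from alignment. A 512e drive accepts 512-byte logical writes but stores data in 4096-byte physical sectors, so any physical sector that a write only partly covers must be read, modified, and rewritten. This sketch counts those partially covered sectors for a given byte offset and length. It illustrates the arithmetic only and is not a model of any specific drive's firmware.

```python
PHYSICAL_SECTOR = 4096  # bytes per physical sector on 4K-format drives


def read_modify_write_sectors(offset: int, length: int,
                              physical: int = PHYSICAL_SECTOR) -> int:
    """Number of physical sectors a write covers only partially.

    On a 512e drive each of these forces a read-modify-write cycle;
    an aligned write (offset and length multiples of 4096) needs none.
    """
    if length <= 0:
        raise ValueError("length must be positive")
    end = offset + length
    first, last = offset // physical, (end - 1) // physical
    partial = set()
    if offset % physical:
        partial.add(first)   # write starts mid-sector
    if end % physical:
        partial.add(last)    # write ends mid-sector
    return len(partial)


print(read_modify_write_sectors(0, 4096))    # 0: aligned
print(read_modify_write_sectors(512, 4096))  # 2: straddles two sectors
print(read_modify_write_sectors(0, 512))     # 1: partial single sector
```

With 4Kn drives, the logical sector is itself 4096 bytes, so the OS issues only whole-sector IO and this penalty does not arise.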

SDS Design Tip 5: Manage the Software and Physical Layers

Monitoring the software and hardware sides of the SDS architecture involves two different interfaces, or more if you manage multiple storage devices separately. SDS management abstracts the storage from its physical infrastructure and manages it at a logical layer. It administers policies for the hardware storage devices but does not manage the hardware itself. Yet common events such as failed interconnects and disks can take down an entire software-defined storage environment.

The disadvantage is that admins must separately troubleshoot and manage the physical layer instead of centrally managing the storage stack. This does not have to be a deal breaker for deploying SDS. SAN and NAS hardware is already highly complex, and changing application pathways and configurations is time- and resource-intensive. SDS can certainly simplify these changes, which reduces the resources required to manage both the disk devices and the SDS.

Simplify hardware troubleshooting by using robust hardware architecture with redundant clusters, dynamic scaling, and self-healing mechanisms. Also look for reference architectures and storage devices that are purpose-built to run SDS stacks. Invest in additional software like drive monitoring utilities.
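As an example of drive monitoring, smartmontools' `smartctl` can report a drive's overall SMART health as JSON (`--json`, available in smartmontools 7.0 and later). The sketch below assumes that this output contains a `smart_status.passed` field, which is how recent versions report the health self-assessment; verify it against your version. Running the tool and evaluating the results are kept separate so the evaluation logic can be tested without hardware. The function names are hypothetical.

```python
import json
import subprocess


def read_smart_health(device: str) -> dict:
    """Run smartctl's health check with JSON output and return the parsed report.

    smartctl uses a bit-coded exit status, so a nonzero code is not treated
    as a failure here; the JSON body is inspected instead.
    """
    result = subprocess.run(["smartctl", "--json", "-H", device],
                            capture_output=True, text=True)
    return json.loads(result.stdout)


def failing_devices(reports: dict) -> list:
    """Return devices whose report lacks a passing SMART status, sorted by name."""
    return sorted(dev for dev, report in reports.items()
                  if not report.get("smart_status", {}).get("passed", False))
```

On a host with smartmontools installed, you might call `read_smart_health` on each capacity drive on a schedule (device paths such as `/dev/sda` are placeholders) and raise an alert for anything `failing_devices` returns. A missing status is treated as failing so that a drive that stops reporting is not silently ignored.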

Software Defined Storage: Powerful but Not Simple

SDS architectures can save money and time. When admins successfully integrate storage software and hardware and customize them to their storage needs, the business may realize significant improvements and cost savings.

No one is saying this is easy. One of the beauties of virtualized environments is that they run on a variety of storage, server, and networking components. Yet when admins want to combine these platforms into a single virtual SDS, they cannot simply expect them to automatically work seamlessly.

This is why storage admins need to devote time and attention to making sure their hardware is reliable, fault-tolerant, and well integrated with their software-defined storage design. Only this level of integration allows SDS to properly manage stored data with flexible and dynamic policies.

You can get there by building a clear understanding of how your SDS and hardware will integrate, writing an implementation plan, and giving your team enough time to optimize the infrastructure from the start. Investing the right resources at the start of the project will help ensure that your SDS deployment is everything you need, now and into the future.
