You have probably watched Happy Days at some point in your life (even Mark Watney watched it while stranded on Mars). In its first years it was generally considered a good show and had good ratings. But then came the episode where the Fonz, on water skis, jumped over a shark. After that episode, Happy Days never recovered. The show stayed on the air but the ratings continued to drop. Thus the phrase “jumping the shark” was coined. While it originally applied to television programs, it has since been extended to “… indicating the moment when a brand, design, franchise or creative effort’s evolution declines.”

Storage technology is evolving rapidly. New types of storage, new storage solutions and new storage tools are being developed, and they hold a great deal of promise for pushing storage technology forward. It is likely that some current technologies will not survive in their current form. Which technologies may jump the shark is the subject of this article.

Taking current trends and making predictions from them is always a dicey proposition. However, there are some trends that will have a clear impact on hard drives.

NVRAM and Burst Buffers

While hard drives are still being produced at a very high rate, other storage technologies are catching up in different categories. Solid State Drives (SSDs) are becoming increasingly popular, and now Intel/Micron’s 3D XPoint NVRAM (Non-Volatile RAM) will provide a completely new way to store data.

3D XPoint will look like DIMMs and sit in the system DIMM slots. With regular DRAM, the data in memory is lost if the system is turned off. With NVRAM, however, the system can be turned off and the contents remain in memory (hence the label “non-volatile”). Rather than write data to a conventional storage device, the data can be left in memory and shared with other applications. This can improve application performance because an application doesn’t have to read data from conventional storage: the data is already in memory, and the application just needs a pointer to its location. Moreover, NVRAM will usually come in terabyte (TB) quantities for systems, instead of the gigabyte (GB) quantities typical of DRAM.
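The "just needs a pointer" idea can be loosely illustrated with a memory-mapped file. This is only an analogy under stated assumptions: an ordinary file stands in for the NVRAM region, and the names `producer`, `consumer`, `REGION_SIZE` and the file `region.bin` are hypothetical. How real NVRAM is exposed to applications is not covered by this article.

```python
import mmap
import os
import struct
import tempfile

# ASSUMPTION: an ordinary memory-mapped file stands in for an NVRAM region.
REGION_SIZE = 4096
path = os.path.join(tempfile.mkdtemp(), "region.bin")  # hypothetical name


def producer(path):
    """Store a value in the mapped region using plain memory writes."""
    with open(path, "wb") as f:
        f.truncate(REGION_SIZE)  # create a zero-filled region
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            struct.pack_into("<d", region, 0, 3.14159)  # write at offset 0
            region.flush()  # make sure the bytes reach the backing store


def consumer(path):
    """A second application maps the same region and reads at a known offset."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            (value,) = struct.unpack_from("<d", region, 0)
            return value


producer(path)
print(consumer(path))
```

The consumer never issues a conventional read of a data file format; it maps the region and dereferences an offset, which is the access pattern the paragraph above describes.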

Alternatively, some or all of the NVRAM can be used as storage (a block device) allowing the creation of a “burst buffer.” Data can be quickly copied from DRAM to the NVRAM burst buffer because it’s inside the system. Theoretically, the state of the system can be stored in the burst buffer while the memory contents are still stored in NVRAM. Then the system can be power cycled, the state can be read from the burst buffer, and the system will resume its previous state. While this can be done today, the fact that the memory contents stay in memory means that the restart to the last state is very, very fast.

The key to burst buffers is their extremely high bandwidth, which comes from the storage being on the memory bus. The current projections are that NVRAM won’t be as fast as regular memory but will be faster than SSDs. It will also cost less than DRAM but more than SSDs. NVRAM will first go on the market in 2016 or 2017 in HPC systems.

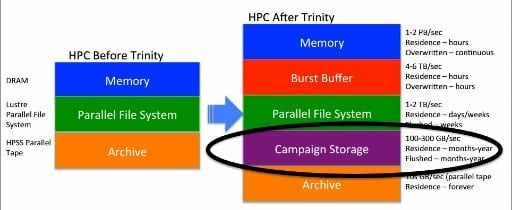

The introduction of NVRAM reduces the performance and capacity gap between main memory and an external file system. The figure below, courtesy of The Next Platform, outlines the storage hierarchy before and after the advent of NVRAM for a new HPC system at Los Alamos named Trinity. The image is from a talk given by Gary Grider of Los Alamos.

Trinity is expected to have a peak performance of more than 40 PetaFLOPS. It is also expected to have an 80 Petabyte (PB) parallel file system with a sustained bandwidth of 1.45 Terabytes/s, and a burst buffer file system that is 3.7 PB in capacity with a sustained bandwidth of 3.3 TB/s [Note: It will have a memory capacity of about 2PB, so the burst buffer can easily hold the entire contents of memory].

Notice how the burst buffer storage (NVRAM) in Trinity (on the right in the diagram) has a bandwidth that is 2-6 times that of the parallel file system but still lower than main memory. However, when the power is turned off, the data is not lost as it is with DRAM.
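To put the Trinity figures in perspective, here is a rough back-of-the-envelope sketch of how long it would take to dump the full memory contents to each storage layer. It uses only the numbers quoted above and assumes decimal units (1 PB = 1,000 TB) and that the quoted sustained bandwidth is fully achieved; the function name `dump_seconds` is made up for illustration.

```python
# Figures quoted in the article for Trinity.
MEMORY_PB = 2.0               # approximate memory capacity
BURST_BUFFER_PB = 3.7         # burst buffer capacity
BURST_BUFFER_TB_PER_S = 3.3   # burst buffer sustained bandwidth
PFS_TB_PER_S = 1.45           # parallel file system sustained bandwidth

TB_PER_PB = 1000  # ASSUMPTION: decimal units


def dump_seconds(data_pb, bandwidth_tb_per_s):
    """Ideal time to write data_pb petabytes at the given sustained rate."""
    return data_pb * TB_PER_PB / bandwidth_tb_per_s


bb_seconds = dump_seconds(MEMORY_PB, BURST_BUFFER_TB_PER_S)
pfs_seconds = dump_seconds(MEMORY_PB, PFS_TB_PER_S)
images_that_fit = BURST_BUFFER_PB / MEMORY_PB

print(f"burst buffer: {bb_seconds / 60:.1f} min")
print(f"parallel file system: {pfs_seconds / 60:.1f} min")
print(f"full memory images that fit in the burst buffer: {images_that_fit:.2f}")
```

Under these assumptions a full memory dump takes roughly 10 minutes to the burst buffer versus roughly 23 minutes to the parallel file system, and the burst buffer can hold a little under two complete memory images.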

In Trinity, Los Alamos has introduced burst buffers into the storage hierarchy as well as something new they refer to as “campaign storage.” The campaign storage layer is below the parallel file system and above the archive layer. It has about 1/10th the performance of the parallel file system but presumably has a greater capacity than the layers above it. It is intended to hold data longer (months to years) and to be flushed less frequently.

The right-hand diagram, which is the storage stack for Trinity, is projected to become a very common hierarchy for HPC systems in the next few years. The prior hierarchy on the left-hand side of the diagram had only two layers of storage, but now users have to contend with four layers.

With two layers, the parallel file system was the primary storage for the system. Any data that needed to be kept for a longer time but wasn’t accessed very often was sent to tape (archive). Tools that automatically performed the migration between the two tiers of storage were, and are, fairly common. There are tools and techniques for making all data, regardless of whether it’s on tape or disk, appear to be on the same file system (e.g. HSM – Hierarchical Storage Management). This has tremendous advantages for the user because the same set of commands can be used on a file regardless of whether it’s in the parallel file system or in the archive.
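The two-tier migration idea can be sketched in a few lines. This is a toy model, not how any particular HSM product works: the 90-day threshold, the tier names, and the `FileRecord`, `migrate` and `read_file` names are all hypothetical.

```python
from dataclasses import dataclass

ARCHIVE_AFTER_DAYS = 90  # ASSUMPTION: hypothetical migration threshold


@dataclass
class FileRecord:
    name: str
    days_since_access: int
    tier: str = "parallel_fs"


def migrate(files, archive_after_days=ARCHIVE_AFTER_DAYS):
    """Move files that have not been accessed recently to the archive tier."""
    moved = []
    for f in files:
        if f.tier == "parallel_fs" and f.days_since_access >= archive_after_days:
            f.tier = "archive"
            moved.append(f.name)
    return moved


def read_file(files, name):
    """Single namespace: the caller uses the same call whichever tier holds
    the file. An archived file is recalled to the parallel file system."""
    for f in files:
        if f.name == name:
            if f.tier == "archive":
                f.tier = "parallel_fs"  # recall from the archive on access
            f.days_since_access = 0
            return f
    raise FileNotFoundError(name)


files = [FileRecord("run_001.dat", 2), FileRecord("old_results.dat", 400)]
print(migrate(files))
print(read_file(files, "old_results.dat").tier)
```

The point of the single `read_file` entry point is the one made above: the user issues the same command whether the file currently lives on disk or in the archive.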

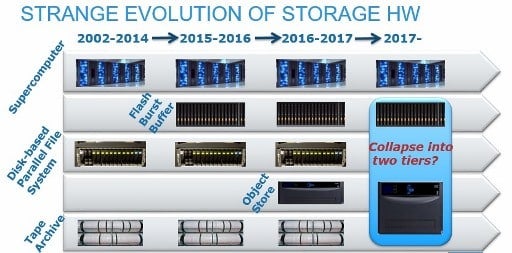

John Bent, who has worked at Los Alamos for many years on innovative storage systems, predicts that several of the storage layers will ultimately collapse into a single layer. He illustrates this in the diagram below.

Specifically he sees parallel file systems, object storage (“campaign storage”) and archive storage collapsing down into 1-2 layers. This brings the number of storage layers down to 2-3, which is better than four.

The top storage layer is NVRAM (burst buffers) inside the nodes. The next layer down can either be parallel file systems or a combination of parallel file systems and object storage. The third and final storage layer is either an archive or a combination of an archive and object storage (recall that Bent says that the parallel file system, object store and archive are to be split into two tiers).

New Archive Media

Traditionally, archive meant a storage layer where you place data that is infrequently accessed but still has to be available to be read. The data is written to the archive layer in a sequential fashion, and there is really no such thing as random access because the data is to be accessed very infrequently. The classic solution for this has been tape.

Today tape is commonplace. It has high density, several tape solutions have very large capacities, and the media is stable and reliable. However, the tape robots it requires are expensive and generally have high maintenance costs. For archive data, tape is an obvious choice over storing everything on spinning media (hard drives). But there is some new technology that might change things.

Recently, there was an article about storing data in five dimensions on nanostructured glass that can survive for billions of years. This comes from the University of Southampton, where researchers have developed a method of using lasers to read and write to a fused quartz substrate (glass). Currently they are capable of writing 360TB to a 1-inch glass wafer. These wafers can withstand temperatures of up to 1,000 degrees Celsius and are stable at room temperature for billions of years (13.8 billion years at 190 degrees Celsius).

The technology is still being developed and commercialized, so many aspects of it are unknown. The read and write speeds are unknown, but it is a fair assumption that the data is written to the glass wafers in a sequential manner, and random IO is not allowed (sounds a great deal like tape). But the promise of the technology is massive. The researchers have already written several historical documents to a wafer as a demonstration. Such a dense and stable medium is an obvious solution for archiving data.

Gunfight at the Storage Corral

The burst buffer storage layer uses NVRAM for storage, and the archive layer uses either tape or, most likely, a new medium such as the glass wafers previously mentioned. The two middle layers, the parallel file system (such as Lustre) and the object storage layer, are where data needs to be accessed in a random manner, including random write access and rewriting data files. These two layers are the only places where classic storage media such as hard drives or SSDs could reside.

The capacity of hard drives is continually increasing, with manufacturers releasing 8TB and 10TB 3.5″ drives. To create these increased capacities, manufacturers have started to use shingled magnetic recording (SMR) drives. SMR drives allow the density of the individual platters to be increased at the cost of greatly reduced random write performance. Writing changed data involves first reading the data from surrounding tracks, writing it to available tracks, and then writing the changed data to the drive. Consequently, rewriting data is a very time-consuming process. This has led people to refer to SMR drives as “sequential” drives. This also sounds a great deal like archive storage.
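The cost of rewriting data on a shingled drive can be illustrated with a toy model. It assumes, for illustration only, that tracks are grouped into bands and that each track overlaps the next one in its band, so changing one track forces that track and every later track in the band to be rewritten. The band size of 20 tracks and the function names are hypothetical, not taken from any real drive.

```python
import random

TRACKS_PER_BAND = 20  # ASSUMPTION: hypothetical band size


def tracks_written_for_update(track_index, tracks_per_band=TRACKS_PER_BAND):
    """Tracks that must be written when one track in a shingled band changes.

    In this toy model the changed track and every track after it in the
    band are rewritten, because each track overlaps its successor.
    """
    if not 0 <= track_index < tracks_per_band:
        raise ValueError("track index outside the band")
    return tracks_per_band - track_index


def average_write_amplification(num_updates, seed=0):
    """Average tracks written per single-track update at random positions."""
    rng = random.Random(seed)
    total = sum(
        tracks_written_for_update(rng.randrange(TRACKS_PER_BAND))
        for _ in range(num_updates)
    )
    return total / num_updates


# Appending at the end of a band touches one track; a random update
# touches, on average, about half the band.
print(tracks_written_for_update(TRACKS_PER_BAND - 1))
print(average_write_amplification(10_000))
```

Even in this simplified model, a random in-place update costs roughly ten track writes on average while a sequential append costs one, which is why these drives behave best when treated as sequential devices.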

At the same time, the performance of SSDs is much better than that of hard drives, although SSDs do not yet match hard drives on capacity or price. The $/GB of SSDs has consistently been much higher than that of hard drives, but new technologies such as 3D NAND chips and TLC (Triple Level Cell) flash have brought a bit of change.

To get an idea of the $/GB for both hard drives and SSDs, Newegg was searched on Feb. 13 for the least expensive SATA hard drives and SATA SSDs. These are consumer storage devices, but the intent is to get a feel for the trends. The results are in the table below:

| Drive Capacity (GB) | SSD $/GB | 5400 RPM Hard Drive $/GB | 7200 RPM Hard Drive $/GB |
|---|---|---|---|
| 120 GB | 0.3332 | | |
| 128 GB | 0.3358 | | |
| 240 GB | 0.2499 | | |
| 480 GB | 0.2291 | | |
| 500 GB | | 0.0399 | 0.0699 |
| 512 GB | 0.2343 | | |
| 1,000 GB (1 TB) | 0.2299 | 0.0445 | |
| 2,000 GB (2 TB) | 0.3289 | 0.0249 | 0.0269 |
| 3,000 GB (3 TB) | | 0.0299 | 0.0269 |
| 4,000 GB (4 TB) | | 0.0304 | 0.0399 |
| 5,000 GB (5 TB) | | 0.0339 | 0.0399 |
| 6,000 GB (6 TB) | | 0.0358 | 0.0391 |
| 8,000 GB (8 TB) | | 0.0278 | 0.0549 |
| 8,000 GB (8 TB) archive (SMR) | | 0.0683 | |

The table indicates that hard drives are roughly an order of magnitude less expensive than SSDs on a $/GB basis. Hard drives also have larger capacities than SSDs, at least for consumer drives. Recently, Intel and Micron announced that 10TB SSDs will be available.
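The "order of magnitude" claim can be checked against the capacities where the table lists both an SSD price and a hard drive price. The sketch below uses the 1 TB and 2 TB figures from the table and compares each SSD price with the cheapest hard drive price at the same capacity; the variable names are just for illustration.

```python
# $/GB values taken from the table, for capacities listing both device types.
ssd_price = {1000: 0.2299, 2000: 0.3289}
hdd_prices = {1000: [0.0445], 2000: [0.0249, 0.0269]}

# Ratio of SSD price to the cheapest hard drive at the same capacity.
ratios = {
    capacity_gb: ssd_price[capacity_gb] / min(hdd_prices[capacity_gb])
    for capacity_gb in ssd_price
}

for capacity_gb, ratio in sorted(ratios.items()):
    print(f"{capacity_gb} GB: SSD costs {ratio:.1f}x the cheapest hard drive per GB")
```

At these two capacities the SSD premium works out to roughly 5x and 13x, which brackets the "roughly an order of magnitude" figure.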

However, one trend that the table does not show is the drop in SSD prices. Just a few years ago, the average price of an SSD was about $1/GB, even for consumer drives. Now some of them are below $0.25/GB. Given that the sequential performance of an SSD is about 5-10 times that of a hard drive and the random IOPS performance is 3-5 orders of magnitude greater, SSDs are becoming extremely popular as a storage medium.

What Does the Future Hold?

A quick summary:

- Burst buffers will likely become the very fast layer of storage for systems, replacing parallel file systems that are external to the system.

- The introduction of Burst Buffers will likely cause a consolidation in the middle layer of storage.

- New archive media that have a very high density and a very long life are being productized.

- Hard drives are not increasing in performance, and with SMR drives the random write performance is decreasing.

- Hard drives are still the least expensive storage medium that isn’t archive oriented.

- SSDs are rapidly coming down in price and the capacities are quickly increasing.

Putting these trends together points to the fact that hard drives, as they exist today, are not evolving at the same pace as other storage solutions. They are being squeezed from the top by much higher-performing technologies such as burst buffers, and from the bottom by tape and, most likely, a new medium such as glass.

Hard drives will be in use for a long time. They have a wonderful $/GB ratio so if capacity and reasonable performance are important then hard drives are a great solution. However, just like Happy Days, hard drives may have already jumped the shark.

Photo courtesy of Shutterstock.