SSDs are one of the hottest technologies in storage. They offer great throughput (read and write) and great IOPS performance, but they come with the challenges of a limited number of rewrite cycles and a much higher price than spinning media. In addition, making the technology perform well takes some work, requiring new controller techniques to improve the overall behavior. Some of these techniques improve performance or longevity, or both. However, these techniques must be “tuned” so the best possible performance is extracted from the technology. One SSD controller company has a technique that improves both performance and longevity, but how much it improves them depends entirely on your data.

SandForce is a fairly new SSD controller company. Its products are used in a great number of SSDs, from affordable consumer SSDs to enterprise-class SSDs. The technique SandForce has developed is real-time data compression in its controllers. They actually compress the data before it is written to the drive, which can increase performance and improve the longevity of the drive.

This article examines the concepts of using real-time data compression in SSDs by taking a consumer SandForce-based SSD for a spin. I test the throughput performance using IOzone, which allows me to vary the compressibility (dedupability) of the data so I can see the impact on the throughput performance of the drive. The results are pretty exciting and interesting as we’ll see.

Real-Time Data Compression in SSDs

I talked about real-time data compression in SandForce SSD controllers in a different article, but the concepts definitely bear repeating because they are so fascinating.

The basic approach SandForce has taken is to use some of the capabilities of its SSD controller for real-time data compression. Since the implementation is proprietary, I can only speculate on what is going on inside the controller. Most likely, the uncompressed data comes into the drive (the controller) and is stored in a buffer. The controller then compresses the data in the buffer, either in individual chunks or perhaps after coalescing chunks. This process takes some time and computational resources before the data is written to the storage media.

Once the data is compressed, it is placed on the blocks within the SSD. However, things are not quite this simple. To ensure the drive is reporting the correct amount of data stored, presumably the uncompressed data size is also stored in some sort of metadata format on the drive itself (maybe within the compressed data?). This means that if a data request comes into the controller with a request for the number of data blocks or size of a data block, the correct size is reported.

But since the data has been compressed, the amount of data that is written is less than the uncompressed data. Less data is written to the storage media, which means less time is used, which means faster throughput. The amount of time used to write the data is proportional to the size of the compressed data (i.e., the compressibility of the data), which drives the throughput performance. But we also need to remember that the “latency” for a SandForce controller can be higher than a typical controller because of the time needed to compress the data. I’m sure SandForce has taken this into account so that not too much time is spent compressing the data. In fact, I bet it’s a constant time compression algorithm.

During a read operation, the compressed data is probably read into a cache and then uncompressed. After that, it is sent to the operating system as though the data was never compressed. Presumably, the performance also depends on the ability to uncompress the data quickly so the algorithm should have a fixed time.
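The write and read paths speculated about above can be sketched in a few lines of Python. This is purely illustrative and is not SandForce's design: the 4-byte length header, the use of zlib, and the function names `write_chunk` and `read_chunk` are all assumptions made for the example.

```python
import struct
import zlib

# Hypothetical metadata layout: a 4-byte little-endian field holding the
# uncompressed length, followed by the compressed payload.
HEADER = struct.Struct("<I")


def write_chunk(data: bytes) -> bytes:
    """Compress one chunk and prepend its uncompressed length."""
    return HEADER.pack(len(data)) + zlib.compress(data)


def read_chunk(stored: bytes) -> bytes:
    """Decompress a stored chunk and check it against the recorded length."""
    (original_len,) = HEADER.unpack_from(stored)
    data = zlib.decompress(stored[HEADER.size:])
    if len(data) != original_len:
        raise ValueError("stored length does not match decompressed data")
    return data


if __name__ == "__main__":
    chunk = b"A" * 4096  # a highly compressible 4 KiB chunk
    stored = write_chunk(chunk)
    print(f"logical size: {len(chunk)} bytes, stored size: {len(stored)} bytes")
    assert read_chunk(stored) == chunk
```

The point of the header is that the logical size can be reported without decompressing anything, while the amount actually written to the media is only the (smaller) stored size.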

The really interesting and exciting part of this is that the compressibility of your data influences the performance of the storage media. If your data is very compressible, then your performance can be very, very good. If your data is as compressible as a rock then your performance may not be as good. But before you start thinking, “my data is very incompressible,” note that I have seen lots of different data sets (even binary ones) capable of being compressed. Also keep in mind that the SandForce controller needs to compress the data only in a specific chunk, not the entire file. So it’s difficult to say a priori with any certainty that your data will not perform well on a SandForce controller. I’m sure SandForce has spent a great deal of time running tests on typical data for the target markets and thus has a good idea of what it can and cannot do. Since the controller is quite popular, I’m sure the efforts have been successful.
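To get a rough feel for how compressible your own data is at the chunk level rather than as a whole file, something like the following sketch can help. The 4 KiB chunk size and the use of zlib are assumptions for illustration; the chunk size and algorithm inside a SandForce controller are not public.

```python
import os
import sys
import zlib


def chunk_ratios(data: bytes, chunk_size: int = 4096) -> list:
    """Return compressed size / original size for each chunk of data."""
    ratios = []
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        ratios.append(len(zlib.compress(chunk)) / len(chunk))
    return ratios


def mean_ratio(data: bytes, chunk_size: int = 4096) -> float:
    """Average per-chunk ratio; 1.0 (no gain) for empty input."""
    ratios = chunk_ratios(data, chunk_size)
    return sum(ratios) / len(ratios) if ratios else 1.0


if __name__ == "__main__":
    if len(sys.argv) > 1:
        # Analyze a file given on the command line.
        with open(sys.argv[1], "rb") as f:
            print(f"{sys.argv[1]}: mean chunk ratio {mean_ratio(f.read()):.2f}")
    else:
        # Demo: all-zero data versus random data.
        print(f"zeros:  {mean_ratio(bytes(64 * 1024)):.2f}")
        print(f"random: {mean_ratio(os.urandom(64 * 1024)):.2f}")
```

A mean ratio well below 1.0 suggests the data compresses nicely even in small pieces; a ratio near 1.0 suggests it behaves like the proverbial rock.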

However, the fundamental fact remains that the performance of the SSD depends on the compressibility of the data. Thus, I decided to get a consumer SSD with a SandForce 1222 controller and run some IOzone tests with variable levels of dedupability (compressibility) to see how the SSD performs.

Testing/Benchmarking Approach and Setup

The old phrase “if you’re going to do it, do it right” definitely rings true for benchmarking. All too often storage benchmarks are nothing more than marketing materials providing very little useful information. In this article, I will follow concepts that aim to improve the quality of the benchmarks. In particular:

- The motivation behind the benchmarks will be explained (if it hasn’t already).

- Relevant and useful storage benchmarks will be used.

- The benchmarks will be detailed as much as possible.

- The tests will run for more than 60 seconds.

- Each test is run 10 times, and the average and standard deviation of the results will be reported.

These basic steps and techniques can make benchmarking and testing much more useful.
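Reducing repeated runs to an average and a standard deviation is straightforward with Python's standard library. In this sketch the function name `summarize_runs` is hypothetical, the sample values are placeholders rather than measurements from this article, and the choice of the sample (n-1) standard deviation is an assumption.

```python
import statistics


def summarize_runs(throughputs):
    """Return (mean, sample standard deviation) for a list of run results."""
    mean = statistics.mean(throughputs)
    stdev = statistics.stdev(throughputs)  # sample (n-1) standard deviation
    return mean, stdev


if __name__ == "__main__":
    # Placeholder values for illustration only; NOT results from this article.
    runs_kb_s = [100.0, 102.0, 98.0, 101.0, 99.0,
                 100.0, 103.0, 97.0, 100.0, 100.0]
    mean, stdev = summarize_runs(runs_kb_s)
    print(f"{len(runs_kb_s)} runs: mean = {mean:.1f} KB/s, stdev = {stdev:.1f} KB/s")
```

A small standard deviation relative to the mean is what makes a difference between two bars in a chart worth discussing.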

The benchmarks in this article are designed to explore the write, read, random write, random read, and fread, fwrite performance of the SSD. I don’t really know what the results will be prior to running the tests so there are no expectations — it’s really an exploration of the performance.

This article examines the performance of a 64GB Micro Center SSD that uses a SandForce 1222 controller. This is a very inexpensive drive that provides about 60GB of usable space for just under $100 (about $1.67/GB). The specifications on the website state that the drive has a SATA 3.0 Gbps interface (SATA II) and delivers up to 270 MB/s for writes, up to 280 MB/s for reads, and up to 50,000 IOPS.

The highlights of the system used in the testing are:

- GigaByte MAA78GM-US2H motherboard

- An AMD Phenom II X4 920 CPU

- 8GB of memory (DDR2-800)

- Linux 2.6.32 kernel

- The OS and boot drive are on an IBM DTLA-307020 (20GB drive at Ultra ATA/100)

- /home is on a Seagate ST1360827AS

- The Micro Center SSD is mounted as /dev/sdd

I used CentOS 5.4 on this system, but with my own kernel, 2.6.32. Ext4 was used as the file system. The entire device, /dev/sdd, was used for the file system so that it is aligned with page boundaries.

I used IOzone because it is one of the most popular throughput benchmarks. It is open source and written in very plain ANSI C (not an insult but a compliment), and perhaps more importantly, it tests different I/O patterns, which very few benchmarks actually do. It is capable of single-thread, multi-thread, and multi-client testing. The basic concept of IOzone is to break up a file of a given size into records. Records are written or read in some fashion until the file size is reached. Using this concept, IOzone has a number of tests that can be performed. In the interest of brevity, I limited the benchmarks to write, read, random write, random read, fwrite, and fread, all of which are described below.

- Write: This is a fairly simple test that simulates writing to a new file. Because of the need to create new metadata for the file, many times the writing of a new file can be slower than rewriting to an existing file. The file is written using records of a specific length (either specified by the user or chosen automatically by IOzone) until the total file length has been reached.

- Read: This test reads an existing file. It reads the entire file, one record at a time.

- Random Read: This test reads a file with the accesses being made to random locations within the file. The reads are done in record units until the total reads are the size of the file. Many factors impact the performance of this test, including the OS cache(s), the number of disks and their configuration, disk seek latency and disk cache.

- Random Write: The random write test measures the performance when writing a file with the accesses being made to random locations within the file. The file is opened to the total file size, and then the data is written in record sizes to random locations within the file.

- fwrite: This test measures the performance of writing a file using a library function “fwrite()”. It is a binary stream function (examine the man pages on your system to learn more). Equally important, the routine performs a buffered write operation. This buffer is in user space (i.e., not part of the system caches). This test is performed with a record length buffer being created in a user-space buffer and then written to the file. This is repeated until the entire file is created. This test is similar to the “write” test in that it creates a new file, possibly stressing the metadata performance.

- fread: This is a test that uses the fread() library function to read a file. It opens a file and reads it in record lengths into a buffer that is in user space. This continues until the entire file is read.

Other options can be tested, but for this exploration only the previously mentioned tests will be examined.

For IOzone, the system specifications are fairly important since they affect the command-line options. In particular, the amount of system memory is important because this can have a large impact on the caching effects. If the problem sizes are small enough to fit into the system or file system cache (or at least partially), it can skew the results. Comparing the results of one system where the cache effects are fairly prominent to a system where cache effects are not conspicuous is comparing the proverbial apples to oranges. For example, if you run the same problem size on a system with 1GB of memory compared to a system with 8GB you will get much different results.

For this article, cache effects will be limited as much as possible. Cache effects can’t be eliminated entirely without running extremely large problems and forcing the OS to virtually eliminate all caches. However, one of the best ways to minimize the cache effects is to make the file size much bigger than the main memory. For this article, the file size is chosen to be 16GB, which is twice the size of main memory. This is chosen arbitrarily based on experience and some urban legends floating around the Internet.

For this article, the total file size was fixed at 16GB and four record sizes were tested: (1) 1MB, (2) 4MB, (3) 8MB, and (4) 16MB. For a file size of 16GB that is roughly (1) 16,000 records, (2) 4,000 records, (3) 2,000 records, and (4) 1,000 records, respectively (16,384, 4,096, 2,048, and 1,024 if the sizes are counted in binary units). Smaller record sizes would produce a very large number of records and took too long to run, so they are not used in this article.
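The record counts are simple division. The sketch below assumes the size suffixes are binary multiples (1MB = 1,048,576 bytes, 1GB = 1,073,741,824 bytes), which is why the exact counts come out slightly above the round thousands.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def record_count(file_size: int, record_size: int) -> int:
    """Number of records needed to cover a file, assuming an exact fit."""
    if file_size % record_size:
        raise ValueError("file size is not a multiple of the record size")
    return file_size // record_size


if __name__ == "__main__":
    file_size = 16 * GIB
    for record_mb in (1, 4, 8, 16):
        count = record_count(file_size, record_mb * MIB)
        print(f"{record_mb:2d}MB records: {count:,} records per 16GB file")
```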

The command line for the first record size (1MB) is,

./IOzone -Rb spreadsheet_output_1M.wks -s 16G -+w 98 -+y 98 -+C 98 -r 1M > output_1M.txt

The command line for the second record size (4MB) is,

./IOzone -Rb spreadsheet_output_4M.wks -s 16G -+w 98 -+y 98 -+C 98 -r 4M > output_4M.txt

The command line for the third record size (8MB) is,

./IOzone -Rb spreadsheet_output_8M.wks -s 16G -+w 98 -+y 98 -+C 98 -r 8M > output_8M.txt

The command line for the fourth record size (16MB) is,

./IOzone -Rb spreadsheet_output_16M.wks -s 16G -+w 98 -+y 98 -+C 98 -r 16M > output_16M.txt

The options “-+w 98”, “-+y 98”, and “-+C 98” control the dedupability (compressibility) of the data. IOzone uses the term dedupe to describe whether the data is capable of being deduplicated. For the purposes of this test that is basically the same as compressible, so I will use the terms interchangeably. The number “98” in the options means the data is 98 percent dedupable, hence very compressible. These three options allow me to control the compressibility of the data, so I can examine the impact of data compressibility on performance.
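To see why a dedupable percentage can stand in for compressibility, consider a buffer in which a given percentage of the bytes repeat a single pattern and the remainder is random. This sketch is not IOzone's data generator; the buffer layout, the 1 MiB size, and the use of zlib are assumptions chosen only to illustrate the relationship.

```python
import os
import zlib


def make_buffer(dedup_percent: int, size: int = 1 << 20) -> bytes:
    """Build a buffer in which dedup_percent of the bytes are a repeated pattern."""
    repeated = size * dedup_percent // 100
    # Repeated part: all zero bytes. Remainder: random, incompressible bytes.
    return bytes(repeated) + os.urandom(size - repeated)


def compressed_fraction(buf: bytes) -> float:
    """Compressed size as a fraction of the original size."""
    return len(zlib.compress(buf)) / len(buf)


if __name__ == "__main__":
    for percent in (98, 50, 2):
        frac = compressed_fraction(make_buffer(percent))
        print(f"{percent:2d}% repeated -> compressed to {frac:.1%} of original size")
```

Under these assumptions the compressed size tracks the non-repeated share of the data quite closely, which is the sense in which the two terms are used interchangeably here.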

I tested three levels of data compressibility: 98 percent (very compressible), 50 percent, and 2 percent (very incompressible). I wanted to get an idea of the range of performance with these levels of data compressibility, but I didn’t want to get unrealistic results with either 100 percent compressible data or 0 percent compressible data. (Though I’m not really sure if either of those is really possible.)
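With four record sizes and three compressibility levels there are twelve command lines in all. The following sketch only prints them; it does not run IOzone. It reuses the flags shown above and assumes that the same percentage is passed to all three dedupe options at every level, as in the 98 percent case. The output file naming with a percentage suffix is hypothetical.

```python
import shlex

RECORD_SIZES = ["1M", "4M", "8M", "16M"]
DEDUP_LEVELS = [98, 50, 2]


def iozone_command(record_size: str, dedup_percent: int,
                   binary: str = "./IOzone") -> str:
    """Build one IOzone command line as a shell string."""
    tag = f"{record_size}_{dedup_percent}"  # hypothetical file-name suffix
    args = [
        binary, "-Rb", f"spreadsheet_output_{tag}.wks",
        "-s", "16G",
        "-+w", str(dedup_percent),
        "-+y", str(dedup_percent),
        "-+C", str(dedup_percent),
        "-r", record_size,
    ]
    quoted = " ".join(shlex.quote(a) for a in args)
    return f"{quoted} > output_{tag}.txt"


if __name__ == "__main__":
    for percent in DEDUP_LEVELS:
        for size in RECORD_SIZES:
            print(iozone_command(size, percent))
```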

Results

All of the results are presented in bar chart form, with the average values plotted and the standard deviation shown as error bars. For each of the three levels of compressibility (98 percent, 50 percent and 2 percent), I plot the results for the four record sizes (1MB, 4MB, 8MB, and 16MB).

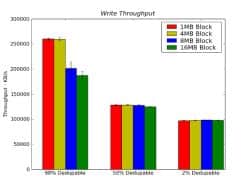

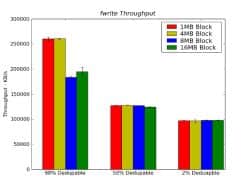

The first throughput result is the write throughput test. Figure 1 below presents the results for the four record sizes and the three levels of dedupability (compressibility). The averages are the bar charts and the error bars are the standard deviation.

Figure 1: Write Throughput Results from IOzone in KB/s

The first obvious thing you notice in Figure 1 is that as the level of compressibility decreases (thus decreasing dedupability), the performance goes down. The best performance for the cases run was for a 1MB record size and 98 percent dedupable data, where the write throughput performance was about 260 MB/s, which is close to the stated specifications for the drive. As record size increases, the performance goes down, so that at a record size of 16MB, the write throughput performance was about 187 MB/s for 98 percent dedupability.

For data that is 50 percent dedupable (compressible), the performance for a record size of 1MB was only about 128 MB/s, and for data that is almost incompressible (2 percent dedupable), the performance for a record size of 1MB was only about 97 MB/s. So the performance drops off as the data becomes less and less compressible (as one might expect). Another interesting observation is that as the data becomes less compressible, there is little performance difference between record sizes.

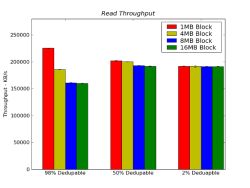

Figure 2 below presents the read throughput results for the four record sizes and the three levels of dedupability (compressibility).

Figure 2: Read Throughput Results from IOzone in KB/s

These results differ from the write results in that the performance does not decrease that much as the level of data compressibility falls. For a 1MB record size, the performance was about 225 MB/s for 98 percent dedupable data, about 202 MB/s for 50 percent dedupable data, and about 192 MB/s for 2 percent dedupable data.

In addition, as the level of compressibility decreases the performance difference between record sizes almost disappears. Compare the four bars for 98 percent dedupable data and for 2 percent dedupable data. At 2 percent the performance for the four record sizes is almost the same. In fact, for larger record sizes, the read performance actually improves as the data becomes less compressible.

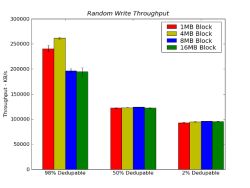

Figure 3 presents the random write throughput results for the four record sizes and the three levels of dedupability (compressibility).

Figure 3: Random Write Throughput Results from IOzone in KB/s

Figure 3 reveals that just like write performance, random write performance drops off as the level of data compressibility decreases. For the 1MB record size, the random write throughput was about 241 MB/s for 98 percent dedupable data, 122 MB/s for 50 percent dedupable data, and about 93 MB/s for 2 percent dedupable data.

In addition, just like the write performance, the performance difference between record sizes is very small as the level of compressibility decreases. So for small levels of dedupable data, the record size had very little impact on performance over the range of record sizes tested.
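The difference between the write-type and read-type results is easier to see as a relative drop from 98 percent to 2 percent dedupable data. The sketch below uses the approximate 1MB-record values quoted in the text so far; the helper name `percent_drop` is hypothetical.

```python
def percent_drop(high: float, low: float) -> float:
    """Relative drop from high to low, as a percentage of high."""
    return 100.0 * (high - low) / high


# Approximate 1MB-record throughput in MB/s, as quoted in the text:
# (98 percent dedupable, 2 percent dedupable)
RESULTS_MB_S = {
    "write": (260, 97),
    "read": (225, 192),
    "random write": (241, 93),
}

if __name__ == "__main__":
    for test, (at_98, at_2) in RESULTS_MB_S.items():
        print(f"{test:12s}: {at_98} -> {at_2} MB/s, "
              f"drop of {percent_drop(at_98, at_2):.1f}%")
```

With these figures the write and random write tests lose a little over 60 percent of their throughput, while the read test loses about 15 percent.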

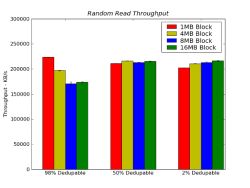

Figure 4 presents the random read throughput results for the four record sizes and the three levels of dedupability (compressibility).

Figure 4: Random Read Throughput Results from IOzone in KB/s

The general trends for random read performance mirror those of the read performance in Figure 2. Specifically:

- The performance drops very little with decreasing compressibility (dedupability).

- As the level of compressibility decreases, the performance for larger record sizes actually increases.

- As the level of compressibility decreases, there is little performance variation between record sizes.

Figure 5 below presents the fwrite throughput results for the four record sizes and the three levels of dedupability (compressibility).

Figure 5: Fwrite Throughput Results from IOzone in KB/s

These test results mirror those of the write throughput test (Figure 1). In particular,

- As the level of compressibility decreases, the performance drops off fairly quickly.

- As the level of compressibility decreases, there is little variation in performance as the record size changes (over the record sizes tested).

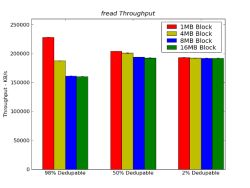

Figure 6 presents the fread throughput results for the four record sizes and the three levels of dedupability (compressibility).

Figure 6: Fread Throughput Results from IOzone in KB/s

Like the two previous read tests (read, random read), fread performance follows the same general trends:

- The performance drops only slightly with decreasing compressibility (dedupability).

- As the level of compressibility decreases, the performance for larger record sizes actually increases.

- As the level of compressibility decreases, there is little performance variation between record sizes.

Summary

SandForce is quickly becoming a dominant player in the fast-growing SSD controller market. It makes SSD controllers for many consumer drives and increasingly, enterprise-class SSDs. One of the really cool features of its controllers is that they do real-time data compression to improve throughput and increase drive longevity. The key aspect to this working for you is that the ultimate performance depends on your data. More precisely — the compressibility of your data.

For this article I took a consumer SSD that has a SandForce 1222 controller and ran some throughput tests against it using IOzone. IOzone enabled me to control the level of data compressibility, which IOzone calls dedupability, so I could test the impact on performance. I tested write and read performance as well as random read, random write, fwrite, and fread performance. I ran each test 10 times and reported the average and standard deviation.

The three write tests all exhibited the same general behavior. More specifically:

- As the level of compressibility decreases, the performance drops off fairly quickly.

- As the level of compressibility decreases, there is little variation in performance as the record size changes (over the record sizes tested).

The absolute values of the performance varied for each test, but for the general write test with a record size of 1MB, the performance went from about 260 MB/s (close to the rated performance) with 98 percent compressible data to about 97 MB/s with 2 percent compressible data.

The three read tests also all exhibited the same general behavior. Specifically:

- The performance drops only slightly with decreasing compressibility (dedupability)

- As the level of compressibility decreases, the performance for larger record sizes actually increases

- As the level of compressibility decreases, there is little performance variation between record sizes

Again, the absolute performance varies for each test, but the trends are the same. But basically, the real-time data compression does not affect the read performance as much as it does the write performance.

The important observation from these tests is that the performance does vary with data compressibility. I believe that SandForce took a number of applications from its target markets, studied the data quite closely, realized that it was pretty compressible, and designed its algorithms for those data patterns. SandForce hasn’t stated which markets it is targeting, so understanding the potential performance impact for your data requires that you study your data. Remember that you’re not studying the compressibility of the data file as a whole but rather the chunks of data that a SandForce-based SSD would encounter. So think small chunks of data. I think you will be surprised at how compressible your data actually is. But it’s always a good idea to test the hardware against your applications.