For the first time, the performance of an I/O bus is faster than the fastest host attachment, so we have now reached an important time in technology history where the bus is fast enough to run any card at rate. More on that in a moment, but first, some background is in order.

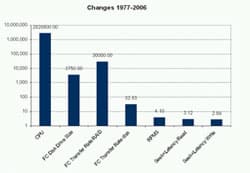

I have often complained about the state of I/O and the data path (see Storage Headed for Trouble). Below I’ve included a chart of the performance increases of various technologies since 1977 (an admittedly arbitrary starting point, but, hey, it was my first year on a computer), to emphasize just how far storage technology is lagging behind.

Chart: I/O Performance Increases of Different Technologies Since 1977

As the chart shows, storage performance lags the other technologies by many orders of magnitude. This trend is not going to change for the foreseeable future, since storage technology has mechanical limits, but PCI-E offers some hope.

PCI-E Design and System Architecture

PCI-E uses a separate serial connection for each direction. The bus is divided into lanes, with each lane supporting 2.5 Gbits per second in each direction. With encoding and error checking, that translates into an effective bandwidth for NICs, HCAs and HBAs of about 250 MB/sec per lane in each direction, which is enough for 2Gb Fibre Channel HBAs. For more information on PCI-E, visit Interfacebus, ars technica and Eurekatech.
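To make the per-lane figure concrete, here is a minimal sketch of the arithmetic. It assumes the overhead is 8b/10b line encoding (8 payload bits for every 10 bits on the wire); the constant and function names are illustrative only.

```python
# Figure from the article: 2.5 Gbit/sec raw signalling per lane, per direction.
RAW_BITS_PER_SEC = 2_500_000_000

# Assumption: 8b/10b line encoding, i.e. 8 payload bits per 10 bits on the wire.
PAYLOAD_BITS = 8
WIRE_BITS = 10


def effective_lane_mb_per_sec(raw_bits_per_sec=RAW_BITS_PER_SEC):
    """Return the one-direction payload rate of one lane in decimal MB/sec."""
    payload_bits_per_sec = raw_bits_per_sec * PAYLOAD_BITS // WIRE_BITS
    payload_bytes_per_sec = payload_bits_per_sec // 8
    return payload_bytes_per_sec // 1_000_000


print(effective_lane_mb_per_sec())  # 250
```

Protocol overhead above the encoding layer is ignored here, so a real card would see somewhat less than this.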

The big concept to take away is lanes. For example, if you want to use 4Gb Fibre Channel and run full duplex with a single-port HBA, you need 400 MB/sec of bandwidth in each direction. In PCI-E terms, that’s two lanes, which together let the HBA run at full rate. You could use a single lane, but you would be limited to 250 MB/sec. That might not be a problem for IOPS-bound workloads such as database index searches.
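The lane count for a given card can be sketched as a simple ceiling division. This is an illustration using the 250 MB/sec per-lane figure above; the helper names are hypothetical.

```python
import math

# Effective per-lane bandwidth in each direction, from the article.
LANE_MB_PER_SEC = 250


def lanes_needed(required_mb_per_sec, lane_mb_per_sec=LANE_MB_PER_SEC):
    """Smallest number of lanes that carries the required one-direction rate."""
    return math.ceil(required_mb_per_sec / lane_mb_per_sec)


def usable_mb_per_sec(required_mb_per_sec, lanes, lane_mb_per_sec=LANE_MB_PER_SEC):
    """Rate the card can actually reach, capped by the lanes it is given."""
    return min(required_mb_per_sec, lanes * lane_mb_per_sec)


print(lanes_needed(400))          # 2 lanes for 4Gb Fibre Channel
print(lanes_needed(200))          # 1 lane for 2Gb Fibre Channel
print(usable_mb_per_sec(400, 1))  # 250, the single-lane cap
```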

Using 400 MB/sec transfer with no other overhead and 16 KB requests would support 25,600 (400 MB per second/16 KB requests) requests per second, while a 250 MB/sec single lane would support 16,000 requests per second. There is additional overhead, so you will never really achieve those rates, but either way, one lane or two lanes far exceed what most servers, HBAs and RAID systems can deliver.
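The request rates above follow from dividing bandwidth by request size. A small sketch, assuming 1 MB = 1,024 KB (which is what the article's numbers imply) and zero protocol overhead:

```python
def requests_per_sec(mb_per_sec, request_kb):
    """Upper bound on requests/sec, treating 1 MB as 1,024 KB and ignoring overhead."""
    return mb_per_sec * 1024 // request_kb


print(requests_per_sec(400, 16))  # 25600, two lanes with 4Gb Fibre Channel
print(requests_per_sec(250, 16))  # 16000, a single lane
```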

So from an IOPS perspective, a single lane and a 4Gb HBA will work just fine, and with a dual-port HBA, one or two lanes will more than saturate most RAID configurations. Assuming that a disk drive can do at most 150 random I/Os per second, you would need a large number of disk drives or cache hits to run at full rate. Since most RAID controllers do not have a command queue 8K entries deep, you would also far exceed the controller's command queue.
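To put "a large number of disk drives" into numbers, here is a rough sketch that assumes every request misses cache and that each drive sustains the 150 random I/Os per second quoted above. The function name is illustrative.

```python
import math

# Upper bound on random I/Os per second for one drive, from the article.
DRIVE_IOPS = 150


def drives_needed(target_requests_per_sec, drive_iops=DRIVE_IOPS):
    """Drives required to serve the target rate with no cache hits at all."""
    return math.ceil(target_requests_per_sec / drive_iops)


print(drives_needed(25_600))  # 171 drives to keep two lanes busy
print(drives_needed(16_000))  # 107 drives to keep one lane busy
```

Cache hits reduce these counts, but the order of magnitude shows why the bus is no longer the limiting factor for IOPS.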

I can’t remember a time when the performance of an I/O bus was faster than the fastest host attachment, so we have reached an important time in technology history where the bus is fast enough to run any card at rate. This assumes a number of things:

- You have enough memory bandwidth to run the bus at full rate: With new 16 lane PCI-E buses on the way, the bandwidth required to run the bus at full rate, full duplex, would be (2.5 Gb/sec × 2 (full duplex) × 16 lanes) / 8 bits per byte, or 10 GB/sec. That is a lot of memory bandwidth, and it is not available today in most Intel and AMD x86 systems.

- Bottlenecks in the system: Many PCI and PCI-X buses from a variety of vendors had performance bottlenecks in the interface between the bus and the memory system. In most cases these design issues restricted bus performance by limiting how fast the bus could read from or write to memory. Occasionally the bus itself was poorly designed, but more often than not the bus-to-memory interface was the problem. Clearly we are upping the required performance of this interface from about 1 GB per second with PCI-X to a much larger number. This requires vendors to examine the design of this interface. Some early testing on a vendor or two suggests to me that some vendors have problems in this area, but the problems are not nearly as bad as they were for PCI or PCI-X.

- New I/O cards: With a new generation of buses, new I/O cards must be developed. These include, but are not limited to, Fibre Channel, InfiniBand, and Ethernet (1Gb and 10Gb). Testing these cards for streaming performance is difficult. Finding test equipment is not that difficult, but finding people with the knowledge of the hardware and software stack where the card is placed is not that easy. If the card streams and has great IOPS performance, that’s great, but if there is a bottleneck, it will be a hard thing to debug: It could be the application, operating system, I/O driver, card driver, PCI-E bus, memory bandwidth or other issue in the data path. I was involved in some early Fibre Channel testing back in the 1990s, and there were often a few things in the data path that needed work to achieve the rate the vendor claimed we could get.
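The memory bandwidth figure in the first assumption above can be reproduced with a short calculation. The sketch below computes the raw number from the article and, as an added assumption, the lower payload figure implied by the 250 MB/sec effective per-lane rate; function names are illustrative.

```python
def raw_bus_gb_per_sec(lanes, gbits_per_lane=2.5, directions=2):
    """Raw signalling rate of the whole bus in GB/sec (8 bits per byte)."""
    return gbits_per_lane * directions * lanes / 8


def effective_bus_gb_per_sec(lanes, lane_mb_per_sec=250, directions=2):
    """Payload rate of the whole bus in GB/sec, using the effective lane rate."""
    return lane_mb_per_sec * directions * lanes / 1000


print(raw_bus_gb_per_sec(16))        # 10.0, the article's figure
print(effective_bus_gb_per_sec(16))  # 8.0 once encoding overhead is removed
```

Either way, the memory system has to move several gigabytes per second on behalf of a single slot, which is the point of the first assumption.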

What Should an Architect Do?

PCI-E is clearly a better choice than PCI-X for both streaming I/O performance and IOPS. PCI-E is even taking over lower-end PCs from Dell, HP and others.

But what about server vendors with large SMP systems? Where is the PCI-E bus? The problem is that large SMP servers have much greater memory bandwidth and a more complex memory infrastructure. It is pretty simple to design a PCI-E bus to fan in and out of memory when you are working on a single board. The job gets way more complex for large systems that have many buses and memory interconnections that cross boards. While these vendors should have thought about this ahead of time with each new generation of server, it is still not a simple problem. Most of the large SMP server vendors are using PCI-X technology today and not PCI-E. This is a problem for a number of reasons:

- PCI-E and PCI-X NICs, HBAs and HCAs are not interchangeable because of card size and voltage. This means that you will have to have two sets of spares: one for your blade systems running PCI-E, and one for your large SMPs.

- SMP I/O performance will not be as good as a blade's. High-end home PCs have 8 lane PCI-E slots for I/O and 16 lane PCI-E slots for graphics. Add that up and you have more bus bandwidth than memory bandwidth in many of these systems, but the raw bandwidth is equivalent to that of almost 12 PCI-X slots running at full rate.
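One way to arrive at a figure close to 12 PCI-X slots is sketched below. It is a reconstruction, not necessarily the calculation behind the comparison: it assumes one 8 lane slot plus one 16 lane slot, 250 MB/sec per lane in each direction, full duplex, and a PCI-X slot at the roughly 1 GB/sec mentioned earlier.

```python
# Effective per-lane rate in each direction, from the article.
LANE_MB_PER_SEC = 250

# Assumption: one PCI-X slot at "about 1 GB per second", taken as 1,000 MB/sec.
PCIX_MB_PER_SEC = 1000


def full_duplex_mb_per_sec(lanes, lane_mb_per_sec=LANE_MB_PER_SEC):
    """Combined bandwidth of both directions for a slot with this many lanes."""
    return lanes * lane_mb_per_sec * 2


def pcix_slot_equivalents(slot_lanes, pcix_mb_per_sec=PCIX_MB_PER_SEC):
    """How many full-rate PCI-X slots match the summed PCI-E slot bandwidth."""
    total = sum(full_duplex_mb_per_sec(lanes) for lanes in slot_lanes)
    return total / pcix_mb_per_sec


print(pcix_slot_equivalents([8, 16]))  # 12.0
```

A slightly higher PCI-X figure than 1,000 MB/sec would bring the result just under 12.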

PCI-E will be the state-of-the-art bus technology for a number of years. It is unfortunate that many of the large SMP vendors didn’t plan for the future so that current products would have PCI-E. Designing these changes is complex, but I/O for many of these vendors seems to be an afterthought. As end users, perhaps we need to do more to emphasize the importance of I/O to the large SMP vendors.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 26 years of experience in high-performance computing and storage.
