Then SATA drives came along, with significantly higher density but also higher failure rates and lower performance, and RAID-6 designs soon followed to enable their use in higher-end and performance environments.

With RAID-6 growing in popularity, it's a good time to look at the issues to weigh when evaluating RAID controllers for RAID-6 compared to RAID-5.

Driving the Problem

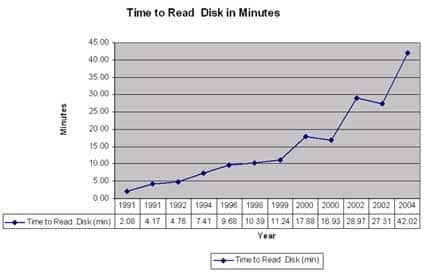

The time to read a single drive has gotten significantly longer over time, as you can see in the chart below:


The main reason for this is that disk drive density has grown much faster than performance. For enterprise disks (SCSI and Fibre Channel drives), capacity has gone from 500MB to 300GB since 1991, an increase of 600 times. During the same period, the maximum transfer rate has gone from 4 MB/sec to 125 MB/sec, an increase of 31.25 times. If drive performance had increased at the same rate as density, drives today could read or write at about 2.4 GB/sec. That would be great, but it is not likely to happen anytime soon.
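The arithmetic in this paragraph can be checked with a few lines of Python. This is a minimal sketch that assumes decimal units (1 GB = 1000 MB) and treats the time to read a whole drive at its maximum rate as a best-case floor for a rebuild; real rebuilds also compete with host I/O and can be slower.

```python
# Figures quoted above for enterprise SCSI/FC drives (decimal units assumed).
MB_PER_GB = 1000

capacity_1991_mb = 500
capacity_now_mb = 300 * MB_PER_GB
rate_1991_mbps = 4
rate_now_mbps = 125

density_growth = capacity_now_mb / capacity_1991_mb  # 600.0
rate_growth = rate_now_mbps / rate_1991_mbps         # 31.25

# Transfer rate a drive would have if speed had kept pace with capacity.
hypothetical_rate_gbps = rate_1991_mbps * density_growth / MB_PER_GB  # 2.4


def full_read_seconds(capacity_mb, rate_mbps):
    """Best-case time to read (or rebuild onto) a whole drive at its maximum rate."""
    return capacity_mb / rate_mbps


t_1991 = full_read_seconds(capacity_1991_mb, rate_1991_mbps)  # 125 s
t_now = full_read_seconds(capacity_now_mb, rate_now_mbps)     # 2400 s (40 minutes)

print(f"density x{density_growth:.0f}, rate x{rate_growth:.2f}, "
      f"pace-matched rate {hypothetical_rate_gbps:.1f} GB/s")
print(f"full-drive read: {t_1991:.0f} s then, {t_now / 60:.0f} min now")
```

The last line makes the point of the chart concrete: reading a full drive went from about two minutes to about 40 minutes, even before any host load is added.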

So it's clear that the time to rebuild RAID LUNs has increased dramatically. There is another point to consider. Look back at 1996, for example, near the introduction of 1Gb half-duplex FC, when a typical drive had a transfer rate of 16 MB/sec and a capacity of 9GB. From 1996 to today, the maximum performance of a drive has gone up 7.8 times and the density has gone up 33.33 times, while the channel rate to a single drive has increased only four times (from 1Gb to 4Gb FC). Yes, we now have full duplex, but in 1996 a single FC channel could support a maximum of 6.25 drives reading or writing at full rate; today that number is 3.2. I am not aware of any significant changes in enterprise drives that will alter these trends. Adding SATA drives to the mix only exacerbates these problems, since those drives are denser and their transfer rates are lower. I believe this became the driving reason for RAID-6, since the risk of data loss with RAID-5 increased as density increased.
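The drives-per-channel figures follow from dividing channel bandwidth by drive bandwidth. The sketch below assumes roughly 100 MB/sec of usable bandwidth per direction for 1Gb FC and 400 MB/sec for 4Gb FC; those round numbers are an assumption, chosen because they reproduce the 6.25 and 3.2 figures in the text.

```python
# Assumed usable MB/s per direction; round figures, not values from an FC spec.
FC_MBPS = {"1Gb": 100, "4Gb": 400}


def drives_per_channel(channel_mbps, drive_mbps):
    """Drives that can stream at full rate before one FC channel saturates."""
    return channel_mbps / drive_mbps


then = drives_per_channel(FC_MBPS["1Gb"], 16)   # 1996: 16 MB/s, 9 GB drives
now = drives_per_channel(FC_MBPS["4Gb"], 125)   # today: 125 MB/s, 300 GB drives

drive_rate_growth = 125 / 16                        # about 7.8x
density_growth = 300 / 9                            # about 33.33x
channel_growth = FC_MBPS["4Gb"] / FC_MBPS["1Gb"]    # 4x

print(f"drives per channel: {then:.2f} (1996) vs {now:.1f} (today)")
print(f"growth: rate x{drive_rate_growth:.1f}, density x{density_growth:.2f}, "
      f"channel x{channel_growth:.0f}")
```

Because the channel grew more slowly than the drives it feeds, fewer drives fit on each channel at full rate, which is exactly the squeeze RAID-6 adds to.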

RAID Controller Performance

Given all that, it is clear that, at least for the foreseeable future, RAID-6 will become more common for Fibre Channel, because denser drives behind relatively slower interfaces take longer to rebuild. Add the use of SATA drives and it is clear that RAID-6 is here to stay until someone figures out something better.

The problem is that RAID-6 requires more controller resources to calculate the additional parity, more bandwidth to write it, and, for some vendors, more bandwidth to read it as well. The amount of extra bandwidth depends on the RAID-6 configuration. For example, an 8+1 RAID-5 LUN needs the bandwidth of nine drives, while an 8+2 RAID-6 LUN needs the bandwidth of 10 drives, 11 percent more. A 4+1 RAID-5 LUN needs the bandwidth of five drives, while a 4+2 RAID-6 LUN needs a sixth drive's worth, 20 percent more. Almost every RAID controller can surely absorb 20 percent more bandwidth for a single LUN, but what if all of the LUNs in the system were RAID-6?
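The overhead percentages are just the ratio of drives touched by a write. A minimal sketch, assuming every write is a full-stripe write that updates all data and parity drives (partial-stripe read-modify-write traffic is ignored):

```python
def drives_touched(data, parity):
    """Drives whose bandwidth a full-stripe write consumes."""
    return data + parity


def raid6_overhead_pct(data):
    """Extra back-end bandwidth of data+2 RAID-6 over data+1 RAID-5, in percent."""
    r5 = drives_touched(data, 1)
    r6 = drives_touched(data, 2)
    return (r6 / r5 - 1) * 100


for d in (8, 4):
    print(f"{d}+1 -> {d}+2: {drives_touched(d, 1)} -> {drives_touched(d, 2)} drives, "
          f"+{raid6_overhead_pct(d):.0f}% bandwidth")
```

Under the same full-stripe assumption, the ratio is per LUN, so converting every identical LUN in the system to RAID-6 raises total back-end load by the same percentage.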

Does your controller have 11 percent or 20 percent more computational resources to calculate parity, and that much more bandwidth from the controller to all of the disk trays? Add the potential for RAID reconstruction and you might be trying to run the RAID controller faster than it was designed to run. I think it is important for everyone considering RAID-6 to understand some of the design issues in RAID controllers, so they can judge whether what they are buying will meet their performance needs. I am not going to address the differences between FC and SATA drives, since I have already covered them (see The Real Cost of Storage).
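To see why the second parity costs more processor work than the first, it helps to look at one widely used RAID-6 scheme, P+Q parity as implemented in the Linux md driver. P is the same bytewise XOR that RAID-5 uses; Q multiplies each data block by a different power of a generator in the Galois field GF(2^8) before combining. Vendors do not all use this scheme (some use diagonal or other parity layouts), and real controllers use lookup tables or dedicated hardware rather than the bit-by-bit loop below, so treat this as an illustration of the extra work, not of any particular product.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:      # reduce back into 8 bits
            a ^= 0x11D
        b >>= 1
    return product


def gf_pow(a, n):
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a):
    """Inverse of a nonzero byte: a^254, because a^255 == 1 in GF(2^8)."""
    return gf_pow(a, 254)


def raid5_parity(blocks):
    """P parity (the RAID-5 calculation): bytewise XOR of all blocks."""
    p = bytearray(len(blocks[0]))
    for block in blocks:
        for j, byte in enumerate(block):
            p[j] ^= byte
    return bytes(p)


def raid6_q(blocks):
    """Q syndrome: XOR of g^i * D_i with generator g = 2 (one GF multiply per byte)."""
    q = bytearray(len(blocks[0]))
    for i, block in enumerate(blocks):
        coeff = gf_pow(2, i)
        for j, byte in enumerate(block):
            q[j] ^= gf_mul(coeff, byte)
    return bytes(q)


def rebuild_from_q(blocks, q):
    """Recover the single missing data block (marked None) from Q alone,
    e.g. when the P drive has also failed."""
    lost = blocks.index(None)
    zeroed = [bytes(len(q)) if b is None else b for b in blocks]
    partial = raid6_q(zeroed)  # Q without the lost block's term
    inv = gf_inv(gf_pow(2, lost))
    # Q ^ partial == g^lost * D_lost, so multiply by the inverse of g^lost.
    return bytes(gf_mul(inv, qb ^ pb) for qb, pb in zip(q, partial))
```

Computing P costs one XOR per byte per drive; computing Q adds a Galois-field multiply per byte per drive, and that is the extra work a controller has to sustain at full write rate.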

Checking RAID Performance

When evaluating RAID controllers, there are two parts to examine to ensure they can meet the performance requirements of RAID-6 as well as RAID-5, since almost every midrange RAID vendor has designed its controllers around RAID-5 performance requirements rather than RAID-6. The first is the performance of the processor that calculates the parity; the second is the performance of the back-end channels.

The performance of the processor should be fairly easy to measure. Ask the vendor to set up a test with a single disk tray, or at most four disk trays. Before you begin, you need to know the number of back-end connections and the performance of those connections. For example, if there are four back-end 4Gb FC connections, you will need to match them with four FC HBAs and a system, or systems, that can run those HBAs at full rate. In other words, the front-end performance (from server to RAID controller) must match the back-end performance (from RAID controller to the disk trays). Create a 4+1 LUN and a 4+2 LUN and use a program that can write to the raw device with multiple threads, such as xdd from www.ioperformance.com. Write performance to the 4+1 LUN should be the same as to the 4+2 LUN.
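Tools such as xdd are built for this job. To show what "multiple threads writing to the raw device" involves, here is a minimal, hypothetical sketch (`parallel_write_mbps` is not part of xdd or any library): each thread writes its own contiguous region with `os.pwrite`, and the aggregate rate includes an `fsync` at the end. It goes through the operating system's cache and is Unix-only, so it is no substitute for a purpose-built tool, and pointing it at a raw device destroys the data on that device.

```python
import os
import threading
import time


def parallel_write_mbps(path, threads=4, chunk_bytes=1 << 20, chunks_per_thread=64):
    """Write a distinct contiguous region per thread; return aggregate MB/s.

    WARNING: if `path` is a raw device, its contents are overwritten.
    """
    payload = b"\xa5" * chunk_bytes
    region_bytes = chunk_bytes * chunks_per_thread
    fd = os.open(path, os.O_WRONLY | os.O_CREAT, 0o600)

    def worker(index):
        offset = index * region_bytes  # each thread owns its own region
        for _ in range(chunks_per_thread):
            view = memoryview(payload)
            while view:  # pwrite may write fewer bytes than requested
                written = os.pwrite(fd, view, offset)
                view = view[written:]
                offset += written

    try:
        workers = [threading.Thread(target=worker, args=(i,)) for i in range(threads)]
        start = time.perf_counter()
        for w in workers:
            w.start()
        for w in workers:
            w.join()
        os.fsync(fd)  # include the time to flush data out of the OS cache
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return threads * region_bytes / 1e6 / elapsed
```

Running it once against the 4+1 LUN and once against the 4+2 LUN, with the same thread count, gives the two numbers the test compares.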

Now do the same for as many LUNs as you have the performance to run at full rate. Determine full rate in two ways: take the maximum performance of the disk drives in the LUN using the outer cylinders, and ask the vendor for the maximum performance to the disk tray. Use the lower of these two numbers. Repeat using 8+1 and 8+2 LUNs, again for as many LUNs as you can run at full rate. Write performance, where parity performance is critical, should be the same for RAID-5 and RAID-6. If it is not, then the parity processor is not fast enough, the back end of the RAID controller is not well designed, or both.
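The full-rate target and the RAID-5 versus RAID-6 comparison can be written down as two small functions. Both are hypothetical helpers, and a few choices are assumptions rather than part of the text: the caller decides which drives count toward the drive-side ceiling (counting only data drives gives the rate hosts can actually write), each LUN is assumed to sit in its own tray, and a 5 percent tolerance is used before flagging the RAID-6 result.

```python
def expected_full_rate(drives, drive_outer_mbps, tray_max_mbps, luns=1):
    """Aggregate 'full rate' for a test run, in MB/s.

    Per LUN, take the lower of the drives' combined outer-cylinder rate and
    the vendor's stated maximum to the disk tray, then scale by LUN count.
    Assumes one LUN per tray, so the tray limit applies to each LUN.
    """
    per_lun = min(drives * drive_outer_mbps, tray_max_mbps)
    return per_lun * luns


def parity_bottleneck(raid5_mbps, raid6_mbps, tolerance=0.05):
    """True if RAID-6 writes trail RAID-5 writes by more than `tolerance`.

    A True result points at the parity processor, the back end, or both.
    """
    return raid6_mbps < raid5_mbps * (1 - tolerance)
```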

Determining whether the processor is at fault is difficult, and these days it is the less likely cause, since high-performance processors are quite cost-effective. Designing the back end of a RAID controller, on the other hand, is complex, so that is usually the culprit. Today most RAID controllers have an FC fabric connection to each disk tray and use FC-AL, SATA or SAS connections to the drives within the tray. The first thing to understand is the ratio of the bandwidth from the RAID controller to the host(s) to the bandwidth from the cache to the disk trays. For midrange controllers this ratio is often between 1 to 1 and 1 to 4, and sometimes more (with more bandwidth on the disk-tray side). Remember, RAID-6 uses more bandwidth, since the second parity drive is written and, for many vendors, read.

Take the following example. Let's say you have four 4Gb FC connections for front-end performance and six channels from the cache to the disk trays (a ratio of 1 to 1.5).


The RAID controller described above would use all of the available back-end bandwidth for four 4+2 LUNs. This is neither good nor bad; it is simply a fact that RAID-6 uses more bandwidth than RAID-5. Some vendors address this bandwidth issue for reads by not reading all of the parity drives, so the problem only arises on writes; other vendors take other approaches. Of course, this is the worst case for streaming I/O. The same concepts apply to IOPS, but there the issues are seek time and latency rather than bandwidth.
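The example of four 4Gb FC front-end ports and six back-end channels can be checked with back-of-the-envelope arithmetic. This sketch assumes about 400 MB/sec per 4Gb FC link (consistent with the 3.2 drives at 125 MB/sec figure earlier), host ports running at full rate, and full-stripe writes spread across four LUNs, so each megabyte of user data drags its share of parity to the back end.

```python
FC4_MBPS = 400  # assumed usable MB/s per 4Gb FC link


def backend_demand_mbps(host_write_mbps, data, parity):
    """Back-end MB/s needed for full-stripe writes at the given host rate."""
    return host_write_mbps * (data + parity) / data


front_end = 4 * FC4_MBPS  # four 4Gb FC host ports
back_end = 6 * FC4_MBPS   # six cache-to-tray channels (1 to 1.5)

raid5 = backend_demand_mbps(front_end, 4, 1)  # four 4+1 LUNs
raid6 = backend_demand_mbps(front_end, 4, 2)  # four 4+2 LUNs

for name, need in (("4+1", raid5), ("4+2", raid6)):
    print(f"{name}: needs {need:.0f} of {back_end} MB/s back-end ({need / back_end:.0%})")
# 4+1: needs 2000 of 2400 MB/s back-end (83%)
# 4+2: needs 2400 of 2400 MB/s back-end (100%)
```

With RAID-5 there is headroom left for a rebuild; with RAID-6 the back end is already full before any reconstruction traffic is added.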

Plan Ahead

RAID-6 requires more bandwidth than RAID-5 and could affect the performance of your RAID controller. The write load in my example can come not only from users writing from a host, but also from the controller reconstructing a LUN. It is important, especially for RAID-6, to understand the back-end performance of the RAID controller and relate it to the planned LUN configuration. For midrange products, cache-to-host performance is always lower than cache-to-disk performance, and this is now also the case for enterprise products.

What this all means is that before you buy any RAID controller, you should understand the planned configuration and how much I/O the hosts need, and add in the potential for a rebuild. RAID-6 configurations need special consideration, since they require more bandwidth between the cache and the disk trays than RAID-5.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 27 years of experience in high-performance computing and storage.
