Many people have said RAID is dead. But just like the Phoenix, RAID may rise from the dead thanks to some new technologies.

One of the more recent and interesting of those technologies is Dynamic Disk Pools (DDP) from NetApp. An examination of DDP illustrates the problem with conventional RAID and what can be done to make it useful into the future.

The Problem with RAID

RAID is one of the most ubiquitous storage technologies. It allows you to combine storage media into groups to give more capacity, increased performance and/or increased resiliency in the case of drive failure. The cost is that for most RAID levels we lose some of the total capacity of the RAID group because parity information has to be stored or a mirror has to be constructed.

However, like many of us, RAID is showing its age.

Using RAID-5 or RAID-6, you can lose a drive and not lose any data. When a drive is lost in a RAID-5 or RAID-6 group, all of the remaining drives in the group are read so that the data can be reconstructed via the remaining drives and parity information.

For example, if you have ten 3TB drives in a RAID group (RAID-5 or RAID-6) and you lose a drive, you have to read every single block of the remaining nine drives. This is a total of 27TB of data to reconstruct 3TB of data. Reading this much data can take a very long time. The fact that all remaining nine drives are writing to one target spare drive doesn’t help reconstruction performance either. Moreover, while this reconstruction is happening, the RAID group may still be in use, further slowing down the reconstruction. Plus, in the case of RAID-5, if we lose another drive then we can’t recover any of the data (with RAID-6 we could tolerate the loss of one more drive).

While we now have 3TB and 4TB drives, 5TB and 6TB drives are on the way. In the case of a RAID-5 or RAID-6 group, if we have ten drives that are 6TB each, then the amount of data that needs to be read to recover from a single drive failure is 9*6 or 54TB of data to recover a single 6TB drive. Remember that drive speeds aren’t really getting any faster, so the amount of time to read the remaining drives is much greater than it is today (i.e. the read performance stays the same but the amount of data to be read increases).

Moreover we also have the problem that the URE (Unrecoverable Read Error) rate for drives has not increased. The URE describes the amount of data that is read before hitting a read error (i.e. the controller cannot read a particular block). This is particularly important when a RAID rebuild is initiated because the entire capacity of all of the remaining drives in the group have to be read — even if there is no data in the blocks.

In some cases, this can push the probability of hitting a URE to almost 1 (i.e. guaranteed to hit a block that can’t be read), meaning that the rebuild will fail. In the case of RAID-5, this means that the rebuild has failed, and the data has to be restored from a backup. In the case of a RAID-6 you now have 2 failures — the first failed disk and the disk that gave a URE. The RAID-6 rebuild can continue, but it has now lost its protection. If there is another error, then the RAID group is lost and the data must be restored from a backup.

Of course, storage companies have recognized the limitations of conventional RAID, and they have created some techniques for allowing the rebuild process to proceed in the event of a URE. For example, in the case of RAID-6 with a failed drive, you still have one parity drive, so a URE won’t stop a reconstruction. This allows controllers to continue reconstructing the RAID group. But really this is just an application of RAID-6 (the ability to lose 2 drives).

One other idea that I haven’t seen yet but that might exist is the ability to skip the block being reconstructed when a URE is encountered. The array would finish the reconstruction but notify the user which block is bad. Of course, the file associated with the failed block has not been fully reconstructed, but this does allow you to reconstruct all of the other data. This is much better than having to restore 54TB of data from a backup or a copy because ideally you could restore just the file(s) that were affected by the bad block.

So the big problems for conventional RAID groups are (1) as the drives get larger, the number of drives in a RAID group needed to encounter a URE, decreases (true for both RAID-5 and RAID-6 groups), and (2) the rebuild times are getting extraordinary long because read and write performance stays the same but the RAID groups have more data.

The combination of these issues, as well as others, have not painted a rosy picture for RAID. As drives have gotten bigger, the outlook for RAID has gotten gloomier. Many people have predicted its demise for years. But there are technologies that can help improve the situation. In this article I want to examine one such technology, Dynamic Disk Pools (DDP), that shows a great deal of promise in helping RAID be a viable technology into the next generation.

NetApp’s Dynamic Disk Pools

NetApp has developed a concept called Dynamic Disk Pools (DDP) as a way to improve on all of the problems that conventional RAID suffers. The concept is pretty simple – continue using RAID concepts but on a more granular level as opposed to the whole disk. In the latest version of NetApp’s SANtricity software, the company incorporated DDP which uses RAID-6 concepts on a granular level. DDP allows you to combine all of the drives behind a controller to create a pool of storage. It’s just like the classic RAID-5 or RAID-6, but you can use all of the drives in a single pool rather than breaking them into RAID-5 or RAID-6 groups and combining them with lvm (logical volume manager) or some other technique. To better understand how this works we need to dive deeper into the DDP layout.

The lowest level of DDP is called a D-Piece. A D-Piece is a contiguous 512MB section within a disk that is constructed from 4,096 128KB segments. The next level up, called a D-Stripe, is built from ten (10) D-Pieces. Therefore a D-Stripe has a total of 5,120 MB of raw space. The D-Pieces are selected from various drives in the pool using a pseudo-random algorithm. Each D-Stripe is basically a RAID-6 group that with 8+2 configuration (8 data and 2 parity chunks). This means that we have 8*512MB = 4,096 MB of useable space in a D-Stripe. Finally, a volume is constructed from the D-Stripes to meet the desired capacity.

In DDP, we’re still using RAID concepts, in this case, RAID-6. But instead of using the entire drive as one of the chunks for RAID-6, DDP breaks the drives into pieces and creates RAID-6 stripes across all of the disks.

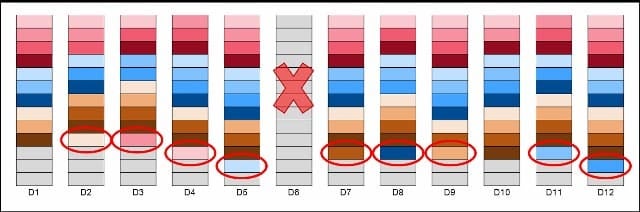

To better see this, Figure 1, provided courtesy of NetApp, illustrates how a DDP is built from 12 drives.

In Figure 1, each block is a D-Piece (512MB). The same color D-Pieces constitute a D-Stripe. For example, all of the pink D-Pieces form one D-Stripe, all of the light blue D-Pieces form another D-stripe, all of the yellow D-Pieces form a third D-Stripe, and the darker red D-Pieces form another D-Stripe and so on.

The D-Pieces in a particular D-Stripe are selected in a somewhat random fashion so that a particular layout is not repeated. The selection process tries to balance capacity across the drives while maintaining the pseudo-random layout. This insures that the pure random case where all of the data on two D-Stripes is on the same ten drives never happens.

In a production system, a Dynamic Disk Pool is created by first defining how many drives are in the pool. Then you create a volume by just defining the capacity you need. The software creates all of the D-Pieces followed by the D-Stripes across the drives in the pool. The volume is built from a number of D-Stripes to match the desired capacity as closely as possible using integer units of D-Stripes. You can create a number of volumes in the same DDP as long as you have enough capacity in the DDP.

Let’s take a look at what happens if we lose a drive in a Dynamic Disk Pool. Figure 2 below, provided courtesy of NetApp, illustrates what happens when we lose drive 6 (D6).

This loss interrupts a number of D-Stripes that had D-Pieces on the failed drive. Therefore, this requires that the affected D-Stripes be reconstructed. In general, a Dynamic Disk Pool always has some spare capacity in case of drive failure. Also, you don’t have to use all of the space in a DDP in volumes, leaving some possible additional spare capacity as well. This spare capacity is utilized during the reconstruction. Note that reconstruction in DDP is different than the classical RAID reconstruction because the D-Pieces and D-Stripes have to be regenerated while everything is rebalanced across the drives to ensure the pseudo-randomness of the data distribution.

In a “normal” RAID-6 with an 10+2 layout, only 11 drives participate in the reconstruction when one drive fails and all of them write to a single spare drive “target.” The reconstruction time is limited by the number of drives and the write speed of one of the drives.

In the case of DDP with the same number of drives, the entire pool of drives participate in the reconstruction. This means we get a larger number of drives working on the reconstruction for both reads and writes. Plus the regeneration and rebalancing happen in parallel, further improving performance. All of this means DDP reconstruction goes faster than classic RAID-6.

A key thing to notice is that unlike classic RAID reconstruction, DDP’s reconstruction only reads the required D-Pieces for the affected D-Stripes. The D-Pieces in the unaffected D-Stripes are not read. This means we don’t have to read entire drives, keeping us much further away from the URE danger zone.

During the reconstruction, priority is given to any D-Stripe missing two D-Pieces to lessen the chance of another failure that might make recovery of the affected D-Stripes impossible. Remember that the D-Stripes are built using RAID-6. Now that two pieces of the RAID-6 group are gone, you are vulnerable to data loss in the event of a failure of a third piece. Since the data is in the controller, both D-Pieces are regenerated at the same time, fully restoring the D-Stripe. This makes the window of vulnerability for any D-Stripe that has lost two D-Pieces very small.

In the case of a lost drive, the mean number of D-Stripes with two more affected D-Pieces is fairly low. This means that a reconstruction of the “critical” D-pieces can happen quickly (remember that they are only 512MB in size). The regenerations happen so quickly that additional drives could fail within minutes without data loss. This is very important to realize — using DDP you can lose more than 2 drives. So while RAID-6 is used at the lower level, the design of DDP allows us to tolerate the loss of more than two drives without data loss.

Netapp says that with twelve 1TB drives (twelve is the minimum number of drives in a DDP), the rebuild time for a classic RAID-6 group (10+2) is almost eleven hours for a particular chassis. The same time for a DDP can be as low as seven hours for a complete reconstruction. While this may not seem like a huge improvement, it is about a 36% decrease in rebuild time.

Also, remember that this is a complete recovery. Having RAID-6 redundancy on some of the impacted D-Stripes happens very quickly, giving you possible protection from any more failed drives. For a classic RAID-6 group you have to wait eleven hours to get complete RAID-6 protection. Whereas with DDP you get some D-Stripes with complete RAID-6 protection very quickly and the number of D-Stripes with full RAID-6 protection increases with time.

You can compare the classic RAID-6 with DDP in terms of resiliency fairly easily. As soon as one drive is lost, the classic RAID-6 operates in degraded mode (only one drive for resiliency). But the classic RAID-6 doesn’t get full redundancy until the very end when the reconstruction is finished. DDP regenerates and rebalances very quickly, so the parts of DDP that have RAID-6 protection grows with time.

Observations

There are several technologies that allow us to extend or replace the benefits of RAID into the future. The example examined here, Dynamic Disk Pools from NetApp, give us a number of benefits. First, DDP is very easy to understand because it is still RAID. However, it isn’t fixed to a specific set of drives in creating RAID groups. Rather, it spreads the underlying RAID “chunks” making up the RAID-6 8+2 group from across a random set of drives in the pool.

Second, in the event of a drive failure, we can get a much larger number of drives involved in the rebuild with DDP. And they all do both read and write, eliminating the single-drive write performance limitation. This can greatly reduce recovery times.

Third, during a reconstruction, only the missing D-Pieces are regenerated. Remember, in a classic RAID-6 you have to read the entire contents of all of the remaining drives even if all of the contents are not being used, perhaps getting us dangerously close to the URE limit.

Moreover, in classic RAID-6 you don’t regain the full RAID-6 protection until the reconstruction is finished (i.e. it’s not 100% until everything is done). In the case of DDP, you quickly regain full RAID-6 protection on some of the D-Stripes. As recovery proceeds, a larger percentage of the total pool has full RAID-6 protection. So it’s not an “all or nothing” proposition.

Additionally, in the case of DDP it is very easy to create a volume with the needed capacity, leaving some spare capacity in the pool. Then when you need the capacity, you just add it to the pool and DDP dynamically takes care of the underlying storage for you (but naturally you have to extend the file system). With classic RAID we would need to create a new volume, add it to the existing pool using something like lvm and then grow the file system.

And finally, the use of DDP means that you can lose more than two drives in a pool without data loss. When classic RAID-6 loses more than two drives in a LUN, then the LUN cannot be rebuilt, and the data has to be restored from a backup or copy. In the case of DDP, several drives can be lost in a single pool without losing data.

But everything is not as perfect as it would seem (nothing ever is). When a drive is lost in a DDP, a larger number of drives participates in the reconstruction, which can adversely impact some performance aspects. In NetApp’s Dynamic Disk Pools, you can turn down the “priority” of the reconstruction so the impact of performance is less. Or you can turn up the priority to finish the reconstruction as quickly as possible. It’s really up to you which approach is more important to you.

Summary

RAID, as it is currently works, is not long for this world. With drives getting larger at a rapid clip while the drive speed and the URE rate stay pretty stagnant, classic RAID levels are not going to last much longer. There have been a number of approaches for “fixing” RAID that give us similar features without the problems. Some of these approaches can be very complicated and expensive, which can possibly explain why they haven’t gotten widespread adoption.

In this article, I examined one approach that applies RAID concepts but on a more granular level). NetApp’s Dynamic Disk Pools use the concept of RAID to create a solution that allows you to easily combine all of the drives into a single pool. It also provides protection for losing more than two drives (which causes a RAID-6 group to fail). The nice thing about DDP is that it is very easy to understand, so we don’t have to dump all of our RAID knowledge built over the years.

Approaches such as DDP will become increasingly important as drives get larger and the amount of data we generate increases almost exponentially. Classic RAID will have to give way to other concepts, such as DDP, or we run the risk of losing our data.