PCI Express, often called PCI-E, has important implications for system architecture that you will need to consider as you design architectures around this new bus structure. Already the technology has made possible the growing popularity of InfiniBand-based storage and connections (see InfiniBand: Is This Time for Real?).

For the first time, an I/O bus is faster than the fastest host attachment, so we have reached an important point in technology history: the bus is fast enough to run any card at full rate. More on that in a moment, but first, some background is in order.

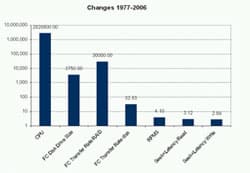

I have often complained about the state of I/O and the data path (see Storage Headed for Trouble). Below I’ve included a chart of the performance increases of various technologies since 1977 (an admittedly arbitrary starting point, but, hey, it was my first year on a computer), to emphasize just how far storage technology is lagging behind.

I/O Performance Increases of Different Technologies Since 1977

As the chart shows, storage performance lags the other technologies by many orders of magnitude. This trend is not going to change for the foreseeable future, since storage technology has mechanical limits, but PCI-E offers some hope.

PCI-E Design and System Architecture

PCI-E uses a separate serial connection for each direction. The bus is divided into lanes, with each lane supporting 2.5 Gbits per second in each direction. After encoding and error checking, that translates into an effective bandwidth for NICs, HCAs and HBAs of about 250 MB/sec per lane in each direction, which is enough for a 2Gb Fibre Channel HBA. For more information on PCI-E, visit Interfacebus, Ars Technica and Eurekatech.
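The per-lane figure can be checked with a little arithmetic. The sketch below assumes the overhead comes from the 8b/10b line encoding used by first-generation PCI-E (10 bits on the wire for every 8 bits of data) and uses decimal megabytes. The function name and constants are illustrative only.

```python
RAW_LANE_GBITS = 2.5  # raw signaling rate per lane, per direction (from the text)


def effective_lane_mb_per_sec(raw_gbits=RAW_LANE_GBITS, data_bits=8, line_bits=10):
    """Payload bandwidth of one lane in MB/sec (decimal megabytes).

    Assumption: encoding overhead is modeled as 8b/10b only; protocol
    overhead above the link layer is ignored.
    """
    payload_bits_per_sec = raw_gbits * 1e9 * data_bits / line_bits
    return payload_bits_per_sec / 8 / 1e6  # bits -> bytes -> megabytes


print(effective_lane_mb_per_sec())  # 250.0
```

Packet headers and flow control reduce the usable rate further, so 250 MB/sec is best treated as a ceiling rather than a guaranteed throughput.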

The big concept to take away is lanes. For example, if you want to use 4Gb Fibre Channel and run full duplex with a single-port HBA, you need 400 MB/sec of bandwidth in each direction. In PCI-E terms, that's two lanes, since two lanes provide 500 MB/sec in each direction and let the HBA run at full rate. You could use a single lane, but you would be limited to 250 MB/sec. That might not be a problem for IOPS-bound workloads such as database index searches.
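The lane count follows from dividing the bandwidth a card needs by the bandwidth a lane provides and rounding up. Here is a minimal sketch of that calculation; `lanes_needed` is a hypothetical helper, and the 200 MB/sec figure for 2Gb Fibre Channel is an assumption made by analogy with the 400 MB/sec quoted for 4Gb.

```python
import math

LANE_MB_PER_SEC = 250  # effective per-lane bandwidth, per direction (from the text)


def lanes_needed(required_mb_per_sec, lane_mb_per_sec=LANE_MB_PER_SEC):
    """Smallest number of lanes whose combined bandwidth covers the requirement."""
    return math.ceil(required_mb_per_sec / lane_mb_per_sec)


print(lanes_needed(200))  # 2Gb Fibre Channel (assumed 200 MB/sec) -> 1
print(lanes_needed(400))  # 4Gb Fibre Channel, single port -> 2
```

Physical slots and cards come in fixed link widths, so a real HBA may ship with a wider connector than this minimum.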

With no other overhead, a 400 MB/sec transfer rate and 16 KB requests would support 25,600 requests per second (400 MB per second / 16 KB per request), while a single lane at 250 MB/sec would support 16,000 requests per second. There is additional overhead, so you will never really achieve those rates, but either way, one lane or two lanes far exceeds what most servers, HBAs and RAID systems can deliver.
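The request-rate figures above can be reproduced directly. This sketch assumes 1 MB = 1024 KB, which is what the quoted numbers imply, and ignores all protocol overhead; the function name is illustrative.

```python
KB_PER_MB = 1024  # assumption: the article's figures imply binary kilobytes


def max_requests_per_sec(bandwidth_mb_per_sec, request_kb):
    """Upper bound on requests per second with zero overhead."""
    return bandwidth_mb_per_sec * KB_PER_MB // request_kb


print(max_requests_per_sec(400, 16))  # 25600 (two lanes, 4Gb Fibre Channel)
print(max_requests_per_sec(250, 16))  # 16000 (single lane)
```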

So from an IOPS perspective, a single lane and a 4Gb HBA will work just fine, and with a dual-port HBA, one or two lanes will more than saturate most RAID configurations. Assuming that a disk drive can do at most 150 random I/Os per second, you would need a large number of disk drives or cache hits to run at full rate. Since most RAID controllers do not have a command queue 8K deep, you would also far exceed the controller's command queue.
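To put "a large number of disk drives" in perspective, the sketch below divides the theoretical request rates by the 150 random I/Os per second assumed for a single drive. It ignores cache hits and RAID overhead, and `drives_to_saturate` is a hypothetical helper.

```python
import math

IOPS_PER_DRIVE = 150  # at most, per the assumption in the text


def drives_to_saturate(requests_per_sec, iops_per_drive=IOPS_PER_DRIVE):
    """Drives needed to service the given request rate with no cache hits."""
    return math.ceil(requests_per_sec / iops_per_drive)


print(drives_to_saturate(25600))  # 171 drives for 400 MB/sec of 16 KB requests
print(drives_to_saturate(16000))  # 107 drives for 250 MB/sec of 16 KB requests
```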

I can’t remember a time when the performance of an I/O bus was faster than the fastest host attachment, so we have reached an important time in technology history where the bus is fast enough to run any card at rate. This assumes a number of things:

What Should an Architect Do?

PCI-E is clearly a better choice than PCI-X for both streaming I/O performance and IOPS. PCI-E is also taking over even in lower-end PCs from Dell, HP and others.

But what about server vendors with large SMP systems? Where is the PCI-E bus? The problem is that large SMP servers have much greater memory bandwidth and a more complex memory infrastructure. It is pretty simple to design a PCI-E bus to fan in and out of memory when you are working on a single board. The job gets way more complex for large systems that have many buses and memory interconnections that cross boards. While these vendors should have thought about this ahead of time with each new generation of server, it is still not a simple problem. Most of the large SMP server vendors are using PCI-X technology today and not PCI-E. This is a problem for a number of reasons:

PCI-E will be the state-of-the-art bus technology for a number of years. It is unfortunate that many of the large SMP vendors didn't plan for the future so that current products would have PCI-E. Designing these changes is complex, but I/O seems to be an afterthought for many of these vendors. As end users, perhaps we need to do more to emphasize the importance of I/O to the large SMP vendors.

Henry Newman, a regular Enterprise Storage Forum contributor, is an industry consultant with 26 years of experience in high-performance computing and storage.
