Technical computing and HPC workloads are moving to the cloud at an ever-increasing pace. The ability to spin up a compute instance quickly and pay only for what you use is a very attractive option. Moving forward, shared storage in the cloud will become an increasing necessity for these workloads. Is this even possible and what options are there?

Public cloud providers have capabilities that allow us to spin up a compute instance that has an operating system and network connectivity very easily. In many cases, you can attach storage block devices to the instance, providing the basis for local storage. (Some providers include a small amount of “instance storage” as well.) Then you can install, build and run your applications in the instance.

If you are not running completely in the cloud, then you will have to upload your data set to the instance. When you’re done running the application(s), you can copy the data back or move it to more permanent cloud storage. (In the case of Amazon this could be S3 or Glacier.)

But this scenario is limited to storage that is associated with the instance (server). What happens if we need shared storage in the cloud itself as we usually do in the HPC world?

There are many times that people or applications need shared storage. The idea is simple—there is one storage pool that is shared by a number of compute resources. In the case of systems in your data center, the centralized storage is shared by a number of servers. The most classic form of shared storage is NFS (Network File System), and in the Microsoft world there is CIFS. The configuration for both approaches is basically the same: there is some centralized storage with at least one server that is connected to a network (typically Ethernet). Client systems then “mount” the storage and immediately have access to the data on the central storage.

The concept has worked well for many years and is still heavily used today. It allows servers to share files so that several applications can read the same data set. The shared storage approach means that the files are in one location so that you don’t have to copy the data to each server that needs it. This saves on space and network bandwidth, and it also makes data management much easier.

On the other hand, if for some reason the centralized storage fails, then every server loses access to the data. But with a good design and processes this problem can be overcome to a great extent.

The issue we should all think about as we move to the cloud is how do we create shared storage in the cloud? Regardless of the answer, the shared storage in the cloud will be constructed from the same components as shared storage not in the cloud: storage, servers, networking and software (always back to the fundamentals). Let’s examine these components and solutions that utilize them by focusing on Amazon Web Services (AWS).

Brief Amazon Review

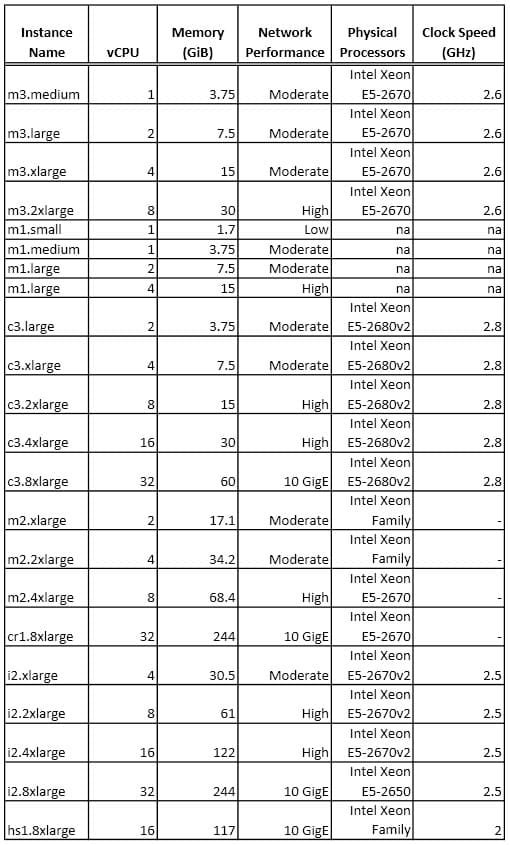

Before jumping into shared storage concepts, I want to quickly review Amazon Web Services (AWS). Let’s start with the compute/networking side of the shared storage solutions. Recall that Amazon has several different compute instances (Amazon EC2) which are effectively servers and networking. The compute power, the general performance, the amount of memory and the network connectivity of the instances vary.

AWS Instances (servers and networking)

If you are not familiar with AWS you might be surprised to learn that Amazon has a number of different instance types. You can think of an instance as a VM with an operating system and some applications. An easy example is a version of CentOS running in a VM with a number of tools installed.

AWS has a wide array of instances that have features and capabilities that can be exploited for solving problems. The list of instances gets updated frequently but here is an abbreviated list of instances and their major features when I wrote this article:

I listed just a few of the features of an instance so you could get a feel for some of the differences. There are many other aspects to selecting an instance such as selecting “cluster” instances, including Enhanced Networking, which has lower latencies and lower network jitter (uses SR-IOV), and cluster networking which provides high-bandwidth and low-latency networking between all instances in the cluster.

Amazon storage options

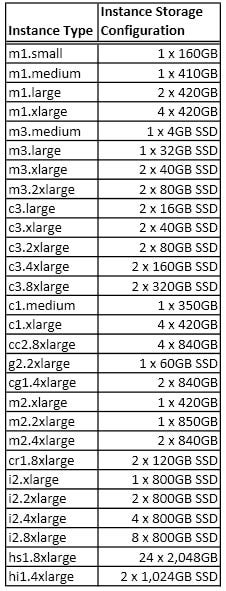

Amazon also has several storage options. I won’t cover all of them because this article is concerned with performance more than capacity or archive. Let’s start with the first one that is generally referred to as “ephemeral storage.” This is “pre-attached” storage that comes with the instance for free (i.e. no extra cost). The word “ephemeral” is used because if you stop and end the instance or if it crashes, then the data is gone.

Don’t dismiss ephemeral storage because it can be very useful depending upon the situation. Virtually all of the instances have ephemeral storage—even the smallest instance, the m1.small, has a 160GB local drive. As the instances get larger, the number, capacity and performance of the local devices increases. A quick list is below.

As you can see, all of the instances have their own storage that can be used for anything you want. Many of the instances have local SSD storage. In particular, the i2.8xlarge instance has eight 800GB SSD drives. You can get quite a bit of IO performance from this storage (Amazon doesn’t publish the performance of the drives in ephemeral storage). Perhaps with the right file system, the SSDs could be used as a cache. Or it could be used outright for file system storage.

There are a large number of instances that have internal SSDs but not always a lot of capacity. At the same time there are instances that have a number of internal drives with more capacity. For example, the m1.xlarge instance has four 420GB drives, which can be used to create a RAID-5 volume with 1,260GB of usable capacity. There is also an instance, the hs1.8xlarge, that has 24 2TB drives. Using all of them in RAID-6 gives you about 44TB of usable capacity.
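The capacity figures above follow from simple parity arithmetic: RAID-5 gives up one drive's worth of space to parity and RAID-6 gives up two. Here is a minimal Python sketch of that arithmetic (the function name is illustrative, and it ignores file system and metadata overhead):

```python
def usable_capacity(num_drives, drive_size, parity_drives):
    """Return the usable capacity of a parity RAID set.

    parity_drives is 1 for RAID-5 and 2 for RAID-6. The result is in the
    same unit as drive_size and ignores file system overhead.
    """
    if num_drives <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return (num_drives - parity_drives) * drive_size


# Four 420GB drives in RAID-5
print(usable_capacity(4, 420, parity_drives=1))   # 1260 (GB)
# Twenty-four 2TB drives in RAID-6
print(usable_capacity(24, 2, parity_drives=2))    # 44 (TB)
```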

A second storage option is called EBS or Elastic Block Store. The concept is pretty simple: an EBS volume is simply a network block device that you can attach to your instance. You can think of it as a “virtual hard drive” if you like. It’s not in the server that contains your instance but rather it is probably a volume from a centralized storage system that is serving out block storage via iSCSI. (Amazon doesn’t document the details of their EBS storage.)

After attaching the EBS volumes to your instance, you can use them like you would directly attached devices. You can combine them with LVM. You can use software RAID (md) across them to create a RAID device. Or you can use both LVM and software RAID to create the underlying storage device for your file system. Then you build your file system on top of the device(s).
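As a rough sketch of the software RAID step, the Python snippet below only builds the command lines you might run as root; it does not execute anything. The device names (/dev/xvdf and so on), the RAID level, the md device name and the choice of ext4 are all assumptions for illustration, so check what your instance actually presents before using them.

```python
import shlex


def build_raid_commands(devices, md_device="/dev/md0", level=0):
    """Build (but do not run) commands to create an md array and a file system.

    devices   -- block devices for the attached EBS volumes (assumed names)
    md_device -- name of the md device to create (assumed)
    level     -- md RAID level, e.g. 0 for striping
    """
    if len(devices) < 2:
        raise ValueError("software RAID needs at least two devices")
    create = ["mdadm", "--create", md_device,
              "--level={}".format(level),
              "--raid-devices={}".format(len(devices))] + list(devices)
    mkfs = ["mkfs.ext4", md_device]
    return [create, mkfs]


# Hypothetical device names for four attached EBS volumes
for cmd in build_raid_commands(["/dev/xvdf", "/dev/xvdg", "/dev/xvdh", "/dev/xvdi"]):
    print(" ".join(shlex.quote(part) for part in cmd))
```

Printing the commands rather than running them makes it easy to review the layout before anything touches the volumes.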

Below is a list of basic EBS characteristics (from Amazon):

- The size of the storage volume is variable and under your control up to 1TB in size.

- Volumes are placed in a specific Availability Zone, and can then be attached to instances in that same zone.

- Multiple volumes can be attached to a single EC2 instance.

- There are two volume types: Standard and Provisioned IOPS

- Standard volumes have an IO performance of about 100 IOPS (about the same as a single hard drive).

- Provisioned IOPS volumes have an IO performance up to about 4,000 IOPS. Fundamentally, a Provisioned IOPS volume is designed for up to 30 IOPS per GB.

- EBS volume data is replicated across multiple servers in an Availability Zone to prevent the loss of data from the failure of any single component.

- If the instance crashes or you stop and terminate the instance, your data is still in the EBS volumes (you have to explicitly delete the EBS volumes to avoid charges).

- EBS volumes have snapshot capabilities:

    - Snapshots are stored in Amazon S3.

    - You can use the point-in-time snapshots to instantiate new volumes.

    - You can copy snapshots across AWS regions, making it easier to use multiple AWS regions for geographical expansion, data center migration, and disaster recovery.

- You can view performance metrics for Amazon EBS volumes using Amazon CloudWatch, giving you insight into the performance.

- If an EBS volume fails, you can recover the volume from the last snapshot.

- After a snapshot is taken, you can immediately access the Amazon EBS volume data. However, this does not mean all of the data is immediately available (EBS snapshots implement lazy loading).

- When you create a volume from a snapshot, you can specify a size larger than that of the original EBS volume.

- You can share the Amazon EBS snapshot by allowing others to create their own EBS volumes based on yours.

- You only pay for the storage and performance that you actually provision.

- EBS volumes are managed with the same IAM (AWS Identity and Access Management) that you use for instance security (role-based access controls). This includes users and groups.
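To make the Provisioned IOPS numbers in the list above concrete, here is a small Python sketch. It assumes the limits quoted in this article (30 IOPS per GB, about 4,000 IOPS per volume, and a 1TB maximum volume size taken as 1,024GB). Amazon changes these limits over time, so treat the constants as placeholders.

```python
import math

IOPS_PER_GB = 30          # ratio quoted above
MAX_VOLUME_IOPS = 4000    # per-volume ceiling quoted above
MAX_VOLUME_GB = 1024      # 1TB limit quoted above, taken as 1,024GB


def max_iops(size_gb):
    """Largest IOPS value that can be provisioned on a volume of size_gb."""
    if not 0 < size_gb <= MAX_VOLUME_GB:
        raise ValueError("volume size out of range")
    return min(MAX_VOLUME_IOPS, IOPS_PER_GB * size_gb)


def min_size_gb(iops):
    """Smallest volume size (whole GB) that allows the requested IOPS."""
    if not 0 < iops <= MAX_VOLUME_IOPS:
        raise ValueError("IOPS out of range for a single volume")
    return math.ceil(iops / IOPS_PER_GB)


print(max_iops(100))      # 3000
print(min_size_gb(4000))  # 134
```

In other words, under these assumptions a volume has to be at least 134GB before it can be provisioned at the full 4,000 IOPS.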

You can use EBS volumes with any Amazon instance, but if performance is truly critical, then Amazon offers Amazon EBS-optimized EC2 instances. These instances cost more, but they provide dedicated throughput between your EC2 instance (server instance) and EBS. The performance ranges from 500 Mb/s to 2,000 Mb/s depending upon the instance type used. These instances can be used with either Standard volumes or Provisioned IOPS EBS volumes.
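Assuming the figures above are in megabits per second, the dedicated EBS bandwidth translates into the byte rates and transfer times computed below. This is a back-of-the-envelope Python sketch; it uses decimal units and ignores protocol overhead and the IOPS limits of the volumes themselves.

```python
def mbit_to_mbyte_per_s(mbit_per_s):
    """Convert megabits per second to megabytes per second (decimal units)."""
    return mbit_per_s / 8.0


def transfer_seconds(data_gb, mbit_per_s):
    """Best-case seconds to move data_gb gigabytes at the given line rate."""
    return (data_gb * 1000.0) / mbit_to_mbyte_per_s(mbit_per_s)


for rate in (500, 2000):
    mb_s = mbit_to_mbyte_per_s(rate)
    minutes = transfer_seconds(1000, rate) / 60.0
    print("{} Mb/s = {:.1f} MB/s, 1TB in about {:.0f} minutes".format(rate, mb_s, minutes))
```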

I’m not going to cover other Amazon storage options, such as S3 or Glacier, since the focus is a bit different than performance. S3 can be a very useful storage solution but for shared storage it’s better to be used in combination with EBS and ephemeral storage.

NAS Options

Network attached storage (NAS) is probably the most pervasive shared storage technology and has been around for a fairly long time. The NFS bits, both the server and the client, are included with virtually every single Linux distribution. Plus, Windows XP and Windows 7 have the ability to be NFS clients for no additional cost (things change with Windows 8). However, there are commercial products that provide NFS capability for Windows as well.

Using the compute, network, and storage options in AWS with the NFS software bits, there is a large range of possible NAS/CIFS shared solutions. Let’s start with the “do-it-yourself” option before moving on to pre-packaged NAS solutions.

Do it yourself

As I previously mentioned, virtually every distribution of Linux comes with the capability of NFS, both client and server. You can spin up a Linux instance in Amazon, configure the storage, be it ephemeral or EBS or both, however you like, and then configure the NFS server (i.e. /etc/exports), and export the storage. Conceptually, this is a fairly easy way to get shared storage, and it works. However, the devil is in the details (as always).
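As a minimal sketch of the export step, the Python function below formats one /etc/exports entry. The export path, the client subnet and the option list are made-up examples rather than recommendations; pick options that match your own security and performance needs.

```python
def exports_line(path, clients, options=("rw", "sync", "no_subtree_check")):
    """Format one /etc/exports entry: <path> <client>(<opts>) [<client>(<opts>) ...]."""
    if not path.startswith("/"):
        raise ValueError("export path must be absolute")
    if not clients:
        raise ValueError("at least one client is required")
    opts = ",".join(options)
    return "{} {}".format(path, " ".join("{}({})".format(c, opts) for c in clients))


# Hypothetical export of /export/data to a VPC subnet
print(exports_line("/export/data", ["10.0.0.0/24"]))
# /export/data 10.0.0.0/24(rw,sync,no_subtree_check)
```

After adding the entry to /etc/exports on the server, running `exportfs -ra` re-reads the file, and a client can then mount the share with something like `mount -t nfs server:/export/data /mnt/data` (the server name and mount point here are placeholders).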

I won’t go over the details of creating a NAS in this article, but you can look around the web for some tutorials about taking disks in your system and exporting them to clients via NFS and CIFS (using Samba). In the case of Amazon AWS the “disks” are EBS Volumes and/or ephemeral storage. There is a good video from AWS re:Invent 2013 about NFS and CIFS Options for AWS. In the video, some of the details of creating a NAS solution are covered along with some recommendations and general rules of thumb. There are also some pointers to software that can help you get started.

Pre-packaged NAS solutions

With the rise of technical computing in the public clouds there has been a corresponding increase in the number of pre-packaged NAS solutions. These are AMIs that can be used to quickly create NAS solutions using Amazon instances and/or EBS volumes. These commercial offerings have hourly pricing on top of the hourly pricing for the instances and the EBS volumes. Each of them has its own unique features and capabilities.

In this section, I’m going to cover a NAS AMI that is available in the Amazon Marketplace. It is representative of what is possible for pre-packaged NAS storage solutions in the cloud.

SoftNAS: SoftNAS has created a NAS gateway, called the SoftNAS Cloud, that utilizes AWS instances, as well as Amazon EBS and S3 storage, to create a NAS in the cloud. It uses ZFS as the underlying file system, giving you SSD caching (presumably using the SSD ephemeral storage in the compute instance), compression, deduplication, scheduled snapshots and read/write clones. The company states that they can achieve up to 10,000 IOPS using EBS and S3 storage, or 40,000 IOPS when using SSD caching. The standard SoftNAS Cloud product scales to 20 TB but the SoftNAS Professional Edition can scale to 154TB of space. (It appears they have tested it up to 500 TB.)

The SoftNAS Cloud can utilize EBS or S3 storage to create something of a tiered storage. It appears that you can combine EBS storage and what the company calls “S3 Cloud Disk” into a RAID-1 mirror configuration. This gives you the potential of improving performance while taking advantage of the redundancy of S3. The nice thing about the S3 Cloud Disk is that it is like “thin provisioning” because you can “allocate” some storage capacity to it but you actually don’t pay for it until you use it.

The SoftNAS Cloud provides NFS, CIFS and iSCSI access to servers. Note that using Amazon’s VPC, you can connect local servers to the AWS instances so that both the local servers and AWS instances can connect to the same storage with varying degrees of performance, but still sharing the same storage.

One nice feature is that the SoftNAS team has created a nice GUI front end to the SoftNAS Cloud product. It allows you to manage and configure the storage, and it also includes some basic monitoring data that you typically see in NAS (i.e. write and read throughput, etc.). You can configure deduplication and compression via the GUI but be aware that you need to pay attention to the instance you are using for the NAS gateway. In general, compression requires more CPU power, and deduplication requires more memory.

You can also create a second SoftNAS Cloud instance and do replication between them (basically asynchronous replication). SoftNAS calls this SnapReplicate, and it is useful if you need to ensure that your data is accessible. (Note: I don’t know the technology they are using but it appears to be rsync.) If you need synchronous replication then you could use something like DRBD to replicate between SoftNAS instances when writing data. However, you should think about doing this before using the NAS gateway; otherwise, you will have to unmount the exported file system from the clients, synchronize the two instances and then re-export the file system.

If you want to get started with SoftNAS, there is a Marketplace AMI (Amazon Machine Image) that you can use. Prices vary depending upon the instance you are using. For example, the t1.micro instance is $0.02/hr (pretty cheap for learning about SoftNAS). The prices go up to $2.40/hr for a c3.8xlarge instance (32 vCPUs, 60 GB memory, 10GigE). For the SoftNAS Cloud prices you pay, you get support from SoftNAS. Also, you can use one instance of SoftNAS for 30 days for no software charges (you still pay for the AWS instance and EBS volumes).

One feature you should definitely keep in mind is that EBS volumes have snapshot capability. This means that you can take a snapshot of the EBS volumes used by SoftNAS and then use these snapshots to create a replica of the original data.

While it is relatively easy to get started with SoftNAS, you should definitely stop and think about what you need from a cloud NAS storage solution. What kind of performance do you think you need? How much capacity? Do you need to replicate the NAS instance or is there another mechanism for keeping a copy of your data somewhere? What kind of network do you need, and how do you want to configure it? Do you need the Provisioned IOPS capability of EBS or not? Do you need to increase the capacity at some point?

Sit down and carefully plan what you need before you start building something—it can save you time, money and some frustration.

Parallel storage

NAS solutions can provide reasonable performance for a wide range of applications in the cloud and allow you to run many client instances sharing the same storage (shared storage). But what happens if you need more performance than what a single NAS gateway can provide? Or what do you do when you have applications that can benefit from parallel IO? The good news is that there are some solutions available in the Amazon Marketplace. These AMIs provide shared parallel storage for applications.

Parallel storage allows you to combine instances (i.e. more than one) and storage to a single file system. This allows very large capacities and faster throughput. The exact details of how the storage and servers are combined into a single file system depend upon the specific file system, but conceptually the idea is to spread portions of the file across multiple servers with their own storage so that the data access for a file can be done in parallel and so that you can lose a server and storage without loss of data (or access to the data).
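The striping idea can be illustrated with a few lines of Python. This is a generic round-robin layout, not the actual placement algorithm of Lustre or OrangeFS, and the stripe size and server count are arbitrary.

```python
def stripe_location(offset, stripe_size, num_servers):
    """Map a byte offset in a file to (server index, offset within that server's object).

    Stripes are assigned to servers round-robin: stripe 0 to server 0,
    stripe 1 to server 1, and so on, wrapping around.
    """
    stripe_index = offset // stripe_size
    server = stripe_index % num_servers
    # Each server holds every num_servers-th stripe, stored back to back.
    local_offset = (stripe_index // num_servers) * stripe_size + offset % stripe_size
    return server, local_offset


def servers_for_read(offset, length, stripe_size, num_servers):
    """Return the set of servers touched by a read of `length` bytes at `offset`."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return {s % num_servers for s in range(first, last + 1)}


MiB = 1024 * 1024
print(stripe_location(5 * MiB + 10, MiB, 4))          # (1, 1048586)
print(sorted(servers_for_read(0, 4 * MiB, MiB, 4)))   # [0, 1, 2, 3]
```

A 4MiB read with 1MiB stripes touches all four servers, which is where the parallel throughput comes from. The sketch does not model the redundancy that protects against the loss of a server.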

In this article, I’m going to cover two options in the Amazon Marketplace: Lustre and OrangeFS.

Lustre: Lustre is the most common parallel file system for HPC according to some studies (e.g. 2013 IDC file system study). It’s open-source with a development community and two sponsoring groups (Open Scalable File System – OpenSFS and European Open File System – EOFS). There are several companies that will sell either support contracts for Lustre (e.g. Intel) or a combined hardware and software solution (Xyratex, DDN, Dell, Terascala, Bull, Inspur) that includes support for Lustre in addition to the hardware.

Recently, Intel has created a version of Lustre that runs in AWS using EC2 instances and EBS volumes. This version, called ICEL (Intel Cloud Edition of Lustre), has three different instance options at this time, one of which is free to use but doesn’t come with any support. The instances are:

The two versions with Global Support provide you with support, one of which uses HVM instances. The “Community Version” is free to use, but if you run into problems, you have to turn to the community Lustre mailing lists for help.

Just like other storage solutions in the cloud, ICEL is constructed from AWS compute and storage instances. You use an instance as an OSS (Object Storage Server) and attach EBS volumes to it for storage. You do the same for the MDS (Metadata Server) and MGS (Management Server) servers: select a compute instance and then attach EBS volumes to it. To get the performance desired, you select the number of OSS instances you need, and to get the capacity you want, you attach a number of EBS volumes to each OSS. (To make things symmetrical, it is recommended that you use the same number of EBS volumes for each OSS instance.)
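Here is a rough sizing sketch in Python, under loudly stated assumptions: every OSS instance delivers the same throughput, every EBS volume has the same size, and the per-OSS throughput figure is something you measure yourself (the numbers below are invented). It simply picks enough OSS instances for the throughput target and then enough volumes per OSS for the capacity target, keeping the layout symmetrical.

```python
import math


def size_lustre(target_mb_s, per_oss_mb_s, target_capacity_gb, volume_gb):
    """Return (number of OSS instances, EBS volumes per OSS, total capacity in GB)."""
    num_oss = max(1, math.ceil(target_mb_s / per_oss_mb_s))
    total_volumes = math.ceil(target_capacity_gb / volume_gb)
    # Same number of volumes on every OSS keeps the file system symmetrical.
    volumes_per_oss = max(1, math.ceil(total_volumes / num_oss))
    return num_oss, volumes_per_oss, num_oss * volumes_per_oss * volume_gb


# Invented example: 1,000 MB/s and 20,000GB using 1,000GB volumes,
# assuming each OSS instance sustains about 120 MB/s
print(size_lustre(1000, 120, 20000, 1000))  # (9, 3, 27000)
```

Rounding up to a symmetrical layout can overshoot the capacity target, as it does here (27,000GB provisioned for a 20,000GB target).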

Assembling the OSS and MDS instances with attached and formatted EBS volumes including installing and configuring Lustre can be time consuming. ICEL has created a CloudFormation template that does all of this for you. You only need to set the KeyName value to your ssh-key, and set the LustreZA value to the specific Availability Zone in the region you are using, and the template automates the deployment of ICEL for you. Once ICEL is up and running, you then have to install the Lustre client on the instances you plan to use with ICEL.

There are some materials around the web that discuss ICEL and its performance. The first presentation was in April 2013 at the 2013 Lustre User Group (LUG). There is also a video of the presentation, in which Robert Read discusses Lustre in AWS. Notice that Robert did some testing using DNE (Distributed Namespace Environment) for Lustre, which is the first release of distributed metadata for Lustre. The really amazing thing about building parallel storage solutions in AWS is that if you need more aggregate IO throughput, you can just add OSS instances. If you need more capacity, you just spin up additional OSS nodes. If you need more aggregate metadata performance, you just spin up MDS instances with EBS volumes. You don’t have to wait for weeks to get new hardware; you just spin up a new instance, and you are off to the races.

The most recent presentation is from the Fall of 2013 at the LAD13 conference (European Lustre User Group Conference). The ICEL presentation is available online. There is an accompanying video of the presentation as well. If you notice in the presentation, the authors mention that the current Lustre AMIs in Marketplace come with Ganglia, LMT (Lustre Monitoring Tool) and ltop (part of LMT), which can be used for monitoring the Lustre file system. They even have a screenshot of Ganglia illustrating monitoring tools for ICEL.

As a matter of full disclosure, I work at Intel as the senior product manager for Intel Lustre in the High Performance Data Division (HPDD), but the ICEL product is predominantly handled by another team within the division.

OrangeFS: One of the very first parallel file systems is PVFS (Parallel Virtual File System). It started as a research project at Clemson University with the first paper being published in 1996. PVFS has been steadily refined and developed over time, even spawning a rewrite, PVFS2. A software company named Omnibond has recently created a commercial version of PVFS2, called OrangeFS, that comes with full support.

There is a version of OrangeFS in AWS that uses the compute instances as well as the EBS volumes. “OrangeFS in the Cloud” was announced in May of 2013. There are two AMIs:

The community version does not use provisioned IOPS with the EBS volumes, resulting in lower performance. There are a total of seven possible EC2 compute instances you can use with this AMI. It also has a fixed price per instance of $0.45/hr but no support.

The Advanced Version comes with support, costs $0.85/hour, and uses the same instances as the Community Version except that it uses Provisioned IOPS to achieve better IOPS performance.

Both the community and advanced editions have four creation options:

- “1-click” single instance with 1.28TB of capacity

- 4 instances with 5 TB of capacity

- 8 instances with 10TB of capacity

- 16 instances with 20 TB of capacity

The number of instances refers to the number of EC2 compute instances used.
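For budgeting, the software charges quoted above can be turned into a quick estimate. This Python sketch assumes the hourly price applies per EC2 instance for both editions (the text above states this only for the community version), and it covers the AMI software charge only; EC2 instance and EBS charges come on top.

```python
SOFTWARE_PRICE_PER_HOUR = {   # USD per instance-hour, as quoted in this article
    "community": 0.45,
    "advanced": 0.85,
}


def software_cost(edition, num_instances, hours):
    """Software charge in USD for running num_instances for the given hours."""
    return round(SOFTWARE_PRICE_PER_HOUR[edition] * num_instances * hours, 2)


# One day of software charges for each of the four creation options
for n in (1, 4, 8, 16):
    print(n, software_cost("community", n, 24), software_cost("advanced", n, 24))
```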

There are a couple of references on the web that talk about OrangeFS itself and about using OrangeFS in the cloud. You can read about PVFS or OrangeFS at their respective websites but there is a fairly extensive paper from the 2012 IEEE International Conference on Massive Storage Systems and Technology that is worth reading. It is authored by Walt Ligon, who was one of the originators of the PVFS project.

A second item of note is a presentation entitled An MPI-IO Cloud Cluster Bioinformatics Summer Project. The presentation is quite interesting because it has a couple of parts. The first part is a presentation on the Amazon storage components. The second part is about using bioinformatics applications that use MPI-IO and OrangeFS in the cloud.

Summary

More and more technical computing or HPC workloads are moving into the cloud. Many of these workloads need or work better with shared storage. Creating shared storage in the cloud is only beginning to be addressed. These solutions are built from the same components as NAS solutions in your data center: compute, network, storage and software. The big difference is that you can spin up a compute instance or add storage in a matter of minutes rather than waiting weeks or perhaps months for the hardware to arrive, be installed, tested and put into production.

Using Amazon as an example, there are some initial designs and experiments with NAS in the cloud including some commercial solutions such as SoftNAS Cloud. It’s fairly easy to configure your own NAS solution if you desire using the exact same commands you would use if you were building your own solution. Commercial solutions such as SoftNAS Cloud offer the option of having all of the heavy lifting done for you in exchange for paying for the software (and you get support).

In addition to NAS, you can also create parallel storage solutions. For example, in Amazon AWS, there are two options, one for Lustre, and one for OrangeFS (PVFS). Both use the same compute and storage instances that you use for NAS, but you create several instances that are combined to create a single file system. If you need more performance, just add more instances. If you need more capacity, just add more instances. Since this is the cloud, it’s very easy to spin up a new instance and add it to the existing storage.

Photo courtesy of Shutterstock.