What’s the difference between solid state drives (SSD) and hard disk drives (HDD) and which is best for you?

The answer depends on understanding the balance of cost, performance, capacity, and reliability between these two storage technologies. In many cases the ultimate goal is to combine HDDs and SSDs in a way geared to your workloads and budget.


So what’s best for your needs? Let’s dive in.



Difference between SSD and HDD

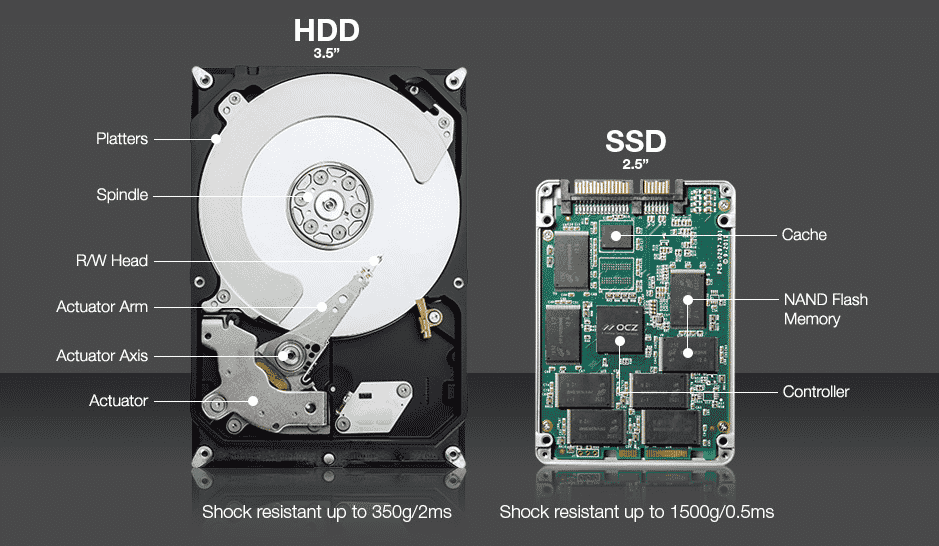

Although both SSD and HDD perform similar jobs, their underlying technology is quite different. Let’s look inside:

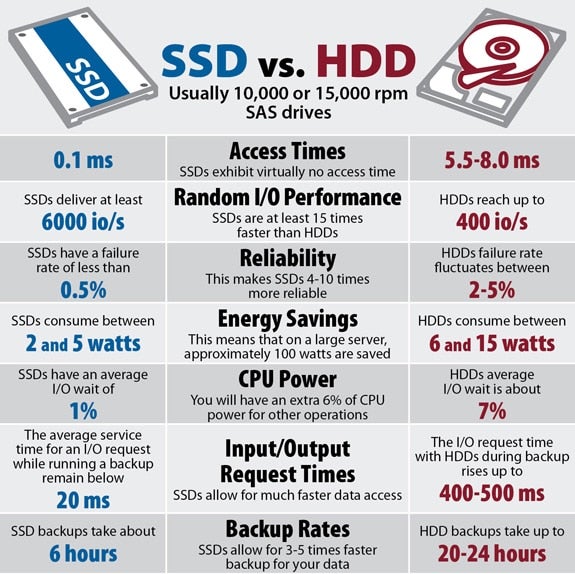

• Read and write speeds: SSDs are significantly faster than HDDs. HDD platters typically spin at 5,400 rpm to 15,000 rpm, and the read/write heads must position themselves over the spinning platters to read or write data. Sequential reads and writes are efficient, but when a file’s data is scattered across non-contiguous sectors (a condition called fragmentation), the heads must move between multiple locations, which slows access. SSDs have no heads to move, so fragmentation costs them little: the controller reaches any cell electronically and can access many flash chips in parallel. This makes SSDs much faster than even 15K RPM enterprise HDDs.

• Capacity: At the top end, SSD capacity has overtaken HDDs. Nimbus Data offers a 100TB 3D NAND flash SSD in a 3.5-inch form factor, while HDD manufacturers are still working to increase areal density. Toshiba, for instance, introduced a 14TB 3.5-inch HDD that reaches that capacity with conventional magnetic recording (CMR) rather than higher-density shingled magnetic recording (SMR). Still, the largest SSDs hold more data per drive than the largest HDDs.

• Encryption: Password-based software encryption works on both HDDs and SSDs. Data passes through an encryption algorithm as it is written to disk and is decrypted when it is read. The approach is simple and inexpensive, but passwords are vulnerable to storage system hacks, and software encryption puts a heavy load on the CPU. Hardware encryption favors SSDs: AES encryption handled by a dedicated cryptoprocessor, located on a chip or microprocessor inside the SSD, has proven successful and offloads that work from the host CPU.

• Workloads: SSDs are ideal for high-performance processing, whether they reside in an all-flash array or in a hybrid storage array. Because they clearly outperform HDDs, companies typically reserve SSDs for performance-critical applications.
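Spin speed translates directly into waiting time. As a rough illustration (a textbook approximation, not a vendor specification), an HDD must on average wait half a revolution for the target sector to rotate under the head. The sketch below computes that average rotational latency in milliseconds, before any seek time is added. SSDs have no platters, so this delay does not apply to them.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency in milliseconds.

    On average the target sector is half a revolution away from the head,
    so the expected wait is half the time of one full rotation.
    """
    seconds_per_revolution = 60.0 / rpm
    return (seconds_per_revolution / 2) * 1000.0


if __name__ == "__main__":
    # Common HDD spindle speeds, from desktop drives to 15K enterprise drives
    for rpm in (5_400, 7_200, 10_000, 15_000):
        print(f"{rpm:>6} rpm -> {avg_rotational_latency_ms(rpm):.2f} ms")
```

Even a 15K RPM drive averages about 2 ms of rotational delay on every random access, on top of head movement, which is a large part of why SSDs pull so far ahead on random I/O.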

Let’s recap the SSD vs HDD differences:

HDDs…

• Use magnetic platters and moving parts to store data.

• Are the legacy data storage standard for PCs, servers and enterprise storage arrays.

• Have lower price tags than SSDs on a per-GB basis.

• Are slower than SSDs.

• Use more electricity than SSDs and require more cooling.

SSDs…

• Use semiconductor chips to store data and have no moving parts.

• Are gaining popularity but remain a pricey storage alternative for PCs, servers and storage systems.

• Have higher price tags than HDDs on a per-GB basis.

• Are much faster than HDDs.

• Use less electricity than HDDs and run cooler.

| | SSD | HDD |
|---|---|---|
| Price | $0.25-$0.27 per GB average | $0.02-$0.03 per GB average |
| Lifespan | 30-80% of tested drives developed bad blocks during their lifetime | 3.5% of tested drives developed bad sectors |
| Ideal for | High-performance processing; all-flash arrays (AFAs) or Tier 0/1 media in hybrid arrays | High-capacity nearline tiers; long-term retained data |
| Read/write speeds | 200 MB/s to 2,500 MB/s | Up to 200 MB/s |
| Benefits | Higher performance for faster read/write operations and fast load times | Less expensive; mature technology with a massive installed user base |
| Drawbacks | May not be as durable or reliable as HDDs; not good for long-term archival data | Mechanical components take longer to read and write than SSDs |

SSD vs HDD Speed and Performance

The speed difference between SSD and HDD is very clear. Performance is the SSD’s primary differentiator, because an HDD can only go so fast: its mechanical design imposes a hard speed limit.

SSD speed

• SSD read/write speed: SSDs win on speed thanks to high IOPS (input/output operations per second). An SSD has no moving parts and therefore no physical seek delay, so its IOPS is far higher than an HDD’s.

• Faster access: An SSD can reach memory addresses much faster than an HDD can move its drive heads. This dramatically higher performance makes SSDs ideal for high-IOPS workloads. Top-performance storage tiers frequently use only SSDs, although the tier one step down may combine high-performance SSDs with high-performing 15K RPM HDDs.

HDD Speed

• HDD read/write speed: HDDs are slower because of their mechanical design. In a hard disk array, the controllers direct read/write requests to physical disk locations; the platter spins and the drive heads move to the designated location.

• Access time issues: With HDDs, non-contiguous reads and writes force the head to seek information in disparate locations, which adds latency. All this physical movement creates slowdowns that SSDs do not suffer from.
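To put a number on that seek penalty, the sketch below uses a common rule of thumb: each small random I/O on an HDD costs one average seek plus half a rotation, with transfer time ignored. The seek time is an assumed value; real figures come from the drive's datasheet. Converting the result to MB/s shows how far random throughput falls below the sequential rates of up to 200 MB/s quoted earlier.

```python
def hdd_random_iops(avg_seek_ms: float, rpm: float) -> float:
    """Rough random IOPS for one HDD: one I/O per (average seek + half a rotation).

    Transfer time for small blocks is ignored as negligible.
    """
    rotational_ms = (60.0 / rpm) / 2 * 1000.0
    return 1000.0 / (avg_seek_ms + rotational_ms)


def throughput_mb_s(iops: float, io_size_bytes: int) -> float:
    """Throughput in MB/s (1 MB = 1,000,000 bytes) for a given IOPS and I/O size."""
    return iops * io_size_bytes / 1_000_000


# ASSUMED average seek time for a 7,200 rpm drive; check the drive's datasheet
ASSUMED_SEEK_MS = 8.5

if __name__ == "__main__":
    iops = hdd_random_iops(ASSUMED_SEEK_MS, 7_200)
    print(f"Random IOPS: {iops:.0f}")
    print(f"Random 4 KiB throughput: {throughput_mb_s(iops, 4096):.2f} MB/s")
```

Under these assumptions a 7,200 rpm drive manages well under 100 random IOPS, or roughly a third of a megabyte per second for 4 KiB requests.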

SSD vs HDD Lifespan and Reliability

SSD vs HDD reliability is a murkier issue than SSD vs. HDD performance. Are SSDs more reliable than HDDs, or vice versa? In short, it depends.

SSDs are extremely reliable in harsh environmental conditions because they have no moving parts to break. SSDs aren’t bothered by extreme cold, heat, or being dropped. HDDs can suffer in harsh conditions, and dropping one might break it.

However, data centers (or your home) typically do not experience arctic temperatures or liftoff. And SSDs do have some hardware that can fail, such as transistors and capacitors. Wayward electrons can also do some damage and failing firmware can take an SSD down with it. Wear and tear are also issues, and even SSD memory cells eventually wear out.

HDD Lifespan and Reliability

Even when HDDs are running in an environmentally safe location (and not being dropped on their heads), internal threats include equipment failures, data errors, and head crashes.

Equipment failure is usually due to wear and tear or manufacturing defects, and it is not typical of newer HDDs. Manufacturers measure HDD reliability by running large clusters of drives from a given model or family, then using the resulting reliability numbers to produce mean time between failures (MTBF) or annualized failure rates (AFR).
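MTBF and AFR are two views of the same data. Under the common simplifying assumption of a constant failure rate and 24x7 operation, AFR = 1 - exp(-8,760 / MTBF), where 8,760 is the number of hours in a year. The MTBF ratings in the sketch are example values, not figures for any specific drive.

```python
import math

HOURS_PER_YEAR = 8_760  # 365 days x 24 hours, powered on continuously


def afr_from_mtbf(mtbf_hours: float) -> float:
    """Annualized failure rate (as a fraction) from MTBF, assuming a constant
    failure rate (exponential model) and 24x7 operation."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


if __name__ == "__main__":
    # Example MTBF ratings for illustration; use the figure from the drive's datasheet
    for mtbf in (1_000_000, 2_500_000):
        print(f"MTBF {mtbf:,} h -> AFR {afr_from_mtbf(mtbf):.2%}")
```

A seemingly enormous MTBF of 1,000,000 hours still corresponds to losing a little under 1% of drives per year in a large fleet.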

Head crashes are a common cause of HDD failure. A head crash occurs when the read/write head touches or scrapes the surface of the platter.

Data errors have several causes. Firmware and the OS can identify some errors; others go undetected until the hard drive fails. Error-correcting code (ECC) helps protect against data errors by storing redundant check bits alongside the data in each sector, so the drive can detect and correct some bit errors when it reads the sector back.

HDD manufacturers report average failure rates of 0.55% to 0.90% per new batch of units. However, they do not report how many under-warranty disks they replace each year. Failures may not be due to the disk at all: they could stem from an overheated data center, a dropped system, or a natural disaster.

SSD Lifespan and Reliability

SSDs less than two to three years old have a significantly lower ARR (annual replacement rate) than hard drives. But as the SSD ages, the story changes. In some tests, 20% of flash drives developed uncorrectable errors over a four-year period, a rate considerably higher than that of hard drives.

While SSDs are better in harsh conditions, they can still fail from a variety of causes:

Bit errors are common failures in which random, incorrect data bits are stored in cells, often because charge leaks out of them (referred to as leaking electrons).

Internal technical errors include flying writes, which are written to the wrong location, and shorn writes, which are truncated by a power loss. Unserializability, another such error, means that writes are recorded in the wrong order.

Firmware is also to blame for some SSD reliability failures, as it is subject to failure, corruption, and improper upgrades. And electronic components like chips and transistors can fail. Finally, although NAND flash is non-volatile, a power failure can corrupt a read/write action.

Additional tests over a 32-month period concluded that 30%-80% of flash drives developed bad blocks during their lifetime, while only 3.5% of HDDs developed bad sectors. Since hard drive sectors are smaller than flash drive blocks, HDD sector failures also affect less data.

Why such a large range of 30%-80% for SSDs? This is partially because vendors use three different measurements for SSD reliability and durability: drive age, total terabytes written (TBW), and the average amount written to the drive within a specified period, such as a day or a week. In short, hard use will take a toll on today’s SSDs.
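The write-based endurance ratings are linked by simple arithmetic. Vendors commonly express endurance either as total terabytes written (TBW) over the warranty period or as drive writes per day (DWPD), meaning how many times the drive's full capacity can be written each day for the whole warranty. The sketch converts between the two; the drive ratings in it are hypothetical.

```python
DAYS_PER_YEAR = 365


def dwpd_from_tbw(tbw: float, capacity_tb: float, warranty_years: float) -> float:
    """Drive writes per day: how many times the full capacity can be written
    each day, every day, for the warranty period before reaching the TBW rating."""
    return tbw / (capacity_tb * DAYS_PER_YEAR * warranty_years)


def tbw_from_dwpd(dwpd: float, capacity_tb: float, warranty_years: float) -> float:
    """Total terabytes written implied by a DWPD rating over the warranty period."""
    return dwpd * capacity_tb * DAYS_PER_YEAR * warranty_years


if __name__ == "__main__":
    # Hypothetical drive ratings, for illustration only
    print(f"{dwpd_from_tbw(600, 1.0, 5):.2f} DWPD")    # 1 TB drive rated 600 TBW over 5 years
    print(f"{tbw_from_dwpd(1.0, 1.92, 5):,.0f} TBW")   # 1.92 TB drive rated 1 DWPD over 5 years
```

A workload that writes more per day than the drive's DWPD rating will exhaust its rated endurance before the warranty period ends, which is the "hard use" the paragraph above warns about.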

SSD vs HDD Price Comparison

Finally, we come to the crux of the SSD vs HDD debate: cost.

On a per-gigabyte (GB) basis, SSDs are more expensive than HDDs. The gap has narrowed significantly over the years, but SSDs remain much more expensive in this regard. Let’s take a look:

| Model | Capacity | List Price | $/GB (GB = 1,000 MB) |
|---|---|---|---|
| SSD: Samsung MZ-V7P1T0BW SSD 970 PRO NVMe M.2 | 1TB | $499.99 | $0.50 |
| SSD: Intel SC2KG480G701 DC (Data Center) S4600 Series SATA 2.5-inch | 480GB | $419.99 | $0.87 |
| SSD: Crucial CT250MX500SSD11 MX500 Series SATA 2.5-inch | 250GB | $72.99 | $0.29 |
| HDD: Western Digital WD121KRYZ WD GOLD Series SATA 3.5-inch | 12TB | $473.99 | $0.039 |
| HDD: Seagate ST8000DM004 Barracuda Pro SATA 3.5-inch | 8TB | $224.99 | $0.028 |
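The $/GB column is simply list price divided by capacity. The sketch below recomputes it from the table's list prices and capacities (model names shortened) and compares the cheapest SSD with the most expensive HDD per gigabyte. These are the article's list prices and will date quickly.

```python
# List prices and capacities from the price table (GB = 1,000 MB); names shortened
DRIVES = [
    ("SSD: Samsung 970 PRO NVMe M.2", 1_000, 499.99),
    ("SSD: Intel DC S4600 SATA 2.5-inch", 480, 419.99),
    ("SSD: Crucial MX500 SATA 2.5-inch", 250, 72.99),
    ("HDD: WD Gold SATA 3.5-inch", 12_000, 473.99),
    ("HDD: Seagate Barracuda Pro SATA 3.5-inch", 8_000, 224.99),
]


def price_per_gb(list_price: float, capacity_gb: float) -> float:
    """Dollars per gigabyte."""
    return list_price / capacity_gb


if __name__ == "__main__":
    for name, capacity_gb, price in DRIVES:
        print(f"{name:<42} ${price_per_gb(price, capacity_gb):.3f}/GB")
    cheapest_ssd = min(price_per_gb(p, c) for n, c, p in DRIVES if n.startswith("SSD"))
    priciest_hdd = max(price_per_gb(p, c) for n, c, p in DRIVES if n.startswith("HDD"))
    print(f"Cheapest SSD costs {cheapest_ssd / priciest_hdd:.1f}x the priciest HDD per GB")
```

Even the least expensive SSD in this sample costs roughly seven times more per gigabyte than the most expensive HDD.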

Still, the SSD vs. HDD pricing question is more involved than a simple price tag. Tape is the cheapest form of storage, followed by HDDs, then SSDs. If cheapest is your goal, tape is your choice. Yet as with many things in life, choosing one over another comes with trade-offs.

Take cars, for example. A performance car costs more than an economical daily driver. The same applies to SSDs and HDDs. Sure, both types of drives will store data, just like both types of cars will get you from point A to point B.

But one can get you there much, much faster.

A few years back, Dell examined the differences between enterprise performance SAS SSDs, enterprise value SATA SSDs, and a 10K HDD. While there’s been some change since then, Dell’s findings remain relevant in today’s market. On a per GB basis, the enterprise performance SSD cost over $30 at the time, compared to just under $20 for the enterprise value SSD and well under $5 for the 10K HDD.

SSD vs HDD: Total Cost of Ownership (TCO)

Buyers must also consider the ongoing costs of operating SSDs and HDDs.

According to a 2017 whitepaper from Samsung, total cost of ownership (TCO) calculations often tip in SSDs’ favor once many capital and operating expenditures (capex and opex) are factored in. For example, Samsung found that an SSD can consume 62 percent less electricity than an enterprise HDD. In terms of read IOPS per watt, an SSD has an astonishing advantage of up to 179,500 percent over an HDD.

Samsung also offers a storage TCO calculator that gives IT buyers a glimpse into how the TCO of SSDs and HDDs compares.
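Electricity is one of the operating costs that feed into TCO. The sketch below estimates a single drive's yearly power bill from its average draw and an electricity tariff. The HDD wattage and price per kWh are assumptions for illustration, and the SSD figure simply applies Samsung's "62 percent less electricity" claim to that assumed HDD wattage.

```python
HOURS_PER_YEAR = 8_760  # drive assumed to run 24x7


def annual_energy_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Yearly electricity cost of one drive running 24x7 (cooling not included)."""
    kwh_per_year = avg_watts * HOURS_PER_YEAR / 1000.0
    return kwh_per_year * price_per_kwh


# ASSUMED inputs for illustration only; substitute your own drive specs and tariff
ASSUMED_PRICE_PER_KWH = 0.12
ASSUMED_HDD_WATTS = 8.0
ASSUMED_SSD_WATTS = ASSUMED_HDD_WATTS * (1 - 0.62)  # applies the "62 percent less" figure

if __name__ == "__main__":
    for label, watts in (("HDD", ASSUMED_HDD_WATTS), ("SSD", ASSUMED_SSD_WATTS)):
        cost = annual_energy_cost(watts, ASSUMED_PRICE_PER_KWH)
        print(f"{label}: {watts:.2f} W -> ${cost:.2f} per year")
```

Per drive the savings look small; they matter because they scale with the number of drives and with cooling, which this sketch leaves out. A fuller TCO model would also add purchase price, rack space, and replacement costs.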

So the real question for you is: is the faster performance worth such a dramatic price jump? What’s most important to you: lower costs (the HDD) or the best possible speed (SSD)?

Which is better, SSD or HDD? The Bottom Line

Want to make the most out of your HDD and SSD investments? Invest your IT dollars wisely with these principles:

Choose based on the job:

HDDs deliver good performance at a great price in sequential workloads like media streaming.

SSDs, meanwhile, make quick work of workloads with random read and write operations.

In short, buy the drive that best suits the job – and don’t spend for performance when you don’t need to.

Not SSD or HDD, but Both

If your workloads are complex, it’s likely best to combine SSD and HDD. You might invest in an all-flash SSD array for high-performance IOPS, but most data center managers buy hybrid arrays in which SSDs and HDDs work in tandem. SSD costs are too high to justify for nearline and secondary storage. And although SSDs can offer higher capacity, data centers primarily need capacity for aging data, which favors HDDs. High-capacity SSDs come at a very high cost with little business justification, so in many situations HDDs work just fine.
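Hybrid arrays usually decide placement automatically, keeping frequently accessed ("hot") data on SSD and less active ("cold") data on HDD. Real tiering engines are vendor-specific and far more sophisticated; the sketch below is a hypothetical greedy policy with made-up extent names and access counts, meant only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    """A chunk of data tracked by a hypothetical tiering engine."""
    name: str
    reads_per_day: int


def place_extents(extents: list[Extent], ssd_slots: int) -> dict[str, list[str]]:
    """Greedy hot/cold placement: the most frequently read extents go to the
    SSD tier until it is full; everything else stays on the HDD tier."""
    ranked = sorted(extents, key=lambda e: e.reads_per_day, reverse=True)
    return {
        "ssd": [e.name for e in ranked[:ssd_slots]],
        "hdd": [e.name for e in ranked[ssd_slots:]],
    }


if __name__ == "__main__":
    # Hypothetical workload: names and read counts are invented for illustration
    workload = [
        Extent("orders-db-index", 50_000),
        Extent("orders-db-table", 12_000),
        Extent("2019-backups", 2),
        Extent("media-archive", 40),
    ]
    print(place_extents(workload, ssd_slots=2))
```

The scarce, expensive SSD capacity goes to the small fraction of data that drives most of the I/O, while the bulk of aging data sits on cheaper HDDs.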

The enterprise data storage marketplace has already reached the same conclusion: HDDs still handle more of the workload than SSDs.