There has been a perennial argument of SAS versus SATA for enterprise storage. Some people say it’s OK to use SATA for enterprise storage and some say that you need to use SAS. In this article I’m going to address two aspects of the SAS vs. SATA argument. The first is about the drives themselves, SATA drives and SAS drives. The second is about data integrity in regard to SATA channels and SAS channels (channels are the connections from the drives to the Host Bus Adapter – HBA).


SAS vs. SATA: Drives – Hard Error Rate

The hard error rate has been written about several times, including in one of Henry Newman’s recent articles. It is defined as the number of bits that are read before the probability of hitting a read error (i.e., a sector that can’t be read) reaches 100%. When a drive encounters a read error, it simply means that the data on that sector cannot be read. Most hardware will retry the read several times, but after a certain number of retries and/or a certain period of time it gives up, the drive reports the sector as unreadable, and the drive is considered failed.

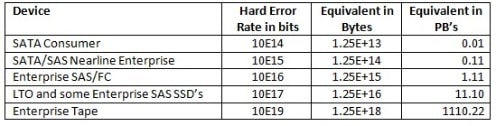

Below is a table from Henry’s previously mentioned article that lists the hard error rates for various drive types and how much data, in petabytes, would have to be read before encountering an unreadable sector.

Table 1: Hard error rate for various storage media

The first row in the table, “SATA Consumer,” covers drives that typically have only a SATA interface (no versions with a SAS interface). Here is an example of a SATA consumer drive spec sheet. Notice that the hard error rate, referred to as “Nonrecoverable Read Errors per Bits Read, Max” in the linked document, is 10E14 as shown in the table above.

The second class of drives, labeled as “SATA/SAS Nearline Enterprise” in the above table, can have a SATA or SAS interface (same drive for either interface). For example, Seagate has two enterprise drives, where the first one has a SAS 12 Gbps interface and the second one has a SATA 6 Gbps interface. The drives are otherwise the same, and both have the same hard error rate, 10E15.

The third class of drives, listed in the third row of the table as “Enterprise SAS/FC,” typically only has a SAS interface. For example, Seagate has a 10.5K drive with a SAS interface (no SATA interface). The hard error rate for these drives is 10E16.

What the table tells us is that Consumer SATA drives are 100 times more likely than Enterprise SAS drives to encounter a read error. Once you have read about 10TB of data from Consumer SATA drives, the probability of encountering a read error approaches 100% (you are virtually guaranteed to get an unreadable sector, resulting in a failed drive).

SATA/SAS Nearline Enterprise drives improve the hard error rate by a factor of 10 but they are still 10 times more likely to encounter a hard read error (inability to read a sector) relative to an Enterprise SAS drive. Their hard error rate is equivalent to reading roughly 111 TB of data (0.11 PB) before hitting an unreadable sector.

On the other hand, with Enterprise SAS drives considerably more data can be read before encountering a read error. For Enterprise SAS drives about 1.1 PB of data can be read before approaching a 100% probability of hitting an unreadable sector (hard error).
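The data volumes quoted for each drive class follow directly from the hard error rates. Here is a minimal sketch of the conversion. It assumes the rates mean one unreadable sector per 10^14, 10^15, and 10^16 bits read, and that the "PB" figures are binary units (PiB); the function and dictionary names are illustrative only.

```python
# Convert a hard error rate (bits read per unrecoverable error)
# into the volume of data read before an error is expected.

PIB = 2**50  # bytes in one pebibyte (assumed unit behind the "PB" figures)


def bits_to_pib(bits_per_error: float) -> float:
    """Return the data volume in PiB that corresponds to bits_per_error bits."""
    return bits_per_error / 8 / PIB


# Assumed interpretation of the three rows of the table.
drive_classes = {
    "SATA Consumer": 1e14,
    "SATA/SAS Nearline Enterprise": 1e15,
    "Enterprise SAS/FC": 1e16,
}

for name, rate in drive_classes.items():
    print(f"{name}: {bits_to_pib(rate):.3f} PiB")
```

Under those assumptions the three classes work out to about 0.011, 0.11, and 1.11 PiB, which lines up with the roughly 10 TB, 111 TB, and 1.1 PB figures used in the text.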

At the point where you encounter a hard error the controller assumes the drive has failed. Assuming the drive was part of a RAID group the controller will start a RAID rebuild using a spare drive. Classic RAID groups will have to read all the disks that remain in the RAID group to rebuild the failed drive. This means they have to read 100% of the remaining drives even if there is no data on portions of the drive.

For instance, if we have a RAID-6 group with 10 total drives and you lose a drive, then 100% of the nine remaining drives have to be read to rebuild the failed drive and regain the RAID-6 protection. This is true even if the file system using the RAID-6 group has no data in it.

For example, if we are using ten 4TB Consumer SATA drives in a RAID-6 group, there is a total of 40TB of raw capacity. Given the information in the previous table, when about 10TB of data is read there is almost a 100% chance of encountering a hard disk error. The drive on which the error has occurred is then failed, causing a rebuild. In the case of the ten-disk RAID-6 group, this means that there are now nine drives and we can only lose one more drive before losing data protection (recall that RAID-6 allows you to lose two drives before the next lost drive results in unrecoverable data loss).

In this scenario, I’m going to assume there is a hot-spare drive that can be used for the rebuild in the RAID group. In a classic RAID-6, all of the remaining nine drives will have to be read (a total of 36TB of data) for the rebuild. The problem is that during the rebuild the probability of hitting another hard error reaches 100% when just 10TB of that 36TB has been read. When this happens there is now a double drive failure and the RAID group is down to eight drives.

If there is a second hot-spare drive then the RAID group starts rebuilding from the remaining eight drives utilizing the two hot-spare drives. To finish the rebuild, 32TB of data needs to be read (8 x 4TB). But again, after reading about 10TB of that data there is nearly a 100% probability of hitting a hard error. Therefore the second rebuild is very likely to fail.
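The rebuild arithmetic in this scenario can be sketched in a few lines. The read volume is taken straight from the text (every surviving drive is read in full). The probability estimate is an assumption added here for illustration: it treats each bit as failing independently with probability 1 in 10^14, which is a common simplification rather than anything the drive vendors or the article specify. The function names are hypothetical.

```python
import math


def rebuild_read_tb(total_drives: int, failed_drives: int, drive_tb: float) -> float:
    """Data (TB) a classic RAID rebuild must read: every surviving drive in full."""
    return (total_drives - failed_drives) * drive_tb


def p_hard_error(read_tb: float, bits_per_error: float) -> float:
    """Probability of at least one hard error while reading read_tb terabytes.

    Assumes independent bit errors with probability 1/bits_per_error each,
    approximated as 1 - exp(-bits_read / bits_per_error).
    """
    bits_read = read_tb * 1e12 * 8
    return -math.expm1(-bits_read / bits_per_error)


# Ten 4TB Consumer SATA drives (assumed rate: one error per 1e14 bits).
for failed in (1, 2):
    tb = rebuild_read_tb(10, failed, 4)
    print(f"{failed} failed: read {tb:.0f} TB, "
          f"P(hard error) = {p_hard_error(tb, 1e14):.2f}")
```

Under this simplified model the first rebuild (36TB) hits a hard error with a probability of roughly 94%, and the second (32TB) with roughly 92%. The model is gentler than the rule of thumb used in the text, but it leads to the same conclusion: both rebuilds are very likely to fail.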

Now three drives in the RAID group have been lost and no data can be recovered from the RAID group. At this point the data needs to be restored from a backup. Hopefully that backup is not based on Consumer SATA drives, because 40TB of data has to be read for the restore and that read is likely to hit a hard error. In fact you are likely not to be able to recover the data from a backup or copy that has been made with Consumer SATA drives because of the exact same scenario I laid out.

In the case of SATA/SAS Nearline Enterprise drives instead of about a 100% probability of hitting a hard drive error at about 10TB of data, it happens at 100TB of data (a factor of 10 improvement over Consumer SATA drives). In the case of SAS drives, the probability of a hard drive error is about 100% after 1.11PB of data has been read. RAID groups of 1.11PB in size are not that common at this point but don’t blink.

It can be confusing to ascertain the hard error rate for the drive you have, so you have to read the specifications carefully looking for the hard error rate. If the rate is not given, chances are that it’s not very good (just my opinion). Depending upon the hard error rate and the drive capacity, you can then decide the size of the RAID groups you can construct without getting too close to the 100% probability point.
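One way to turn that sizing advice into a number is sketched below. It is only an illustration: the `safety_fraction` threshold (here 10% of the data volume implied by the hard error rate) is a hypothetical knob chosen for the example, not a figure from the article or from any vendor, and the function name is made up.

```python
def max_raid_group_drives(bits_per_error: float, drive_tb: float,
                          safety_fraction: float = 0.1) -> int:
    """Largest drive count whose single-drive rebuild reads at most
    safety_fraction of the data volume implied by the hard error rate.

    safety_fraction is a hypothetical margin, not a published guideline.
    """
    budget_tb = bits_per_error / 8 / 1e12 * safety_fraction
    surviving = int(budget_tb // drive_tb)  # drives that may be read in full
    return surviving + 1                    # plus the drive being rebuilt


for label, rate in (("Consumer SATA", 1e14),
                    ("Nearline Enterprise", 1e15),
                    ("Enterprise SAS", 1e16)):
    print(f"{label}: up to {max_raid_group_drives(rate, 4)} x 4TB drives")
```

A result of 1 means that, with this margin, even a two-drive group would read too much data during a rebuild. Pick the margin to match your own tolerance for rebuild failures.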

I have heard many stories of people running Consumer SATA storage arrays that are almost always undergoing constant rebuilds with a corresponding decrease in performance. These designs did not take into account the hard error rate of the drives.

One additional thing to note is that as drives get larger their rotational speed has remained fairly constant. Therefore the rebuild times have greatly increased and the needed CPU resources have greatly increased as well (computing p and q parity for RAID-6, etc.).

With the current capacity of drives the time for a single drive rebuild can be measured in terms of days and is driven primarily by the speed of a single drive. During the rebuild time you are vulnerable to a failure of some type and this could result in the loss of the RAID group (i.e. shorter rebuild times are better). Moreover, during the rebuild period the performance is also degraded because the drives are being read to rebuild the RAID group. Therefore the back-end performance will suffer to some noticeable degree.

Here is a second point that might have escaped notice. The discussion to this point has been about SAS drives vs. SATA drives. While I used hard drives in the discussions, everything I have said to this point applies to SSDs as well. This is extremely important to note because there are storage solutions where SATA SSDs act as a cache for hard drives (or a tier of storage). These drives have the same hard error rate as their spinning cousins. After reading about 10 TB from Consumer SATA SSDs, you approach a 100% probability of hitting a hard error, causing a RAID rebuild. Please look for the hard error rate on the SSD spec sheet. Here is an example.

From this section we learned that Consumer SATA drives can have a hard error rate that is 10x to 100x greater than drives that are either “Nearline Enterprise SATA/SAS” or “Enterprise SAS drives.”

Data Integrity

The next topic in the SAS vs. SATA debate I want to discuss is data integrity. How important is your data to you? Do you have photos of your kids when they were younger that you want to keep around for a very long time so they look like you just took them? Perhaps a better way to state the question is: “Can you tolerate losing part of the picture or having the picture become corrupt?”

Now ask a similar question about your “enterprise data.” How important is that data to your business? Can you tolerate any data corruption? When I worked for a major aerospace company, they were required to keep the engineering data for a type of aircraft for as long as any of those aircraft were still flying. When I left, they were still storing data from the 1940’s.

Now think of aircraft being designed today. It is likely that some of them will be around for 100 years or more. For example, the B-52, which entered service in 1955, is still in active service and will likely continue to fly for many more years into the 2040’s, at which time it will be almost 100 years old!

The records from the 1940’s and 1950’s are all paper documents but today’s aircraft are designed with very little paper and are almost 100% digital. Making sure the digital data is still the same when you access it in 80 years is very important. That is, data integrity is very important and may be the most important aspect of data management a company can address.

In the subsequent sections I’m going to focus on Silent Data Corruption (SDC) in both the SAS and SATA data channels, which affects the data integrity of each type of connection. Then I’ll talk about the impact of T10 DIF/PI and T10-DIX on reducing silent data corruption and how they integrate (or not) with SAS and SATA. Finally, I’ll mention the ZFS file system as a way to (possibly) help the situation.

Silent Data Corruption in the Channel

In the SAS vs. SATA argument a key area that is often overlooked is the data channel itself. Channels refer to the connection from the HBA (Host Bus Adapter) to the drive itself. Data travels through these channels from the controller to the drive and back. As with most things electrical, channels have an error rate due to various influences. The interesting aspect of SAS and SATA channels is that these errors result in what is termed Silent Data Corruption (SDC). This means that you don’t know when they happen. A bit is flipped and you have no way of detecting it, hence the word “silent.”

In general, the standard specification for most channels is 1 bit error in 10E12 bits. That is, for every 10E12 bits transmitted through the channel you will get one data bit corrupted silently (no knowledge of it). This number is referred to as the SDC Rate: the number of bits transferred before you encounter a silent error. The larger the SDC Rate, the more data needs to be passed through the channel before encountering an error.

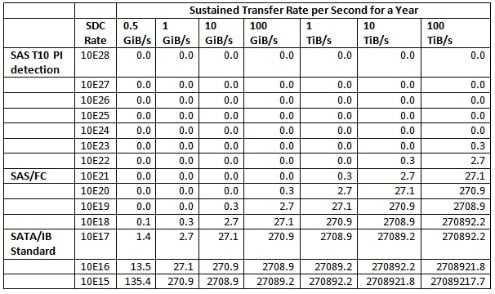

The table below lists the number of SDC’s likely to be encountered for a given SDC rate and a given data transfer rate over a year (table is courtesy of Henry Newman from a presentation given at the IEEE MSST 2013 conference).

Table 2: Numbers of Errors as a function of SDC rate and throughput

For example, if the SDC rate is 10E19 and the transfer data rate is 100 GiB/s you will encounter about 2.7 SDC’s in a year. The key thing to remember is that these errors are silent – you cannot detect them.

The SATA channel (and the IB channel) has an SDC of about 10E17. If you transfer data at 0.5 GiB/s you will likely encounter 1.4 SDC’s in a year. In the case of faster storage with a transfer rate of 10 GiB/s you are likely to encounter 27.1 SDC’s in a year (one every 2 weeks). For very high-speed storage systems that use a SATA channel with a transfer data rate of about 1 TiB/s, you could encounter 2,708 SDC’s (one every 3.2 hours).

On the other hand, SAS channels have a SDC rate of about 10E21. Running at a speed of 10 GiB/s you probably won’t hit any SDC’s in a year. Running at 1 TiB/s you are likely to have 0.3 SDC’s in a year.
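The yearly SDC counts quoted above can be reproduced with a short calculation. This sketch assumes a 365-day year, binary units (1 GiB = 2^30 bytes), and that an SDC rate of "10E17" means one silent error per 10^17 bits transferred; the function name is illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600   # 31,536,000 seconds
BITS_PER_GIB = 8 * 2**30


def sdc_per_year(rate_gib_s: float, bits_per_sdc: float) -> float:
    """Expected silent data corruptions in a year of sustained transfer."""
    bits_per_year = rate_gib_s * BITS_PER_GIB * SECONDS_PER_YEAR
    return bits_per_year / bits_per_sdc


# Assumed channel rates: SATA at one SDC per 1e17 bits, SAS at one per 1e21.
for channel, bits_per_sdc in (("SATA", 1e17), ("SAS", 1e21)):
    for rate in (0.5, 10, 1000):
        print(f"{channel} at {rate} GiB/s: "
              f"{sdc_per_year(rate, bits_per_sdc):.1f} SDCs/year")
```

Note that the 1 TiB/s figures quoted in the text match 1,000 GiB/s rather than 1,024 GiB/s, so the sketch uses 1,000 for that row.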

The importance of this table should not be underestimated. A SATA channel encounters many more SDC’s compared to a SAS channel. The key word in the abbreviation SDC is “silent.” This means you cannot tell when or if the data is corrupted.

T10 Discussion

Sometimes even an SDC of 10E21 is not enough. We have systems with transfer rates hitting the 1 TiB/s mark pretty regularly and new systems being planned and procured with transfer rates of 10 TiB/s or higher (100 TiB/s is not out of the realm of possibility).

Even with SAS channels, at 10 TiB/s you could likely encounter 2.7 SDC’s a year. This may seem like a fairly small number but if data integrity is important to you, then this number is too big. What if the data corruption occurs on someone’s genome sequence? All of a sudden they may have a gene mutation that they actually don’t and the course of cancer treatment follows a direction that may not actually help the person.

Moreover, what happens if the channel is failing and its bit error rate gets worse before it fails? For example, what happens if the base channel rate degrades from 10E12 to something smaller, perhaps 10E11.5 or 10E11? At the very least, systems with very high data rates could see a few more SDC’s than expected.

There is a committee that is part of the InterNational Committee for Information Technology Standards (INCITS), which in turn reports to the American National Standards Institute (ANSI). This committee, called the “T10 Technical Committee on SCSI Storage Interfaces” or T10 for short, is responsible for the SCSI architecture standards, and most of the SCSI command set standards (used by almost all modern I/O interfaces).

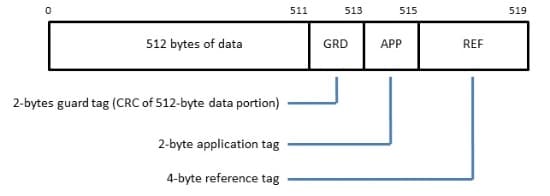

The T10 organization has created a new standard called T10 PI/DIF (PI = Protection Information, DIF = Data Integrity Field). They are really two standards but I tend to combine them since one without the other doesn’t achieve either goal (in the rest of the article I’ll just use T10-DIF to basically refer to both). This standard attempts to address data integrity via hardware and software. The T10-DIF standard adds three fields to a standard disk sector, one of which is a CRC of the data. A diagram for a 512-byte disk sector with the additional fields is shown below:

In a 512-byte sector (shown as 0 to 511 byte count in the previous figure), there are three additional fields, resulting in a 520-byte chunk (not a power of two). The first additional field is a 2-byte data guard that is a CRC of the 512-byte sector. This field is abbreviated as GRD. The second field is a 2-byte application tag, abbreviated as APP. The third field is a 4-byte reference field, abbreviated as REF. With T10-DIF the HBA computes a 2-byte guard CRC (GRD) that is added to the 512-byte data sector before the entire 520-byte sector is passed to the drive. Then the drive can check the GRD CRC against the CRC of the 512-byte sector to check for an error. This greatly reduces the possibility of silent data corruption from the HBA to the disk drive.
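To make the 520-byte layout concrete, here is a minimal sketch that builds and checks a protected sector. The field sizes come from the description above. Everything else is an assumption for illustration: the CRC parameters (polynomial 0x8BB7, zero initial value, no reflection) are those commonly published for the T10-DIF guard, the fields are packed big-endian, and the function names are hypothetical. Real HBAs and drives do this in hardware or firmware.

```python
import struct

SECTOR_SIZE = 512
T10_DIF_POLY = 0x8BB7  # assumed guard polynomial (commonly cited for T10-DIF)


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16 with polynomial 0x8BB7, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ T10_DIF_POLY) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def protect_sector(data: bytes, app_tag: int, ref_tag: int) -> bytes:
    """Append GRD (2 bytes), APP (2 bytes), and REF (4 bytes) to a 512-byte sector."""
    if len(data) != SECTOR_SIZE:
        raise ValueError("expected a 512-byte sector")
    guard = crc16_t10dif(data)
    return data + struct.pack(">HHI", guard, app_tag, ref_tag)


def guard_ok(sector: bytes) -> bool:
    """Recompute the CRC of the data and compare it with the stored GRD field."""
    data, trailer = sector[:SECTOR_SIZE], sector[SECTOR_SIZE:]
    guard, _app, _ref = struct.unpack(">HHI", trailer)
    return crc16_t10dif(data) == guard


sector = protect_sector(bytes(range(256)) * 2, app_tag=0, ref_tag=7)
print(len(sector), guard_ok(sector))
```

The check on the receiving side is the same computation the sender performed, so a bit flipped anywhere in the 512 data bytes while in flight shows up as a guard mismatch instead of passing silently.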

T10-DIF means that drives have to be able to handle 520-byte sectors (not just 512 bytes). Moreover, if the drives have 4096-byte sectors then the T10-DIF standard changes to 4,104-byte sectors. There are HBA and disk manufacturers that support T10-DIF drives today. For example, LSI has HBA’s that are T10-DIF compliant and there are T10-DIF compliant drives from several manufacturers.

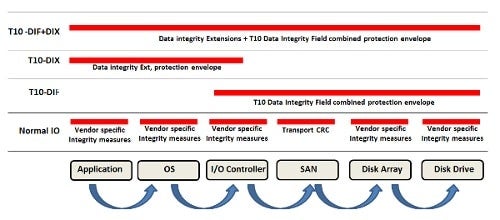

A visual way of thinking about the protection it provides is shown in the figure below:

Figure 2: T10 protection in the data path

At the bottom of the figure is a representation of the data path from the application down to the disk drive including the possibility of a storage network (SAN) being in the path (this can be a SAS network as well). The first row above the data components, labeled as “Normal IO,” illustrates the data integrity checks that vendors have implemented. They are not coordinated and are not tied to each other across components, leaving the possibility of silent data corruption between steps in the data path.

The next level above, labeled on the left as “T10-DIF,” illustrates where T10-DIF enters the picture. The HBA computes the checksum and puts it in the GRD field of the 520-byte sector. Then this is passed all the way down to the drive, which can check that the data is correct by checking the GRD checksum against the computed checksum of the data. T10-DIF introduces some data integrity protection from the HBA to the disk drive.

If you go back to the table of SDC’s you will find that when using T10-DIF with a SAS channel, the SDC rate increases to 10E28. For a storage system running at 100 TiB/s for an entire year it is not likely that a single SDC will be encountered in the SAS channel. Just in case you didn’t notice, a storage system running at 100 TiB/s for a year moves roughly 3,153,600,000 TiB of total throughput (roughly 3,000 exbibytes).
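The yearly totals can be checked with a few lines of arithmetic. This sketch assumes a 365-day year, binary units (1 EiB = 2^20 TiB), and an SDC rate of one error per 10^28 bits for SAS with T10-DIF; the function name is illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600   # 31,536,000 seconds


def yearly_totals(rate_tib_s: int, bits_per_sdc: float = 1e28):
    """Return (TiB moved, EiB moved, expected SDCs) for one year at rate_tib_s."""
    total_tib = rate_tib_s * SECONDS_PER_YEAR
    total_eib = total_tib / 2**20          # 1 EiB = 2**20 TiB
    total_bits = total_tib * 2**40 * 8
    return total_tib, total_eib, total_bits / bits_per_sdc


tib, eib, sdcs = yearly_totals(100)
print(f"{tib:,} TiB = {eib:,.0f} EiB, expected SDCs: {sdcs:.1e}")
```

At 100 TiB/s that is 3,153,600,000 TiB, or about 3,000 EiB, and the expected number of SDCs in the year is on the order of a few in a million.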

Using T10-DIF added 7 orders of magnitude to the SDC protection of a SAS channel (10E21 to 10E28). SAS + T10-DIF is now 11 orders of magnitude better than the SATA channel (10E28 versus 10E17).

There is a second standard named T10-DIX in which a checksum is computed at the application level and inserted into the 520-byte chunk (the APP field). The T10-DIX protection is shown in the previous diagram on the line with the left-hand label of “T10-DIX.” It can be used to check data integrity all the way down to the HBA where T10-DIF kicks in.

If you look at the previous diagram, the application, or something close to it, creates a 2-byte CRC (tag) and puts it in the 520-byte data chunk. This allows the data to be checked all the way to the HBA. Then the HBA can do a checksum and add it to the correct place in the T10-DIF field and send it down to the drive.

If you look at the very top line of the previous diagram, labeled on the left as “T10-DIF+DIX,” you can see how the T10 additions ensure data integrity from the application to the drive. This is precisely what is needed if data integrity means anything to you.

There are a few things of note with T10-DIF (PI) and T10-DIX. The first is that the application field cannot be passed through the VFS layer without changes to POSIX. Secondly, in the case of NFS the ability to pass 520-byte sectors is not likely to happen either, unless the underlying protocol is changed (and POSIX). That means that NFS is not a good protocol at this time if you want data integrity from the application down to the HBA (T10-DIX) from the host.

If you have carefully read the T10 discussion you will notice that it is all around SAS. T10-DIF/PI and T10-DIX cannot be implemented with SATA. Therefore the SAS channel with T10-DIF just widens the gap with the SATA channel in terms of SDC.

Notice that the data integrity discussion is about channels and not drives. If any storage device uses a SATA channel then it suffers from the SDC rate previously discussed. If the storage device uses a SAS channel then it has the SAS SDC rate and can use T10-DIF/PI and T10-DIX to improve the SDC rate to a very high level. To be crystal clear, this includes SSD devices. SATA SSD devices that use the SATA channel have a very poor SDC rate relative to SSDs that use the SAS channel (SAS-attached SSD devices).

There are many storage systems that use a caching tier of SSD’s. The concept is to use very fast SSD’s in front of a large capacity of drives. The classic approach is to use SAS drives for the “backing store” in the caching system and use the SSD’s as the write and read cache layer. Many of these solutions use SAS channels on the backing storage, resulting in a reasonable SDC rate, but use SSD’s with a SATA channel in the caching layer.

Some of these caching layers can run at 10 GiB/s. With a SATA channel you are likely to get 27.1 SDC’s per year (about one every two weeks) while the SAS channel devices have virtually no SDC’s in a year. The resulting storage solution only has as much data integrity as the weakest link, which in this case is the SATA channel. With this concept you have taken a SAS channel based storage layer with a very good SDC rate and coupled it to a SATA channel based caching layer with an extremely poor SDC rate.

Can’t ZFS cure SATA’s ills?

One question you may have at this point is whether a file system like ZFS could allow you to use drives attached via a SATA channel despite their limitations. ZFS focuses on data integrity and checksums the data, so shouldn’t it “fix” some of the SATA channel’s problems? Let’s take a look at this since the devil is always in the details.

ZFS does use checksums. When it writes data to the storage, it computes checksums of each block and writes them along with the data to the storage devices. The checksums are written in the pointer to the block. A checksum of the block pointer itself is also computed and stored in its pointer. This continues all the way up the tree to the root node which also has a checksum.

When the data block is read, the checksum is computed and compared to the checksum stored in the block pointer. If the checksums match, the data is passed from the file system to the calling function. If the checksums do not match, then the data is corrected using either mirroring or RAID (depends upon how ZFS is configured).

Remember that the checksums are made on the blocks and not on the entire file, allowing the bad block(s) to be reconstructed if the checksums don’t match and if the information is available for reconstructing the block(s). If the blocks are mirrored, then the mirror of the block is used and checked for integrity. If the blocks are stored using RAID then the data is reconstructed just like you would any RAID data: from the remaining blocks and the parity blocks. However, a key point to remember is that in the case of multiple checksum failures the file is considered corrupt and it must be restored from a backup.
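The read path described above can be illustrated with a toy model. This is not ZFS code and does not reflect how ZFS is implemented internally: it is a sketch of the idea of keeping the checksum in the block pointer, verifying on read, and falling back to a mirror copy. The class name, the two-way mirror, and the use of SHA-256 are all assumptions made for the example.

```python
import hashlib


class MirroredStore:
    """Toy two-way mirror with per-block checksums kept apart from the data."""

    def __init__(self):
        self.copies = [{}, {}]   # two mirror sides: block id -> bytes
        self.pointers = {}       # block id -> checksum ("block pointer")

    def write(self, block_id: str, data: bytes) -> None:
        # The checksum is computed in memory before the data goes to "disk".
        self.pointers[block_id] = hashlib.sha256(data).digest()
        for side in self.copies:
            side[block_id] = data

    def read(self, block_id: str) -> bytes:
        expected = self.pointers[block_id]
        for side in self.copies:
            data = side[block_id]
            if hashlib.sha256(data).digest() == expected:
                # Repair any copy that no longer matches the good one.
                for other in self.copies:
                    other[block_id] = data
                return data
        raise IOError("all copies failed the checksum; restore from backup")


store = MirroredStore()
store.write("b0", b"payload")
store.copies[0]["b0"] = b"pAyload"   # simulate silent corruption of one copy
print(store.read("b0"))              # the good mirror copy is returned
```

The corruption is only noticed when `read` is called, which is the same reason a real file system has to re-read data before it can find and repair a silently corrupted block.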

ZFS can help data integrity in some regards. ZFS computes the checksum information in memory prior to the data being passed to the drives. It is very unlikely that the checksum information will be corrupt in memory. After computing the checksums, ZFS writes the data to the drives via the channel as well as writes the checksums into the block pointers.

Since the data has come through the channel, then it is possible that the data can become corrupted by a SDC. In that case ZFS will write corrupted data (either the data or checksum possibly both). When the data is read, ZFS is capable of recovering the correct data because it will either detect a corrupted checksum for the data (stored in the block pointer) or it will detect corrupted data. In either case, it will restore the data from a mirror or RAID.

The key point is that the only way to discover if the data is bad is to read it again. ZFS has a feature called “scrubbing” that walks the data tree and checks both the checksums in the block pointers as well as the data itself. If it detects problems then the data is corrected. But scrubbing will consume CPU and memory resources while storage performance will be reduced to some degree (scrubbing is done in the background).

If you get a hard error on the drive (see first section) before ZFS has scrubbed data that was corrupted by an SDC in the SATA channel, then it’s very possible that you can’t recover the data. The checksums could have been used to detect and correct the corruption, but now a drive holding the block and block pointer is dead, making life very difficult.

Given the drive error rate of Consumer SATA drives in the first section and the size of the RAID groups, plus the SATA channel SDC rate, this combination of events is a distinct possibility (unless you scrub data at a very high rate so that newly landed data is scrubbed immediately, which limits the performance of the file system).

Therefore ZFS can “help” the SATA channel in terms of reducing the effective SDC because it can recover data corrupted by the SATA channel, but to do this, all of the data that is written must be read as well (to correct the data). This means to write a chunk of data you have to compute the checksum in memory, write it with the data to the storage system, re-read the data and checksum, compare the stored checksum to the computed checksum, and possibly recover the corrupted data and compute a new checksum and write it to disk. This is a great deal of work just to write a chunk of data.

Another consideration for SAS vs. SATA is performance. Right now SATA has a 6 Gbps interface. Instead of doubling the interface to go to 12 Gbps, the decision was made to switch to something called SATA Express. This is a new interface that supports either SATA or PCI Express storage devices. SATA Express should start to appear in consumer systems in 2014 but the peak performance can vary widely from as low as 6 Gbps for legacy SATA devices to 8-16 Gbps for PCI Express devices (e.g. PCIe SSDs).

However, there are companies currently selling SAS drives with a 12 Gbps interface. Moreover, in a few years, there will be 24 Gbps SAS drives.

SATA vs. SAS: Summary and Observations

Let’s recap. To begin with, SATA drives have a much higher (worse) hard error rate than SAS drives. Consumer SATA drives are 100 times more likely to encounter a hard error than Enterprise SAS drives. The SATA/SAS Nearline Enterprise drives have a hard error rate that is only 10 times worse than Enterprise SAS drives. Because of this, RAID group sizes are limited when Consumer SATA drives are used or you run the risk of multi-disk failure that even something like RAID-6 cannot help. There are plenty of stories of people who have used Consumer SATA drives in larger RAID groups where the array is constantly in the middle of a rebuild. Performance suffers accordingly.

The SATA channel has a much higher incidence rate of silent data corruption (SDC) than the SAS channel. In fact, the SATA channel is four orders of magnitude worse than the SAS channel for SDC rates. For the data rates of today’s larger systems, you are likely to encounter a few silent data corruptions per year even running at 0.5 GiB/s with a SATA channel (about 1.4 per year). On the other hand, the SAS Channel allows you to use a much higher data rate without encountering an SDC. You need to run the SAS Channel at about 1 TiB/s for a year before you might encounter an SDC (theoretically 0.3 per year).

Using T10-DIF, the SDC rate for the SAS channel can be increased to the point we are likely never to encounter a SDC in a year until we start pushing above the 100 TiB/s data rate range. Adding in T10-DIX is even better because we start to address the data integrity issues from the application to the HBA (T10-DIF fixes the data integrity from the HBA to the drive). But changes in POSIX are required to allow T10-DIX to happen.

But T10-DIF and T10-DIX cannot be used with the SATA channel so we are stuck with a fairly high rate of SDC by using the SATA Channel. This is fine for home systems that have a couple of SATA drives or so, but for the enterprise world or for systems that have a reasonable amount of capacity, SATA drives and the SATA channel are a bad combination (lots of drive rebuilds and lots of silent data corruption).

File systems that do proper checksums, such as ZFS, can help with data integrity issues because they write a checksum along with the data blocks, but they are not perfect. In the case of ZFS, to check for data corruption you have to read the data again. This really cuts into performance and increases CPU usage (remember that ZFS uses software RAID). We don’t know the ultimate impact on the SDC rate but it can help. Unfortunately I don’t have any estimates of how much the SDC rate improves when ZFS is used.

Increasingly, there are storage solutions that use a smaller caching tier in front of a larger capacity but slower tier. The classic example is using SSD’s in front of spinning disks. The goal of this configuration is to effectively utilize much faster but typically costlier SSD’s in front of slower but much larger capacity spinning drives. Conceptually, writes are first done to the SSD’s and then migrated to the slower disks per some policy. Data that is to be read is also pulled into the SSD’s as needed so that read speed is much faster than if it was read from the disks. But in this configuration the overall data integrity of the solution is limited by the weakest link as previously discussed.

If you are wondering about using PCI Express SSDs instead of SATA SSDs, you can do that, but unfortunately I don’t know the SDC rate for PCIe drives and I can’t find anything that has been published. Moreover, I don’t believe there is a way to dual-port these drives so that you can use them between two servers for data resiliency (in many cases if the cache goes down, the entire storage solution goes down).

If you have made it to the end of the article, congratulations, it is a little longer than I hoped but I wanted to present some technical facts rather than hand waving and arguing. It’s pretty obvious that for reasonably large storage solutions where data integrity is important, SATA is not the way to go. But that doesn’t mean SATA is pointless. I use them in my home desktop very successfully, but I don’t have a great deal of data and I don’t push that much data through the SATA channel. Take the time to understand your data integrity needs and what kind of solution you need.

Photo courtesy of Shutterstock.