Random access memory (RAM) provides short-term storage space for data and program code that a computer processor is currently using, or expects to use imminently. Every computer needs RAM, whether its long-term storage is a solid state drive (SSD) or a hard disk drive (HDD).

One of the key characteristics of RAM is that it is much faster than a hard disk drive or other long-term storage device, so the processor is not kept waiting for data to process.

The simple answer to the question “why do you need RAM?” is: speed.

What Is RAM and How Does It Work?

The name random access memory derives from the fact that, in general, any random bit of data can be read from or written to random access memory as quickly as any other bit. This contrasts with storage media such as spinning disks or tape, where the access speed depends on the exact location of the data on the storage media, the speed of rotation of the media, and other factors.
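The contrast can be sketched with a toy cost model in Python. The cost units and the function names `ram_access_cost` and `tape_access_cost` are hypothetical, chosen only for illustration. Random access costs the same for every address, while a tape-like medium must first wind past every cell between the read head and the target.

```python
# Toy cost model (hypothetical units, not real timings) contrasting
# random access with sequential access.

RAM_ACCESS_COST = 1  # assumed: every address costs the same to reach


def ram_access_cost(address: int) -> int:
    """Random access: the cost is independent of the address."""
    return RAM_ACCESS_COST


def tape_access_cost(head_position: int, address: int, cost_per_cell: int = 1) -> int:
    """Sequential access: wind past every intervening cell, then read one."""
    seek = abs(address - head_position) * cost_per_cell
    return seek + 1  # +1 for reading the target cell itself


if __name__ == "__main__":
    for addr in (0, 10, 1_000_000):
        print(addr, ram_access_cost(addr), tape_access_cost(0, addr))
```

In this model, reading address 1,000,000 costs the same as reading address 0 from RAM, but about a million times more from the tape.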

In fact, random access memory is not the fastest storage area that a computer has access to. The very fastest areas are the hardware registers built into the central processing unit, followed by on-die and external data caches. However, these areas are very small because they are expensive: they are often measured in bytes, kilobytes, or a few megabytes.

By contrast, a modern computer system will have random access memory measured in gigabytes, while the slower but cheaper long-term storage provided by hard drives or solid state drives is often measured in terabytes.
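Why a small, fast store in front of a large, slow one pays off can be sketched with a toy two-level memory in Python. Everything here is an illustrative assumption rather than how real hardware works: the latencies (1 unit for the cache, 100 for the backing store), the hypothetical class name `TwoLevelMemory`, and the least-recently-used (LRU) eviction policy. Real CPU caches are implemented in hardware and organized quite differently.

```python
from collections import OrderedDict


class TwoLevelMemory:
    """Toy model: a small, fast cache in front of a large, slow backing store.

    Latencies and the LRU eviction policy are illustrative assumptions.
    """

    def __init__(self, backing: dict, cache_size: int,
                 cache_latency: int = 1, backing_latency: int = 100):
        self.backing = backing
        self.cache = OrderedDict()
        self.cache_size = cache_size
        self.cache_latency = cache_latency
        self.backing_latency = backing_latency
        self.total_latency = 0

    def read(self, address):
        if address in self.cache:
            self.cache.move_to_end(address)  # mark as most recently used
            self.total_latency += self.cache_latency
            return self.cache[address]
        # Miss: fetch from the slow store and keep a copy in the cache.
        value = self.backing[address]
        self.total_latency += self.backing_latency
        self.cache[address] = value
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return value


if __name__ == "__main__":
    mem = TwoLevelMemory({i: i * i for i in range(1000)}, cache_size=4)
    for _ in range(10):
        mem.read(7)  # one slow miss, then nine fast hits
    print(mem.total_latency)
```

Repeatedly reading the same address costs one slow miss followed by fast hits (100 + 9 × 1 = 109 units here), which is the payoff of keeping frequently used data in the smaller, faster layer.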

Random access memory also differs from long term storage in that it is volatile. This means that it can only store data (or program code) while the computer is powered on. As soon as the power is cut all the data contained in random access memory is lost.

For that reason, random access memory is sometimes known as working memory: memory that is used while the computer is operating. Before the computer is switched off, all data that is to be retained must be written to non-volatile long-term storage so that it can be accessed in the future.

Data stored in random access memory can be accessed far faster than data stored on the computer’s hard drive.

RAM’s Other Functions

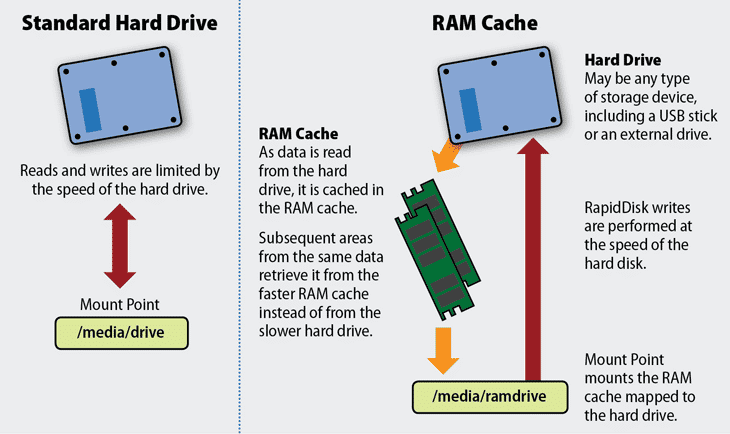

As illustrated in the graphic above, the main function of RAM is to provide fast temporary storage and a workspace for data and program code. That code includes applications, the operating system, and the drivers for each hardware device, such as hard disk controllers, keyboards, and printers.

But because random access memory works very quickly compared to longer term storage, it is also used in other ways which take advantage of this speed.

One example is the use of random access memory as a “RAM disk.” This reserves a portion of random access memory and uses it as a virtual hard disk drive, which is assigned a drive letter and appears exactly like a conventional disk drive, except that it works much faster.

In some circumstances this can be very useful, but the drawbacks are that the size of a RAM disk is limited, and using a RAM disk means there is less random access memory left for regular usage.

Another use for random access memory is “shadow RAM.” In some operating systems (but not Windows), some of the contents of the system’s BIOS, which is stored in the system’s read-only memory (ROM), are copied into RAM. The system then uses this copy of the BIOS code instead of the original version stored in ROM.

The benefit of this is speed: reading BIOS code from RAM is about twice as fast as reading it from ROM.

Types of RAM

Random access memory chips are generally packed into standard-sized RAM modules, such as Dual In-line Memory Modules (DIMMs) or the more compact Small Outline Dual In-line Memory Modules (SODIMMs), which are inserted into the RAM module sockets on a computer’s motherboard.

The two most common forms of random access memory today are:

- Dynamic random access memory (DRAM), which is slower but cheaper

- Static random access memory (SRAM), which is faster but more expensive

Many people wonder about the differences between DRAM and RAM, but in fact DRAM is just a type of RAM.

SRAM vs. DRAM

To understand why SRAM is more expensive than DRAM, it is necessary to look at the structure of the two types of random access memory.

Random access memory is made up of memory cells, and each memory cell can store a single bit of data: either a zero or a one. Put simply, the cells are connected in a grid made up of address lines (also called word lines) and perpendicular bit lines. Specifying one address line and one bit line selects exactly one cell, so each individual cell is uniquely addressable.
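The row-and-column addressing can be sketched as a toy model in Python. The class name `CellGrid`, the grid size, and the row-major mapping from a flat address to a (row, column) pair are illustrative assumptions; real memory chips split addresses into rows and columns in hardware-specific ways.

```python
class CellGrid:
    """Toy memory array: one bit per cell, addressed by (row, column).

    The row stands in for an address line and the column for a bit line.
    """

    def __init__(self, rows: int, cols: int):
        self.rows = rows
        self.cols = cols
        self.cells = [[0] * cols for _ in range(rows)]

    def split(self, address: int) -> tuple[int, int]:
        """Map a flat bit address onto exactly one (row, column) pair."""
        if not 0 <= address < self.rows * self.cols:
            raise IndexError("address out of range")
        return divmod(address, self.cols)

    def write(self, address: int, bit: int) -> None:
        row, col = self.split(address)
        self.cells[row][col] = bit & 1  # keep only the lowest bit: 0 or 1

    def read(self, address: int) -> int:
        row, col = self.split(address)
        return self.cells[row][col]
```

For example, in a grid with 4 rows and 8 columns, flat address 13 selects row 1, column 5, and no other address maps to that cell.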

The difference between SRAM and DRAM lies in the structure of the cells themselves.

DRAM has by far the simpler of the two cell types: each cell consists of just two electronic components, a capacitor and a transistor. The capacitor can be filled with electrons to store a one, or emptied to store a zero, while the transistor acts as the switch that allows the capacitor to be filled or emptied.

A problem with this type of cell is that capacitors are leaky, so as soon as they are filled with electrons they begin to drain. That means every cell must be read and rewritten (refreshed) before its charge leaks away, typically at least once every 64 milliseconds; across a whole memory system, the memory controller issues refresh commands many thousands of times a second. For that reason these random access memory cells are called “dynamic,” and a side effect is that refreshing consumes power even when the memory is idle.
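The leak-and-refresh cycle can be sketched as a toy simulation in Python. The leak rate and sense threshold are invented for illustration, not measured from any real device; they are tuned so that a stored one survives 64 milliseconds but not much longer, 64 ms being a common DRAM refresh period. The function name `read_after` is also hypothetical.

```python
# Toy model of a single DRAM cell. The constants are invented for
# illustration; they are not measured properties of any real device.

FULL_CHARGE = 1.0
SENSE_THRESHOLD = 0.5  # assumed: charge above this reads back as a one
LEAK_PER_MS = 0.99     # assumed: the capacitor keeps 99% of its charge per ms


def read_after(bit: int, duration_ms: int, refresh_interval_ms: int = 0) -> int:
    """Store `bit`, wait `duration_ms`, then read it back.

    A refresh_interval_ms of 0 disables refresh entirely.
    """
    charge = FULL_CHARGE if bit else 0.0
    for t in range(1, duration_ms + 1):
        charge *= LEAK_PER_MS  # the capacitor drains a little every millisecond
        if refresh_interval_ms and t % refresh_interval_ms == 0:
            # Refresh: sense the current value, then rewrite it at full strength.
            charge = FULL_CHARGE if charge > SENSE_THRESHOLD else 0.0
    return 1 if charge > SENSE_THRESHOLD else 0


if __name__ == "__main__":
    print(read_after(1, 1000))                          # no refresh: the one leaks away
    print(read_after(1, 1000, refresh_interval_ms=64))  # refreshed: the one survives
```

Without refresh, the stored one in this model decays into a zero after about 69 simulated milliseconds; refreshing every 64 milliseconds restores the charge just before it would cross the threshold. Refreshing a zero simply rewrites a zero.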


Understanding SRAM Cells

Static random access memory cells are far more complicated because they are built using several (usually six) transistors, typically MOSFETs, and contain no capacitors. The cell is “bistable” and uses a “flip-flop” design. Put simply, this means that a zero going into one half results in a one coming out; this is fed into the other half, where the one going in results in a zero coming out.

This is fed back to the start, with the result that the cell holds a fixed value until it is altered. This static state results in the name static random access memory. But it is important to note that, like dynamic random access memory, static random access memory is volatile and loses all data when it loses power.
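The feedback loop can be sketched as a toy logic-level model in Python: two inverters, each feeding the other. The class name `SramCell` is hypothetical, and the model ignores the access transistors, bit lines, and power supply, so it says nothing about volatility; it only shows why a consistent pair of values holds steady until a write overrides it.

```python
def inverter(x: int) -> int:
    """A logic inverter: 0 in gives 1 out, 1 in gives 0 out."""
    return 1 - x


class SramCell:
    """Toy bistable cell built from two cross-coupled inverters."""

    def __init__(self, q: int = 0):
        self.q = q                # output of the first inverter
        self.q_bar = inverter(q)  # output of the second inverter

    def settle(self, steps: int = 4) -> None:
        """Run the feedback loop; a consistent pair never changes."""
        for _ in range(steps):
            self.q_bar = inverter(self.q)  # first half feeds the second
            self.q = inverter(self.q_bar)  # second half feeds back to the first

    def write(self, bit: int) -> None:
        """A write overpowers the loop, forcing both nodes to new values."""
        self.q = bit
        self.q_bar = inverter(bit)

    def read(self) -> int:
        return self.q
```

However many times `settle` runs, a cell holding a one keeps `q == 1` and `q_bar == 0`; only `write` changes the stored value.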

The relative complexity of static random access memory means that it offers much lower storage density, which in turn makes it much more expensive per byte stored. However, SRAM is also much faster than DRAM, so it is generally used only where speed matters most and small capacities suffice: in caches, both on-die and external to the processor. In contrast, DRAM is generally used for what is commonly called random access memory.

How Much Random Access Memory is Best?

Most operating systems specify a recommended or minimum amount of random access memory that a system needs to run the operating system. For example, the minimum RAM requirement for Windows Server 2019 is 512 MB (for the Server Core installation option), and for Windows 10 it is 1 GB (32-bit version) or 2 GB (64-bit version).

But running multiple applications will almost certainly require more RAM. Inadequate random access memory will slow the system down, and in some cases cause programs to crash or fail to operate as required. In practice, most such systems have at least 8 GB of random access memory installed.

The general rule of thumb about RAM is that more is better: a system with more random access memory will usually be able to run more applications at the same time, and operate faster, than a similar system with less random access memory.

But there are a few constraints. The most obvious is cost: a system that is only lightly used and works satisfactorily does not strictly need additional random access memory, although it may benefit from it, so buying more may not be worth the money.

Another constraint is the hardware of the system itself. That’s because every motherboard has a limit to the amount of random access memory that can be installed on it.

But in general, increasing the amount of random access memory in a system is one of the most cost-effective ways of increasing performance.

RAM History, Trends and Future Developments

Early RAM technology involved cathode ray tubes (similar to those found in older monitors and television sets) and magnetic cores. Today’s solid-state memory technology was invented towards the end of the 1960s.

Later developments include the introduction of double data rate (DDR) random access memory and successive generations of this technology: DDR2, DDR3, and DDR4. Each generation of DDR is faster and uses less energy than the previous one. JEDEC published the DDR5 standard in July 2020, and the first DDR5 products reached the market in 2021.
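The arithmetic behind “double data rate” can be sketched in Python. DDR memory transfers data on both the rising and falling edges of the clock, so it moves two words per clock cycle. The function below computes a theoretical peak rate for one channel, assuming the usual 64-bit data bus with error-correcting bits excluded; the function name is hypothetical, and real sustained throughput is lower.

```python
def peak_bandwidth_gb_s(io_clock_mhz: float, bus_width_bits: int = 64,
                        transfers_per_clock: int = 2) -> float:
    """Theoretical peak transfer rate of one memory channel, in GB/s.

    transfers_per_clock=2 models double data rate (both clock edges);
    pass 1 to model older single data rate memory.
    """
    transfers_per_second = io_clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9  # decimal gigabytes


if __name__ == "__main__":
    print(peak_bandwidth_gb_s(1600))  # DDR4-3200: 1600 MHz I/O clock
```

For example, DDR4-3200 memory runs its I/O clock at 1600 MHz, giving 3200 million transfers per second and a peak of 25.6 GB/s per 64-bit channel; the same clock at a single data rate would give only 12.8 GB/s.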

Next Generation RAM: Optane

One of the biggest changes on the horizon was a new technology from Intel called 3D XPoint, branded as Optane, although Intel announced in 2022 that it was winding down its Optane business.

3D XPoint is less expensive per gigabyte than DRAM, but somewhat slower. It therefore offers a low-cost alternative to DRAM in systems that require a huge amount of random access memory, such as those running in-memory databases. Equipping such systems with DRAM alone may be prohibitively expensive, but 3D XPoint could provide adequate performance at much lower cost.

An additional benefit of 3D XPoint is that it is non-volatile, meaning that in the event of a system crash or power cut the system can be restarted much more quickly. This is because data does not have to be read back into memory from slower long term storage, and data loss is more easily avoided.