
The reality of most compute and storage deployments is that all types of computer memory are constrained by an upper limit.

Perhaps no resource on a modern system is as constrained as memory, which is always needed by operating systems, applications and storage. Because memory is finite, it can eventually be fully consumed, which can lead to system instability or data loss.

Since the beginning of modern IT, the challenge of memory exhaustion has been handled by a diverse set of capabilities, usually grouped under the heading of memory management.

In this guide, EnterpriseStorageForum outlines what memory management is all about.

Memory management is all about making sure there is as much available memory space as possible for new programs, processes and data. As memory is used by multiple parts of a modern system, memory allocation and memory management can take on different forms.

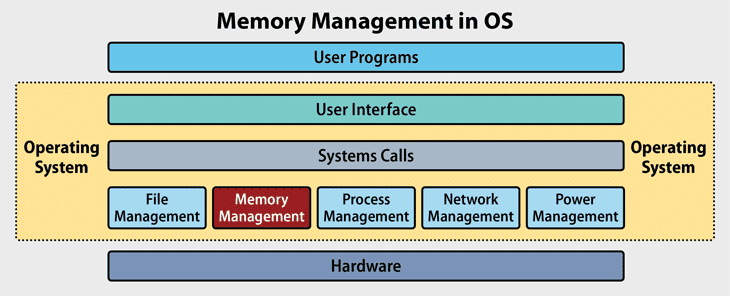

To be effective, a computer’s memory management function must sit between the hardware and the operating system.

Memory management is all about the allocation and optimization of finite physical resources. Memory is not consumed as one uniform pool: a 2GB RAM DIMM, for example, is not used as one large chunk of space. Rather, memory allocation techniques divide RAM into smaller, usable blocks of memory.
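Dividing a region of RAM into usable blocks can be illustrated with a toy fixed-size block allocator. This is a minimal sketch, not how any particular operating system works: the `BlockAllocator` class is hypothetical, it identifies blocks by their start offset within an assumed region of `total_bytes`, and it tracks free blocks with a simple free list.

```python
from collections import deque


class BlockAllocator:
    """Toy allocator that splits a fixed memory region into equal-sized blocks."""

    def __init__(self, total_bytes: int, block_size: int) -> None:
        if block_size <= 0 or total_bytes < block_size:
            raise ValueError("region must hold at least one block")
        self.block_size = block_size
        usable = (total_bytes // block_size) * block_size  # any remainder is left unused
        # Every block starts out free; blocks are identified by their start offset.
        self.free_list = deque(range(0, usable, block_size))
        self.allocated = set()

    def alloc(self) -> int:
        """Hand out the next free block, or raise MemoryError when none remain."""
        if not self.free_list:
            raise MemoryError("no free blocks left")
        addr = self.free_list.popleft()
        self.allocated.add(addr)
        return addr

    def free(self, addr: int) -> None:
        """Return a previously allocated block to the free list."""
        if addr not in self.allocated:
            raise ValueError(f"offset {addr} is not currently allocated")
        self.allocated.remove(addr)
        self.free_list.append(addr)


heap = BlockAllocator(total_bytes=4096, block_size=1024)  # four 1 KiB blocks
a = heap.alloc()  # 0
b = heap.alloc()  # 1024
heap.free(a)      # the block at offset 0 becomes reusable
```

Equal-sized blocks keep bookkeeping trivial, at the cost of wasting space when a request is smaller than a block.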

Memory management strategies within an operating system or application typically involve tracking what physical address space is available in RAM and allocating memory so that processes can be properly placed in, moved within and removed from memory address space.

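Static and dynamic memory allocation in an operating system is linked to different types of memory addresses. Fundamentally, there are two core types of memory addresses: logical (virtual) addresses, which programs use to reference memory, and physical addresses, which identify actual locations in RAM.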

The Memory Management Unit (MMU) is the core hardware component of a computing system that translates virtual (logical) addresses into physical addresses. The MMU is sometimes referred to as a Paged Memory Management Unit (PMMU).

The process by which the MMU converts a virtual address to a physical address is referred to as virtual address translation. It relies on page tables to map one address type to the other; on x86 systems, these tables form a hierarchy that can include a Page Directory Pointer Table (PDPT).
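A single-level page table is enough to show the core of virtual address translation: the high-order bits of the address select a page, and the low-order bits are an offset that carries over unchanged. This sketch assumes 4 KiB pages and models the page table as a hypothetical Python dict from virtual page number to physical frame number; real hardware, including the multi-level x86 tables that use a PDPT, walks several levels of tables.

```python
PAGE_SIZE = 4096                          # assumed 4 KiB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 low-order bits select a byte within a page


def translate(virtual_addr: int, page_table: dict) -> int:
    """Map a virtual address to a physical address using a single-level page table."""
    vpn = virtual_addr >> OFFSET_BITS        # virtual page number (high-order bits)
    offset = virtual_addr & (PAGE_SIZE - 1)  # byte offset within the page (low-order bits)
    if vpn not in page_table:
        raise LookupError(f"page fault: virtual page {vpn} is not mapped")
    frame = page_table[vpn]                  # physical frame number
    return (frame << OFFSET_BITS) | offset   # same offset, different page base


page_table = {0: 5, 1: 2}                    # hypothetical mappings: page -> frame
print(hex(translate(0x1234, page_table)))    # 0x2234
```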

The process is directly tied to page tables, which the operating system allocates and manages to match one address type to the other. To accelerate virtual address translation, the MMU uses a cache known as the Translation Lookaside Buffer (TLB), which holds recently used virtual-to-physical address mappings.
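The role of the TLB can be modeled as a small, fixed-capacity cache consulted before the page table. The `TLB` class below is a hypothetical sketch that assumes 4 KiB pages and least-recently-used (LRU) eviction; real TLBs are hardware structures whose size and replacement policy depend on the processor.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KiB pages


class TLB:
    """Toy translation lookaside buffer: an LRU cache in front of a page table."""

    def __init__(self, page_table: dict, capacity: int = 4) -> None:
        if capacity < 1:
            raise ValueError("TLB needs at least one entry")
        self.page_table = page_table  # virtual page number -> physical frame number
        self.capacity = capacity
        self.entries = OrderedDict()  # cached translations, least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_addr: int) -> int:
        vpn, offset = divmod(virtual_addr, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)         # mark as most recently used
            frame = self.entries[vpn]
        else:
            self.misses += 1
            frame = self.page_table[vpn]          # slow path: consult the page table
            self.entries[vpn] = frame
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used entry
        return frame * PAGE_SIZE + offset


tlb = TLB({0: 7, 1: 3, 2: 9}, capacity=2)
for addr in (0x0000, 0x0004, 0x1000, 0x0008):
    tlb.translate(addr)
print(tlb.hits, tlb.misses)  # 2 2
```

Repeated accesses to the same page hit the cache, which is why programs with good locality spend little time on page table lookups.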

Applications and data can be loaded into memory in a number of different ways, with the two core approaches being static and dynamic loading.

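When memory is allocated in a system, not all of the available memory is consumed in a linear manner, which can lead to fragmentation. There are two core types of memory fragmentation: internal and external. Internal fragmentation is unused space inside an allocated block that is larger than the request; external fragmentation is free memory split into gaps that are individually too small to satisfy a request.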
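Both kinds of fragmentation can be shown with a short calculation. The sketch assumes a hypothetical allocator that rounds every request up to whole 4 KiB blocks (producing internal fragmentation) and places contiguous requests into free holes with first-fit, one common placement strategy among several (exposing external fragmentation). The function names are illustrative only.

```python
from typing import List, Optional

BLOCK = 4096  # assumed allocation granularity in bytes


def internal_waste(request_bytes: int, block: int = BLOCK) -> int:
    """Bytes left unused inside the last block when a request is rounded up."""
    blocks_needed = -(-request_bytes // block)  # ceiling division
    return blocks_needed * block - request_bytes


def first_fit(holes: List[int], request_bytes: int) -> Optional[int]:
    """Index of the first free hole big enough for the request, or None."""
    for i, size in enumerate(holes):
        if size >= request_bytes:
            return i
    return None


# Internal fragmentation: a 5,000-byte request occupies two 4 KiB blocks.
print(internal_waste(5000))                # 3192

# External fragmentation: 8,000 free bytes in total, but no single hole fits 4,096.
holes = [3000, 2000, 3000]
print(sum(holes), first_fit(holes, 4096))  # 8000 None
```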

Within the logical address space, virtual memory is divided up using paging, meaning it is split into fixed-size units of memory referred to as pages. Page sizes vary depending on the underlying system architecture and operating system. Page table management can be intricate and complex.
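A quick calculation suggests why page table management gets complicated. Assuming a 32-bit virtual address space, 4 KiB pages and 4-byte page table entries (illustrative values; real sizes depend on the architecture and operating system), a single flat page table for one process would need:

$$
\frac{2^{32}\ \text{bytes}}{2^{12}\ \text{bytes per page}} = 2^{20}\ \text{pages},
\qquad
2^{20}\ \text{entries} \times 4\ \text{bytes} = 2^{22}\ \text{bytes} = 4\ \text{MiB}
$$

Because most processes use only a small part of their address space, systems commonly split page tables into multiple levels so that tables covering unused regions need not be allocated at all.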

Memory segmentation within the primary memory of a system is a complicated process in which a memory address is expressed as a segment plus an offset within that segment.

Each segment within system memory gets its own base address, in an effort to improve optimization and memory allocation. On x86 processors, segment registers are the primary mechanism for handling memory segmentation. Paging and segmentation are similar but do have a few distinct differences, the most basic being that pages are fixed in size, while segments vary in size.
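Segment-based translation can be sketched as a base-plus-offset calculation with a bounds check against the segment's limit. The `Segment` type and `seg_translate` function are hypothetical, and the segment table is keyed by name for readability; on x86 in protected mode, a segment register instead holds a selector that points to a descriptor containing the base and limit.

```python
from typing import Dict, NamedTuple


class Segment(NamedTuple):
    base: int   # physical address where the segment starts
    limit: int  # segment length in bytes


def seg_translate(segments: Dict[str, Segment], name: str, offset: int) -> int:
    """Turn a (segment, offset) logical address into a physical address."""
    seg = segments[name]
    if not 0 <= offset < seg.limit:  # bounds check against the segment limit
        raise ValueError(f"offset {offset:#x} lies outside segment {name!r}")
    return seg.base + offset


# Hypothetical segment table: segments differ in size, unlike fixed-size pages.
segments = {"code": Segment(base=0x1000, limit=0x400),
            "stack": Segment(base=0x8000, limit=0x200)}
print(hex(seg_translate(segments, "code", 0x10)))  # 0x1010
```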

Swapping is the process by which additional memory is claimed by an operating system from a storage device.

To enable swapping, an operating system defines an area of storage as “swap space”: storage where memory belonging to processes is held as physical memory is exhausted, and from which it is brought back into RAM when needed. Using swap space on traditional storage is a sub-optimal way of expanding available memory, as it incurs the overhead of transferring data to and from physical RAM. Additionally, traditional storage devices run at slower interface speeds than RAM.
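The interplay between RAM and swap space can be modeled with a toy pager. The `Pager` class is a hypothetical sketch: it assumes a fixed number of RAM frames, least-recently-used eviction and a deliberately tiny page size, with a dict standing in for swap space on a storage device. Real kernels use their own, more elaborate page replacement policies; the counters simply show that every swap-out and swap-in is a transfer to or from storage.

```python
from collections import OrderedDict

PAGE_BYTES = 4  # toy page size so the example stays small


class Pager:
    """Toy model of RAM frames backed by swap space, using LRU eviction."""

    def __init__(self, ram_frames: int) -> None:
        if ram_frames < 1:
            raise ValueError("need at least one RAM frame")
        self.ram_frames = ram_frames
        self.ram = OrderedDict()  # page number -> contents, least recently used first
        self.swap = {}            # stands in for swap space on a storage device
        self.swap_ins = 0
        self.swap_outs = 0

    def access(self, page: int) -> bytes:
        """Touch a page, swapping it in (and another page out) when needed."""
        if page in self.ram:
            self.ram.move_to_end(page)       # already resident: mark most recently used
            return self.ram[page]
        if page in self.swap:
            data = self.swap.pop(page)       # read the page back from storage
            self.swap_ins += 1
        else:
            data = bytes(PAGE_BYTES)         # first touch: zero-filled page
        if len(self.ram) >= self.ram_frames:
            victim, victim_data = self.ram.popitem(last=False)
            self.swap[victim] = victim_data  # write the LRU page out to storage
            self.swap_outs += 1
        self.ram[page] = data
        return data


pager = Pager(ram_frames=2)
for p in (1, 2, 3, 1):
    pager.access(p)
print(pager.swap_outs, pager.swap_ins)  # 2 1
```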

Swapping, however, is now being revisited as a way to expand memory with the emergence of faster PCIe SSDs. A single PCIe 4.0 lane runs at 16 GT/s, and NVMe SSDs commonly use four lanes. In contrast, a SATA-connected SSD has a maximum interface speed of 6.0 Gb/s.
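A back-of-the-envelope calculation shows how interface speed affects the cost of swapping. The figures are assumptions for illustration: SATA III at a 6.0 Gb/s line rate with 8b/10b encoding, and a four-lane PCIe 4.0 link at 16 GT/s per lane with 128b/130b encoding. The helper names are hypothetical, and the results are ceilings: they ignore protocol overhead beyond line encoding, as well as the drive's own media speed.

```python
def effective_gbps(gt_per_s: float, lanes: int, payload_bits: int, coded_bits: int) -> float:
    """Usable link rate in Gb/s after line-encoding overhead."""
    return gt_per_s * lanes * payload_bits / coded_bits


def seconds_to_move(n_bytes: int, gbps: float) -> float:
    """Idealized time to push n_bytes over a link of the given rate."""
    return n_bytes * 8 / (gbps * 1e9)


sata3 = effective_gbps(6.0, lanes=1, payload_bits=8, coded_bits=10)         # 4.8 Gb/s
pcie4_x4 = effective_gbps(16.0, lanes=4, payload_bits=128, coded_bits=130)  # about 63 Gb/s

one_gib = 2 ** 30
for name, rate in (("SATA III", sata3), ("PCIe 4.0 x4", pcie4_x4)):
    print(f"{name}: {rate:.1f} Gb/s, {seconds_to_move(one_gib, rate):.2f} s per GiB")
```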

Memory management is an essential element of all modern computing systems. With the continued use of virtualization and the need to optimize resource utilization, memory is constantly being allocated, removed, segmented, used and reused. Memory management techniques help mitigate the memory management errors that can lead to system and application instability and failures.

Sean Michael Kerner is an Internet consultant, strategist, and contributor to several leading IT business web sites.


Property of TechnologyAdvice. © 2026 TechnologyAdvice. All Rights Reserved
