
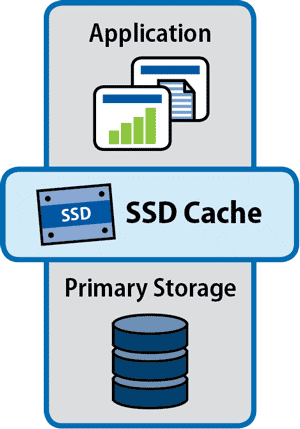

SSD caching is a computing and storage technology that stores frequently used and recently accessed data in a fast SSD cache. This addresses HDD I/O bottlenecks by increasing IOPS and reducing latency, which significantly shortens load and execution times. Caching works on both reads and writes, and particularly benefits read-intensive applications.

Caching is not new to hard drives. Operating systems such as Windows and Linux include native caching software. Caching software for HDD arrays also exists and increases overall HDD performance, but configuring it is expensive and complex.

SSD caching is also called flash memory caching. Although flash and SSD are not the same thing, most SSDs are built on NAND flash. In this architecture, the caching program sends data that does not meet the caching requirements to the HDDs, and temporarily stores high-I/O data on the NAND flash memory chips.
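To make "caching requirements" concrete, here is a minimal sketch of one possible admission rule, assuming that a block qualifies as high-I/O once it has been requested a set number of times. `AdmissionFilter` and its `threshold` parameter are hypothetical names for illustration; real caching programs apply their own, often more elaborate, criteria.

```python
from collections import Counter


class AdmissionFilter:
    """Hypothetical admission rule: a block counts as high-I/O (and is cached on
    flash) once it has been requested at least `threshold` times."""

    def __init__(self, threshold=2):
        self.threshold = threshold
        self.access_counts = Counter()  # block number -> requests seen so far

    def route(self, block):
        """Record a request for `block` and return which tier should serve it."""
        self.access_counts[block] += 1
        if self.access_counts[block] >= self.threshold:
            return "ssd"  # meets the caching requirement: keep a copy on NAND flash
        return "hdd"      # does not (yet): send the request to the hard drive


f = AdmissionFilter(threshold=2)
print([f.route(b) for b in [7, 7, 3, 7]])  # ['hdd', 'ssd', 'hdd', 'ssd']
```

A one-touch block (such as block 3 here) never displaces anything on flash, which keeps cold data on the HDDs.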

This temporary storage, or cache, accelerates read and write requests by keeping a copy of the data closer to the processor. Caches may consist of an entire SSD or a fraction of the memory cells within an SSD. Many SSDs already come with a caching storage area, which may be NAND and/or DRAM.

SSD caching improves performance by storing readily needed data so that it’s more quickly available.

To fully understand how SSD caching works, it helps to look at its main types: read caching, write-through, write-back, and write-around.
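In general terms, read caching copies data into the SSD as it is read; write-through writes to the SSD and the HDD at the same time; write-back acknowledges a write once it reaches the SSD and copies it to the HDD later; and write-around sends writes straight to the HDD, bypassing the cache. The toy model below sketches these behaviors under simplifying assumptions: both tiers are in-memory dictionaries, capacity and eviction are ignored, and `HybridVolume`, `flush()`, and the policy strings are hypothetical names chosen for illustration.

```python
class HybridVolume:
    """Toy model of an SSD cache in front of an HDD with a selectable write policy.

    Both tiers are plain dicts mapping block number -> data. Capacity limits,
    eviction, and persistence are deliberately left out of this sketch.
    """

    POLICIES = ("write-through", "write-back", "write-around")

    def __init__(self, policy):
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.ssd = {}       # cache tier
        self.hdd = {}       # backing store
        self.dirty = set()  # blocks on the SSD not yet copied to the HDD (write-back only)

    def read(self, block):
        # Read caching: serve from the SSD on a hit; on a miss, fetch from the HDD
        # and keep a copy on the SSD for next time.
        if block in self.ssd:
            return self.ssd[block]
        data = self.hdd[block]
        self.ssd[block] = data
        return data

    def write(self, block, data):
        if self.policy == "write-through":
            # Update cache and backing store together.
            self.ssd[block] = data
            self.hdd[block] = data
        elif self.policy == "write-back":
            # Update only the SSD now; the HDD copy is brought up to date by flush().
            self.ssd[block] = data
            self.dirty.add(block)
        else:  # write-around
            # Bypass the cache and drop any stale cached copy so reads see new data.
            self.hdd[block] = data
            self.ssd.pop(block, None)

    def flush(self):
        # Copy dirty blocks from the SSD to the HDD (only write-back creates any).
        for block in sorted(self.dirty):
            self.hdd[block] = self.ssd[block]
        self.dirty.clear()


wb = HybridVolume("write-back")
wb.write(1, b"new")
print(1 in wb.hdd)  # False: the HDD has not received the write yet
wb.flush()
print(wb.hdd[1])    # b'new'
```

The `dirty` set is what makes write-back both fast and riskier: until a flush runs, those blocks exist only on the SSD.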

SSD caching improves storage performance by keeping frequently accessed data immediately available. When the host issues a data request, the caching software checks the SSD cache first to see whether the data already resides there.

If not, the caching software uses algorithms to predict data access patterns. These algorithms identify the least and most frequently used data, as well as the least and most recently accessed data, which enables the software to place copies of high-priority active data in fast cache memory.
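The cache-first lookup and the two families of heuristics described above (recency and frequency) can be sketched as a small read cache. This is a minimal illustration, assuming a fixed capacity counted in blocks and a `backend_read` callback standing in for the HDD; the class name, parameters, and the `"lru"`/`"lfu"` switch are hypothetical. LRU evicts the block accessed longest ago, while this LFU variant evicts the least frequently requested block and breaks ties by recency.

```python
from collections import Counter


class ReadCache:
    """Cache-first read path with a pluggable eviction policy ("lru" or "lfu")."""

    def __init__(self, capacity, backend_read, policy="lru"):
        self.capacity = capacity          # maximum number of cached blocks (>= 1)
        self.backend_read = backend_read  # stands in for a read from the HDD
        self.policy = policy
        self.blocks = {}                  # block -> data currently on the SSD
        self.last_used = {}               # block -> logical time of last request
        self.use_count = Counter()        # block -> total requests seen, cached or not
        self.clock = 0
        self.hits = 0
        self.misses = 0

    def _victim(self):
        if self.policy == "lru":
            # Least recently used: oldest last-request time.
            return min(self.blocks, key=lambda b: self.last_used[b])
        # Least frequently used, ties broken by recency.
        return min(self.blocks, key=lambda b: (self.use_count[b], self.last_used[b]))

    def read(self, block):
        self.clock += 1
        self.use_count[block] += 1
        self.last_used[block] = self.clock
        if block in self.blocks:          # 1. check the SSD cache first
            self.hits += 1
            return self.blocks[block]
        self.misses += 1                  # 2. miss: read from the HDD
        data = self.backend_read(block)
        if len(self.blocks) >= self.capacity:
            del self.blocks[self._victim()]  # 3. make room according to the policy
        self.blocks[block] = data         # 4. promote a copy into the cache
        return data


trace = [1, 1, 1, 2, 3, 1]
for policy in ("lru", "lfu"):
    cache = ReadCache(capacity=2, backend_read=lambda b: f"hdd-block-{b}", policy=policy)
    for b in trace:
        cache.read(b)
    print(policy, cache.hits, cache.misses)
# lru 2 4
# lfu 3 3
```

In this trace, block 1 is the busiest but not the most recent when block 3 arrives, so LRU evicts it and pays an extra miss, while LFU keeps it. Which heuristic wins depends on the workload.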

Not every application benefits from SSD caching. Applications that issue primarily sequential reads and writes, such as video streaming, do not need random I/O caching, because HDDs already handle sequential I/O comparatively well. Likewise, workloads whose accesses follow no predictable pattern, such as reads spread randomly across the entire data set, gain little from SSD caching because there are no patterns for the algorithms to reliably predict.
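A quick simulation illustrates the point. The sketch below replays three synthetic access traces through a plain LRU cache and reports the fraction of requests served from cache. All parameters (10,000 blocks, a 500-block cache, a 400-block hot set receiving 90% of requests) are assumptions chosen for illustration, not figures from any benchmark.

```python
import random
from collections import OrderedDict


def lru_hit_ratio(accesses, capacity):
    """Fraction of accesses served from an LRU cache holding `capacity` blocks."""
    cache = OrderedDict()
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # refresh recency on a hit
        else:
            cache[block] = None            # promote the block on a miss
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(accesses)


# Assumed, illustrative parameters.
N_BLOCKS, CAPACITY, N_REQUESTS, HOT_BLOCKS = 10_000, 500, 50_000, 400
rng = random.Random(42)

workloads = {
    # 90% of requests go to a small hot set that fits in the cache
    "skewed": [rng.randrange(HOT_BLOCKS) if rng.random() < 0.9 else rng.randrange(N_BLOCKS)
               for _ in range(N_REQUESTS)],
    # every block equally likely: no pattern to exploit
    "uniform random": [rng.randrange(N_BLOCKS) for _ in range(N_REQUESTS)],
    # a streaming scan that loops over a data set larger than the cache
    "sequential": [i % N_BLOCKS for i in range(N_REQUESTS)],
}

for name, trace in workloads.items():
    print(f"{name:>14}: {lru_hit_ratio(trace, CAPACITY):.2f}")
```

Under these assumptions the skewed trace is served mostly from cache, the uniform trace hits only about as often as the cache-to-data ratio (roughly 5%), and the looping sequential scan never hits at all, because each block is evicted long before it comes around again.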

SSD caching may occur in any type of device that uses SSDs:

SSD caching can significantly improve performance and lower latency for enterprise applications and large virtualized networks.

Virtualized environments are a good example. SSD caching accelerates I/O performance, and virtualized environments generate large volumes of random I/O because they consolidate many different server functions and applications. These include VDI deployments with hundreds to thousands of virtual desktops, and virtualized computing networks with dozens of different application servers and hundreds of dynamic virtual machines.

All these virtualized entities share the same underlying storage media, mostly HDDs, since it is not cost-effective to replace HDD arrays with all-flash arrays (AFAs) to support virtualized environments. AFAs support extremely high I/O rates, but even large virtualized environments do not necessarily generate anywhere near as much I/O as an AFA can support, now or in the future.

Such an environment does not justify the high cost of an all-flash array. Within an HDD or hybrid array that underlies a virtualized network, however, SSD caching enables the hard drives to support high I/O requirements, even for intensive virtualized workloads.
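A back-of-the-envelope formula shows why a cached hybrid array can deliver much of the benefit of flash: if a fraction `h` of reads hit the SSD cache, average read latency is roughly `h * t_ssd + (1 - h) * t_hdd`. The sketch below evaluates it with assumed, illustrative figures (0.1 ms per SSD read, 8 ms per random HDD read), not measurements of any particular device; `effective_latency_ms` is a hypothetical helper name.

```python
def effective_latency_ms(hit_ratio, ssd_ms, hdd_ms):
    """Average read latency when a fraction `hit_ratio` of reads is served by the SSD cache."""
    return hit_ratio * ssd_ms + (1 - hit_ratio) * hdd_ms


# Assumed device latencies, for illustration only.
SSD_MS, HDD_MS = 0.1, 8.0
for h in (0.0, 0.5, 0.9, 0.99):
    print(f"hit ratio {h:.2f}: {effective_latency_ms(h, SSD_MS, HDD_MS):.2f} ms")
```

With these assumptions the loop prints about 8.00, 4.05, 0.89, and 0.18 ms. Latency falls steeply only at high hit ratios, which is why caching pays off most for workloads with a concentrated set of hot data.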

Server-based SSDs, as opposed to networked array-based storage, also work in virtualized networks. In these cases, the host server uses SSD caches in its direct-attached storage to serve multiple VMs. Because the SSD cache is physically close to where the I/O originates, latency drops even further. The drawback is that if the server fails, the cached data may be inaccessible, and perhaps even unrecoverable, depending on the type of write cache. However, if IT backs up, snapshots, or replicates the cached data and can rapidly restore it to another server, this is not a major drawback.

“Best” is a complex concept in SSD caching, because many different technologies can issue caching software commands. These include VMware and Hyper-V, specific applications, third-party software, Windows and Linux, SSD storage controllers, and storage arrays. For example:

Christine Taylor is a writer and content strategist. She brings technology concepts to vivid life in white papers, ebooks, case studies, blogs, and articles, and is particularly passionate about the explosive potential of B2B storytelling. She also consults with small marketing teams on how to build excellent content strategy and create strong content with limited resources.

Enterprise Storage Forum offers practical information on data storage and protection from several different perspectives: hardware, software, on-premises services and cloud services. It also includes storage security and deep looks into various storage technologies, including object storage and modern parallel file systems. ESF is an ideal website for enterprise storage admins, CTOs and storage architects to reference in order to stay informed about the latest products, services and trends in the storage industry.

Property of TechnologyAdvice. © 2026 TechnologyAdvice. All Rights Reserved
