The reality of most compute and storage deployments is that every type of computer memory has a finite capacity.

Perhaps no resource on a modern system is as constrained as memory, which is always needed by operating systems, applications and storage. Because memory is not unlimited, it can eventually be fully consumed, which can lead to system instability or data loss.

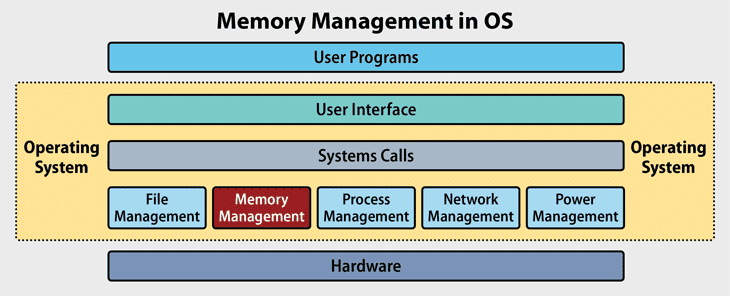

Since the beginning of modern IT, the challenge of memory exhaustion has been handled by a diverse set of capabilities, usually grouped under the heading of memory management.

In this guide, EnterpriseStorageForum outlines what memory management is all about.

What Is Memory Management?

Memory management is all about making sure that as much memory space as possible is available for new programs and processes to execute and for the data they use. As memory is used by multiple parts of a modern system, memory allocation and memory management can take on different forms.

- Operating System – Operating systems like Microsoft Windows and Linux can make use of physical RAM as well as swap space on storage drives to manage a total pool of available memory.

- Programming Languages – The C programming language requires developers to manage memory directly (for example, allocating and releasing heap memory with malloc() and free()), while other languages, such as Java and C#, provide automatic memory management through garbage collection.

- Applications – Applications consume and manage memory, but their memory management capabilities are often limited by the underlying language and operating system.

- Storage memory management – With new NVMe storage drives, operating systems can use faster storage to help extend available memory and enable more persistent forms of memory management.
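To illustrate the automatic memory management mentioned for Java and C#, the sketch below uses Python, which is also garbage collected, as a stand-in. The program allocates an object and simply drops its last reference; it never calls anything like free(). A weak reference (which does not keep the object alive) is used to check whether the runtime has reclaimed it. The `Buffer` class and the function name are hypothetical, chosen only for this illustration.

```python
import gc
import weakref


class Buffer:
    """An ordinary object holding about 1 MiB of data."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)


def is_reclaimed_after_release() -> bool:
    buf = Buffer(1024 * 1024)   # allocated without any explicit malloc-style call
    probe = weakref.ref(buf)    # a weak reference does not keep the object alive
    del buf                     # drop the only strong reference; no free() is ever called
    gc.collect()                # give runtimes without reference counting a chance to collect
    return probe() is None      # True once the runtime has reclaimed the object


print(is_reclaimed_after_release())  # True
```

In C, the equivalent program would have to call free() itself, and forgetting to do so would leak the memory.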

To be effective, a computer’s memory management function must sit between the hardware and the operating system.

How Does Memory Management Work?

Memory management is all about the allocation and optimization of finite physical resources. Memory is not used as a single, undivided resource; for example, a 2 GB RAM DIMM is not handed out as one large chunk of space. Rather, memory allocation techniques divide RAM into smaller, usable blocks.

Memory management strategies within an operating system or application typically involve tracking which physical address space is available in RAM and allocating memory to place processes into, move them within, and remove them from the memory address space.
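As a minimal sketch of placing and removing processes, the toy model below tracks RAM as a list of free "holes" and uses a first-fit policy: each process goes into the lowest-addressed hole large enough to hold it. First fit is only one of several placement policies, and the class and method names are hypothetical; real operating systems use considerably more elaborate allocators.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PhysicalMemory:
    """Toy model of RAM as a range of addresses split into free holes and process regions."""

    size: int
    free: list[tuple[int, int]] = field(default_factory=list)        # (start, length), sorted by start
    owned: dict[str, tuple[int, int]] = field(default_factory=dict)  # pid -> (start, length)

    def __post_init__(self) -> None:
        if not self.free:
            self.free = [(0, self.size)]  # initially, all of RAM is one free hole

    def place(self, pid: str, length: int) -> int | None:
        """Place a process using first fit: the lowest-addressed hole that is big enough."""
        for i, (start, hole) in enumerate(self.free):
            if hole >= length:
                if hole == length:
                    del self.free[i]                                # hole used up exactly
                else:
                    self.free[i] = (start + length, hole - length)  # shrink the hole
                self.owned[pid] = (start, length)
                return start
        return None  # no single hole is large enough

    def remove(self, pid: str) -> None:
        """Remove a process and return its region to the free list, merging neighbors."""
        self.free.append(self.owned.pop(pid))
        self.free.sort()
        merged: list[tuple[int, int]] = []
        for start, length in self.free:
            if merged and merged[-1][0] + merged[-1][1] == start:
                prev_start, prev_len = merged[-1]
                merged[-1] = (prev_start, prev_len + length)  # adjacent holes become one
            else:
                merged.append((start, length))
        self.free = merged
```

For example, in a 1,000-byte memory holding processes at 0-299, 300-499 and 500-599, removing the middle process leaves two separate holes; a later 500-byte request can then fail even though more than 500 bytes are free in total.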

Types of Memory Addresses

Static and dynamic memory allocation in an operating system is linked to different types of memory addresses. Fundamentally, there are two core types of memory addresses:

- Physical addresses – A physical address identifies an actual memory location within system RAM and is expressed as a number (commonly written in hexadecimal).

- Logical addresses – Also referred to as virtual addresses, logical addresses are what operating systems and applications use to execute code; they are an abstraction of the physical address space.

How Does the MMU Convert a Virtual Address to a Physical Address?

The Memory Management Unit (MMU) is the core hardware component of a computing system that translates virtual (logical) addresses to physical addresses. In modern processors the MMU is typically built into the CPU itself, and it is sometimes referred to as a Paged Memory Management Unit (PMMU).

The process by which the MMU converts a virtual address to a physical address is referred to as virtual address translation. It relies on page tables, which on x86 processors are organized as a hierarchy that can include a Page Directory Pointer Table (PDPT).

The process is directly tied to page table management, since the page tables hold the mapping from each virtual page to a physical page frame. To accelerate virtual address translation, the MMU uses a cache known as the Translation Lookaside Buffer (TLB), which holds recently used virtual-to-physical mappings so that the page tables do not need to be consulted on every memory access.
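The translation steps above can be sketched in software. This toy model assumes 4 KiB pages, a single-level page table stored as a dictionary, and a four-entry TLB with first-in, first-out eviction; all of these are simplifying assumptions, since real MMUs do this in hardware with multi-level tables (such as the x86 hierarchy that includes the PDPT). The `MMU` and `PageFault` names are hypothetical.

```python
from __future__ import annotations

PAGE_SIZE = 4096                           # assumed 4 KiB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 offset bits for 4 KiB pages


class PageFault(Exception):
    """Raised when a virtual page has no mapping in the page table."""


class MMU:
    """Toy single-level MMU with a small TLB in front of the page table."""

    def __init__(self, page_table: dict[int, int], tlb_entries: int = 4) -> None:
        self.page_table = page_table       # virtual page number -> physical frame number
        self.tlb: dict[int, int] = {}      # cache of recently used translations
        self.tlb_entries = tlb_entries
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr: int) -> int:
        vpn = vaddr >> OFFSET_BITS         # virtual page number
        offset = vaddr & (PAGE_SIZE - 1)   # position within the page (unchanged by translation)
        if vpn in self.tlb:
            self.hits += 1
            frame = self.tlb[vpn]
        else:
            self.misses += 1
            if vpn not in self.page_table:
                raise PageFault(hex(vaddr))
            frame = self.page_table[vpn]   # consult the page table on a TLB miss
            if len(self.tlb) >= self.tlb_entries:
                self.tlb.pop(next(iter(self.tlb)))  # evict the oldest TLB entry (FIFO)
            self.tlb[vpn] = frame
        return (frame << OFFSET_BITS) | offset


mmu = MMU({0: 5, 1: 9, 2: 3})        # virtual pages 0-2 mapped to frames 5, 9 and 3
print(hex(mmu.translate(0x1234)))    # 0x9234 (TLB miss, page table consulted)
print(hex(mmu.translate(0x1FFF)))    # 0x9fff (TLB hit)
```

Only the page number is translated; the offset within the page is carried over unchanged, which is why the low 12 bits of each result match the input address.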

Memory Allocation: Static vs. Dynamic Loading

Applications and data can be loaded into memory in a number of different ways, with the two core approaches being static and dynamic loading.

- Static Loading – The entire program is loaded into memory before it executes. This approach is commonly used with structured programming languages such as C.

- Dynamic Loading – Code is loaded into memory only when it is needed. This approach is used in object-oriented programming languages such as Java, which loads classes on demand.
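The difference between the two approaches can be sketched with a small simulation. The "code units" below are hypothetical placeholders, and calling each loader function stands in for reading that unit's code from storage into memory. The static loader loads everything up front; the dynamic loader loads a unit the first time it is used.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical code units; calling a loader stands in for reading its code into memory.
CODE_UNITS: dict[str, Callable[[], str]] = {
    "parser": lambda: "parser code",
    "renderer": lambda: "renderer code",
    "exporter": lambda: "exporter code",
}


class StaticLoader:
    """Loads every code unit into memory before the program starts executing."""

    def __init__(self) -> None:
        self.loaded = {name: load() for name, load in CODE_UNITS.items()}

    def use(self, name: str) -> str:
        return self.loaded[name]


class DynamicLoader:
    """Loads a code unit only the first time it is actually used."""

    def __init__(self) -> None:
        self.loaded: dict[str, str] = {}

    def use(self, name: str) -> str:
        if name not in self.loaded:
            self.loaded[name] = CODE_UNITS[name]()  # load on demand
        return self.loaded[name]
```

If a program only ever uses the parser, the static loader still holds all three units in memory, while the dynamic loader holds one. The trade-off is a small delay the first time each unit is needed.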

Memory Fragmentation

When memory is allocated in a system, not all of the available memory is consumed in a contiguous, linear manner, which can lead to fragmentation. There are two core types of memory fragmentation: internal and external.

- Internal Fragmentation – Memory is allocated to a process or application in a block larger than it requested, and the unused portion inside that block is wasted because it cannot be allocated to anything else.

- External Fragmentation – As memory is allocated and then deallocated, small free spaces can be left between allocated blocks. These memory holes, or “fragments,” may each be too small for another process, even when the total free memory would be sufficient.
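Both kinds of fragmentation can be quantified with a little arithmetic. The sketch below assumes memory is handed out in fixed-size blocks for the internal case, and for the external case uses one common measure, 1 minus (largest free hole divided by total free memory), where 0.0 means all free memory is in one contiguous hole. The function names are hypothetical.

```python
from __future__ import annotations


def internal_fragmentation(request_sizes: list[int], block_size: int) -> int:
    """Bytes wasted inside allocated blocks when requests are rounded up to whole blocks."""
    wasted = 0
    for size in request_sizes:
        blocks = (size + block_size - 1) // block_size  # round up to whole blocks
        wasted += blocks * block_size - size            # allocated but unused
    return wasted


def external_fragmentation(free_holes: list[int]) -> float:
    """1 - largest hole / total free; higher values mean free memory is more scattered."""
    total = sum(free_holes)
    if total == 0:
        return 0.0
    return 1 - max(free_holes) / total
```

With 4,096-byte blocks, requests of 100, 4,096 and 5,000 bytes waste 3,996 + 0 + 3,192 = 7,188 bytes internally. Free holes of 300, 50 and 400 bytes give an external fragmentation of about 0.47: 750 bytes are free, but no single request larger than 400 bytes can be satisfied.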

Paging

Within logical address space, virtual memory is divided up using paging, meaning it is split into fixed-size units of memory referred to as pages. Physical memory is divided into page frames of the same size, so any page can be placed in any free frame. Page sizes can differ depending on the underlying system architecture and operating system. The process of page table management can be intricate and complex.

- For more information on how paging is handled in Linux, check out the full kernel.org documentation.

- On Windows, Microsoft provides details on its paging process in its official documentation.
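Page size involves a trade-off, which a short calculation makes concrete. This sketch assumes a hypothetical process just over 10 MiB and compares 4 KiB pages with 2 MiB pages (both sizes are common on x86-64 systems, but the choice here is only illustrative). Larger pages mean fewer pages to map, but more unused space in the last, partially filled page.

```python
from __future__ import annotations


def page_layout(process_bytes: int, page_size: int) -> tuple[int, int]:
    """Return (pages needed, unused bytes in the last page) for a process of the given size."""
    if process_bytes < 0 or page_size <= 0:
        raise ValueError("process_bytes must be non-negative and page_size positive")
    pages = (process_bytes + page_size - 1) // page_size  # round up to whole pages
    return pages, pages * page_size - process_bytes


PROCESS_BYTES = 10 * 1024 * 1024 + 1  # hypothetical process just over 10 MiB
for label, size in (("4 KiB", 4 * 1024), ("2 MiB", 2 * 1024 * 1024)):
    pages, unused = page_layout(PROCESS_BYTES, size)
    print(f"{label} pages: {pages} pages to map, {unused} bytes unused in the last page")
```

Here 4 KiB pages need 2,561 mappings and waste 4,095 bytes, while 2 MiB pages need only 6 mappings but leave 2,097,151 bytes of the last page unused.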

Segmentation

Memory segmentation divides a program's memory into variable-sized segments that correspond to logical units, such as code, data and the stack. An address is then expressed as a segment plus an offset within that segment.

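Each segment has its own base address and length (limit), which lets the system place segments independently and detect accesses that fall outside a segment. On x86 processors, segment registers select the segment in use, although modern 64-bit operating systems rely mainly on paging and use a largely flat segment model. Paging and segmentation are similar, but the key difference is that pages have a fixed size, while segments vary in size with the logical unit they contain.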
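A minimal sketch of segment-based translation follows. It assumes a hypothetical segment table mapping each segment name to a base physical address and a limit; a logical address is a (segment, offset) pair, and any offset at or beyond the limit is rejected. The table contents and the `SegmentationFault` name are illustrative only.

```python
from __future__ import annotations


class SegmentationFault(Exception):
    """Raised when an offset falls outside its segment's limit."""


# Hypothetical segment table: segment name -> (base physical address, limit in bytes).
SEGMENT_TABLE: dict[str, tuple[int, int]] = {
    "code": (0x10000, 0x4000),
    "data": (0x20000, 0x1000),
    "stack": (0x30000, 0x2000),
}


def translate(segment: str, offset: int) -> int:
    """Map a (segment, offset) logical address to a physical address with a bounds check."""
    base, limit = SEGMENT_TABLE[segment]
    if not 0 <= offset < limit:
        raise SegmentationFault(f"offset {offset:#x} outside {segment} segment")
    return base + offset  # physical address = segment base + offset
```

Unlike paging, the segments here have different lengths (16 KiB, 4 KiB and 8 KiB), matching the logical units they hold.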

Swapping

Swapping is the process by which an operating system uses a storage device to obtain additional memory, moving data that is not actively in use out of RAM and onto storage.

With swapping, an operating system defines an area of storage as “swap space.” As physical memory is exhausted, the operating system moves inactive pages or processes from RAM into swap space, and moves them back into RAM when they are needed again. Using swap space on traditional storage is a sub-optimal way of expanding available memory, as it incurs the overhead of transferring data to and from physical RAM. Additionally, traditional storage devices run at much slower interface speeds than RAM.
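The swap-out and swap-in cycle can be modeled in a few lines. This toy sketch assumes RAM holds a fixed number of pages and that the least recently used (LRU) page is the one moved to swap space when RAM is full; real operating systems typically use cheaper approximations of LRU. The class name and page contents are hypothetical.

```python
from __future__ import annotations

from collections import OrderedDict


class SwappingMemory:
    """Toy model: RAM holds a fixed number of pages; LRU pages are swapped out to storage."""

    def __init__(self, ram_frames: int) -> None:
        self.ram_frames = ram_frames
        self.ram: OrderedDict[int, str] = OrderedDict()  # page -> contents, oldest use first
        self.swap: dict[int, str] = {}                   # pages currently in swap space
        self.swap_ins = 0
        self.swap_outs = 0

    def access(self, page: int) -> str:
        if page in self.ram:
            self.ram.move_to_end(page)                   # mark as most recently used
            return self.ram[page]
        if page in self.swap:
            contents = self.swap.pop(page)               # bring the page back from storage
            self.swap_ins += 1
        else:
            contents = f"page {page}"                    # first access: a new page
        if len(self.ram) >= self.ram_frames:
            victim, data = self.ram.popitem(last=False)  # least recently used page
            self.swap[victim] = data                     # write it out to swap space
            self.swap_outs += 1
        self.ram[page] = contents
        return contents
```

With room for two pages and the access sequence 1, 2, 1, 3, 2, page 2 is swapped out when page 3 arrives, then swapped back in (pushing page 1 out). Each of those transfers is the storage round trip that makes swapping slower than RAM.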

Swapping, however, is now being revisited as a way to expand memory with the emergence of faster PCIe SSDs. PCIe 4.0 signals at 16 GT/s per lane, and NVMe SSDs commonly use four lanes. In contrast, a SATA-connected SSD has a maximum connection speed of 6.0 Gb/s.
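The interface gap can be worked out with simple arithmetic. This sketch assumes a PCIe 4.0 x4 link (16 GT/s per lane, 128b/130b line encoding) and a SATA III link (6 Gb/s, 8b/10b line encoding). The results are raw interface limits after encoding overhead only; actual SSD throughput is lower and depends on the drive.

```python
def effective_gbps(transfer_rate_gt_s: float, lanes: int, payload_bits: int, line_bits: int) -> float:
    """Per-lane rate x lanes, minus line-encoding overhead, in Gb/s."""
    return transfer_rate_gt_s * lanes * payload_bits / line_bits


# PCIe 4.0: 16 GT/s per lane, 128b/130b encoding; a typical NVMe SSD uses 4 lanes.
pcie4_x4 = effective_gbps(16.0, 4, 128, 130)
# SATA III: 6 Gb/s over a single link, 8b/10b encoding.
sata3 = effective_gbps(6.0, 1, 8, 10)

print(f"PCIe 4.0 x4: {pcie4_x4:.1f} Gb/s (~{pcie4_x4 / 8:.2f} GB/s)")
print(f"SATA III:    {sata3:.1f} Gb/s (~{sata3 / 8:.2f} GB/s)")
```

Under these assumptions, a PCIe 4.0 x4 link offers roughly 63 Gb/s (about 7.9 GB/s) versus 4.8 Gb/s (0.6 GB/s) for SATA III, a difference of more than 13 times.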

Why Do We Need Memory Management?

Memory management is an essential element of all modern computing systems. With the continued use of virtualization and the need to optimize resource utilization, memory is constantly being allocated, removed, segmented, used and reused. Memory management techniques help mitigate memory errors that can lead to system and application instability and failures.

Advantages

- Maximizes the availability of memory to programs

- Enables re-use and reclamation of memory that is not actively in use

- Can help extend available memory beyond physical RAM through swapping

Disadvantages

- Can lead to fragmentation of memory resources

- Adds complexity to system operations

- Introduces potential performance latency